Working on a book about Citizen Diplomacy.

Living in the woods.

Also - being mom to four boys and one baby girl 🤘🏻

Memory efficiency and inference speed, without compromising model quality.

LFMs have been benchmarked on real hardware, showing that they can outperform Transformers.

Liquid AI have also just released Hyena Edge👇

Maps AI value expressions across real-world interactions to inform grounded AI value alignment

arxiv.org/abs/2504.15236

Highlights limitations of next-token prediction and proposes noise-injection strategies for open-ended creativity

arxiv.org/abs/2504.15266

GitHub: github.com/chenwu98/alg...

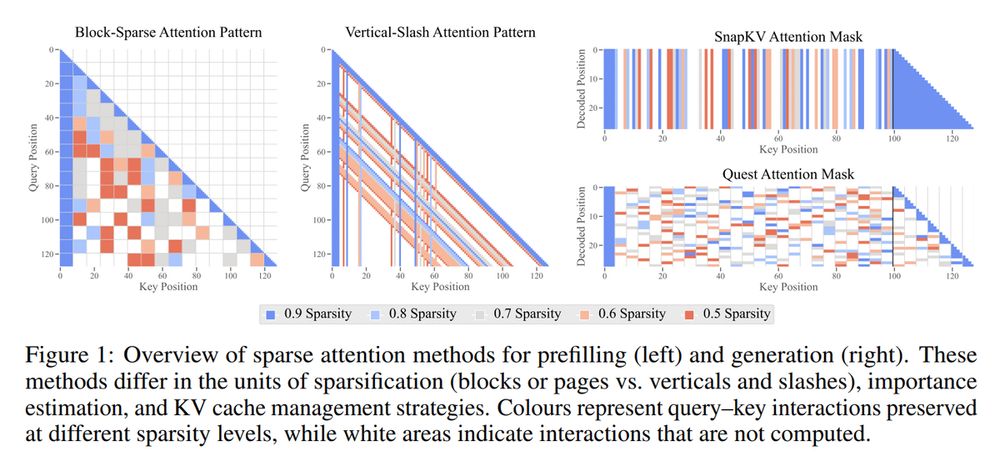

Investigates sparse attention trade-offs and proposes scaling laws for long-context LLMs

arxiv.org/abs/2504.17768

Presents PHD-Transformer to enable efficient long-context pretraining without inflating memory costs

arxiv.org/abs/2504.14992

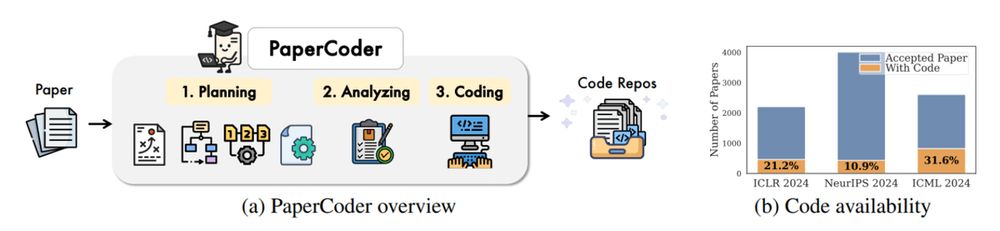

Automates end-to-end ML paper-to-code translation with a multi-agent framework

arxiv.org/abs/2504.17192

Code: github.com/going-doer/P...

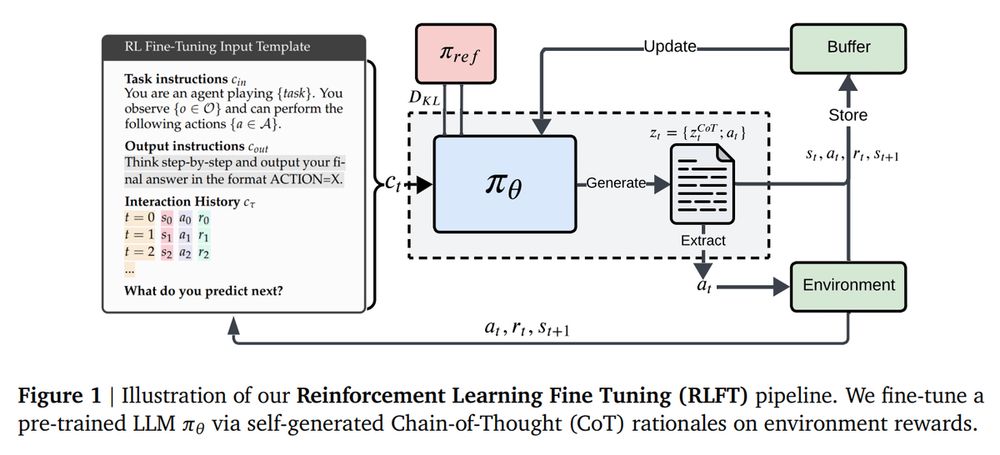

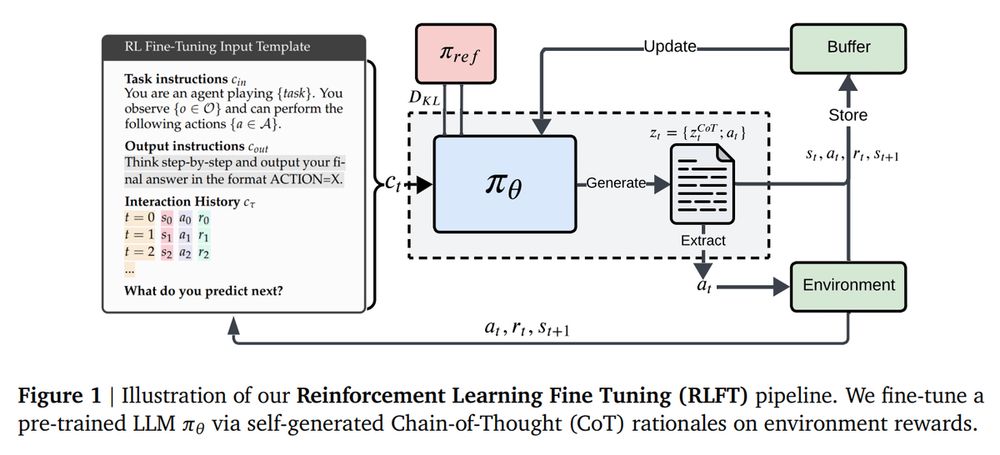

Analyzes how RL fine-tuning improves exploration and decision-making abilities of LLMs

arxiv.org/abs/2504.16078

Introduces a method for self-evolving LLMs at test-time using reward signals without labeled data

arxiv.org/abs/2504.16084

GitHub: github.com/PRIME-RL/TTRL

▪️ Test-Time Reinforcement Learning

▪️ LLMs are Greedy Agents

▪️ Paper2Code

▪️ Efficient Pretraining Length Scaling

▪️ The Sparse Frontier

▪️ Roll the dice & look before you leap

▪️ Discovering and Analyzing Values in Real-World Language Model Interactions

🧵

Advances multimodal reasoning with a hybrid RL paradigm balancing reward guidance and rule-based strategies.

arxiv.org/abs/2504.16656

Model: huggingface.co/Skywork/Skyw...

It's a generative verifier that scales step-wise reward modeling with minimal supervision.

arxiv.org/abs/2504.16828

GitHub: github.com/mukhal/think...

Expands vision-language models to handle long-context video and image comprehension with specialized training tricks and efficient scaling.

arxiv.org/abs/2504.15271

Project page: nvlabs.github.io/EAGLE/

Builds state-of-the-art mathematical reasoning models with OpenMathReasoning dataset.

arxiv.org/abs/2504.16891

Builds a universal audio foundation model for understanding, generating, and conversing in audio and text, achieving SOTA across diverse benchmarks.

arxiv.org/abs/2504.18425

Code, model checkpoints, and evaluation toolkit: github.com/MoonshotAI/K...

Achieves strong reasoning capabilities with tiny models by applying cost-efficient low-rank adaptation and reinforcement learning.

arxiv.org/abs/2504.15777
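
For readers new to low-rank adaptation, here is a minimal sketch of the general LoRA idea: a frozen weight matrix `W` plus a trainable low-rank update `B @ A` scaled by `alpha / rank`. It is not taken from the paper; the function names, shapes, and values are hypothetical and chosen only to illustrate why the approach is cheap.

```python
# Illustrative sketch of generic LoRA, NOT the paper's implementation.
# All names and sizes below are hypothetical.

def lora_param_counts(d_out, d_in, rank):
    """Trainable parameters: full fine-tuning vs. a rank-`rank` LoRA update."""
    full = d_out * d_in            # every entry of W is trainable
    lora = rank * (d_in + d_out)   # A is (rank x d_in), B is (d_out x rank)
    return full, lora


def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def lora_forward(x, W, A, B, alpha, rank):
    """Compute W x + (alpha / rank) * B (A x); W stays frozen, A and B are trained."""
    base = matvec(W, x)
    low_rank = matvec(B, matvec(A, x))
    scale = alpha / rank
    return [b + scale * l for b, l in zip(base, low_rank)]


if __name__ == "__main__":
    full, lora = lora_param_counts(4096, 4096, 8)
    print(f"full: {full:,} trainable params, LoRA: {lora:,}")
```

With `B` initialized to zeros, the adapted layer starts out identical to the frozen base layer, so training begins from the pretrained behavior.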

▪️ Hyena Edge

▪️ Tina: Tiny Reasoning Models via LoRA

▪️ Kimi-Audio

▪️ AIMO-2 winning solution

▪️ Eagle 2.5

▪️ Trillion-7B

▪️ Surya OCR

▪️ ThinkPRM

▪️ Skywork R1V2

🧵

Yet even with the loud launch and 50 big-name partners, Google's A2A remains underappreciated. Why?

Here are several reasons 👇

www.turingpost.com/p/a2a

Introduces the Autonomous Generalist Scientist (AGS) and proposes the possibility of new scaling laws in research

arxiv.org/abs/2503.22444

Redesigns large-scale MoE serving with disaggregated attention and FFN modules to boost GPU utilization and cut costs through a tailored pipeline and M2N communication

arxiv.org/abs/2504.02263

A z-score-based dynamic gradient clipping method that reduces loss spikes during LLM pretraining more effectively than traditional methods

arxiv.org/abs/2504.02507

Code: github.com/bluorion-com...
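
To make the idea of z-score-based clipping concrete, here is a rough sketch under stated assumptions. It is not the authors' code: the class name, the EMA statistics, the warmup handling, and the default threshold are all hypothetical choices. It tracks a running mean and variance of the gradient norm and scales the gradient down only when the current norm is a statistical outlier.

```python
import math


class ZScoreClipper:
    """Hypothetical z-score gradient-norm clipper (illustration only)."""

    def __init__(self, z_threshold=2.5, decay=0.97, warmup_steps=5):
        self.z_threshold = z_threshold    # assumed outlier cutoff
        self.decay = decay                # assumed EMA decay for running stats
        self.warmup_steps = warmup_steps  # steps used to seed mean and variance
        self.mean = 0.0
        self.var = 0.0
        self._warmup = []

    def clip_scale(self, grad_norm):
        """Return a factor in (0, 1] by which to multiply the gradients."""
        # During warmup, only collect norms; no clipping is applied.
        if len(self._warmup) < self.warmup_steps:
            self._warmup.append(grad_norm)
            if len(self._warmup) == self.warmup_steps:
                n = len(self._warmup)
                self.mean = sum(self._warmup) / n
                self.var = sum((g - self.mean) ** 2 for g in self._warmup) / n
            return 1.0

        std = math.sqrt(self.var) + 1e-8
        z = (grad_norm - self.mean) / std
        # Outliers are pulled back to mean + z_threshold * std.
        clipped = self.mean + self.z_threshold * std if z > self.z_threshold else grad_norm

        # Update the running statistics with the clipped norm so that a
        # single spike does not inflate them.
        self.mean = self.decay * self.mean + (1 - self.decay) * clipped
        self.var = self.decay * self.var + (1 - self.decay) * (clipped - self.mean) ** 2
        return clipped / grad_norm if grad_norm > 0 else 1.0
```

In a training loop, the returned factor would multiply every gradient before the optimizer step. Unlike a fixed-threshold clip, the cutoff here moves with the recent history of gradient norms.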

Proposes “agentic knowledgeable self-awareness” for agent models, enabling dynamic self-regulation of knowledge use during planning and decision-making

arxiv.org/abs/2504.03553

Code: github.com/zjunlp/KnowS...

A compositional generalist-specialist framework for GUI automation. Introduces Mixture-of-Grounding and Proactive Hierarchical Planning

arxiv.org/abs/2504.00906

Code: github.com/simular-ai/A...

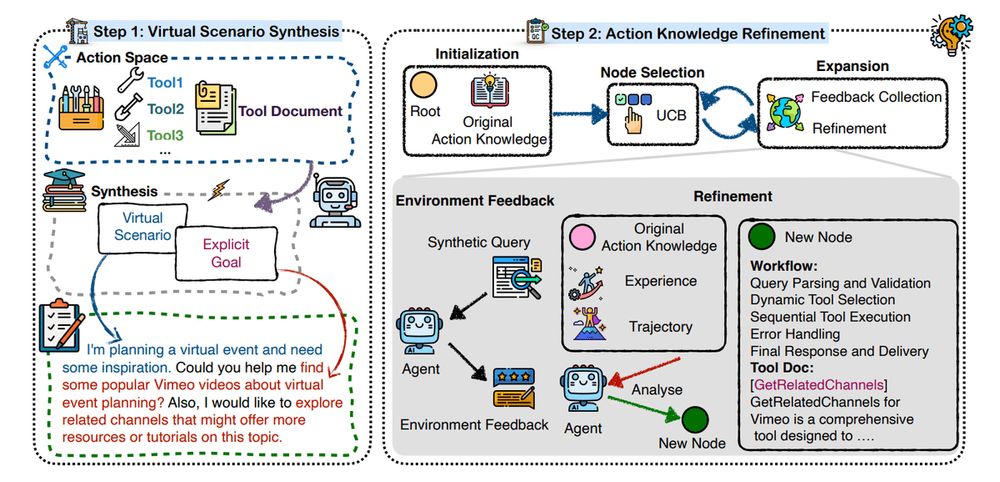

Equips LLM-based agents with scenario simulation and MCTS-based refinement of action knowledge

arxiv.org/abs/2504.03561

Open-source implementation of a minimalist RL training approach for LLMs, mirroring DeepSeek-R1-Zero’s effectiveness with fewer steps

arxiv.org/abs/2503.24290

GitHub: github.com/Open-Reasone...

Benchmarks scaling strategies across tasks and models, showing mixed results depending on domain and verifier use

arxiv.org/abs/2504.00294

Code: github.com/microsoft/eu...
