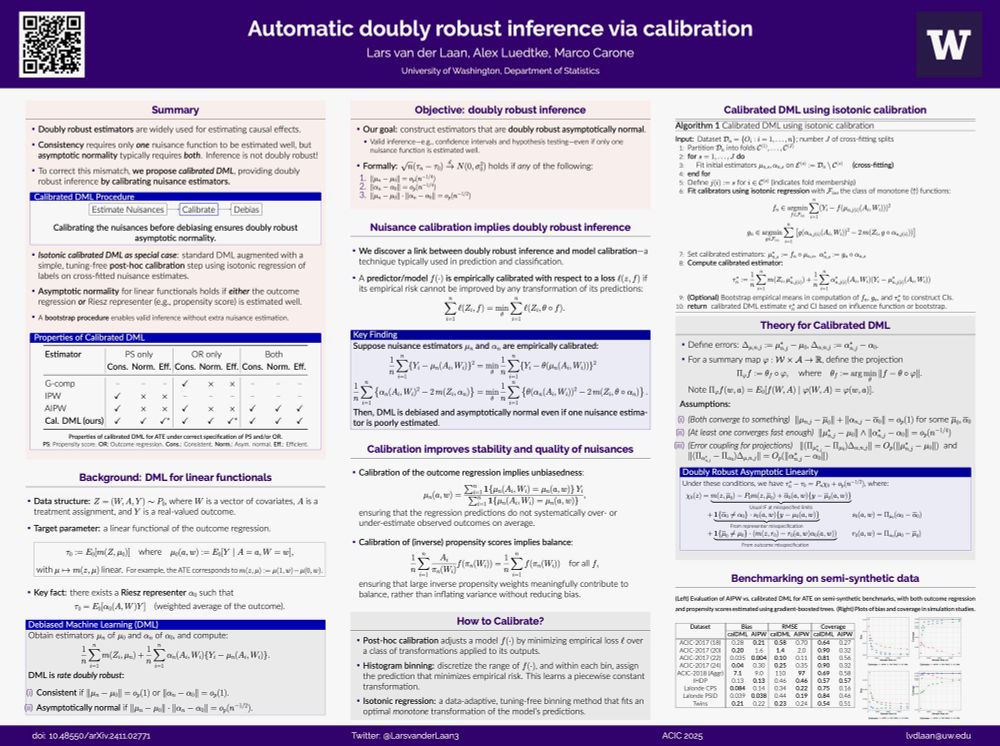

Lars van der Laan

@larsvanderlaan3.bsky.social

570 followers

120 following

24 posts

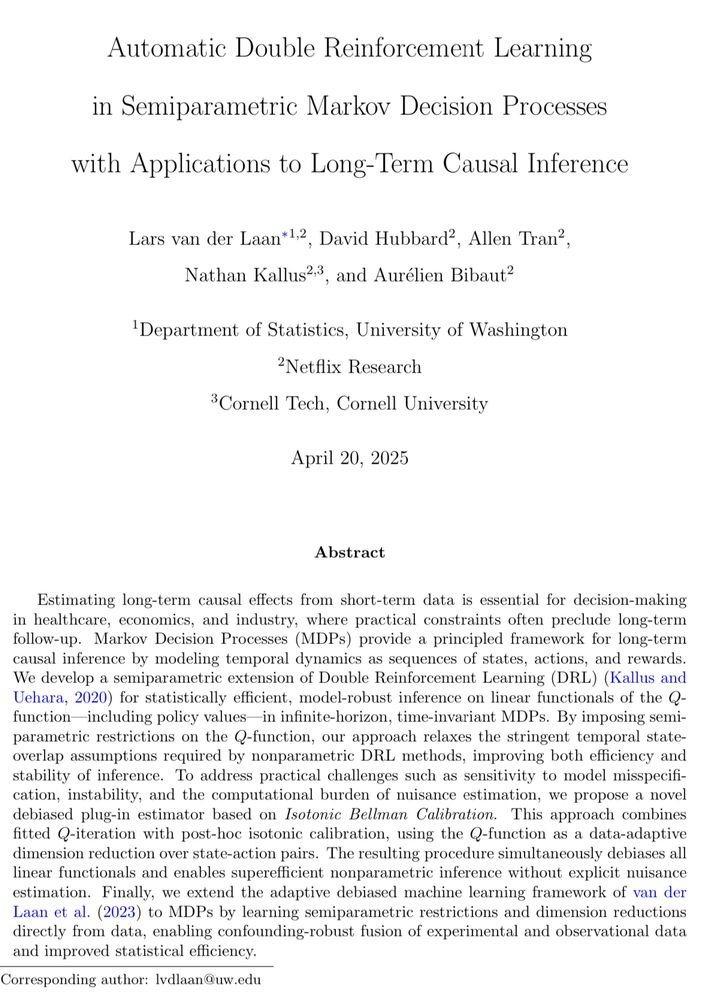

Ph.D. Student @uwstat; Research fellowship @Netflix; visiting researcher @UCJointCPH; M.A. @UCBStatistics - machine learning; calibration; semiparametrics; causal inference.

https://larsvanderlaan.github.io

Reposted by Lars van der Laan

Alex Luedtke

@alexluedtke.bsky.social

· May 23

Reposted by Lars van der Laan

apoorva lal

@apoorvalal.com

· May 19

Reposted by Lars van der Laan

arxiv.stat.ME

@arxiv-stat-me.bsky.social

· May 13

arxiv.stat.ME

@arxiv-stat-me.bsky.social

· Nov 12

arxiv stat.ML

@arxiv-stat-ml.bsky.social

· Feb 11

Reposted by Lars van der Laan

arxiv stat.ML

@arxiv-stat-ml.bsky.social

· Feb 11