Lucy Dowdall

@lucydowdall.bsky.social

200 followers

130 following

18 posts

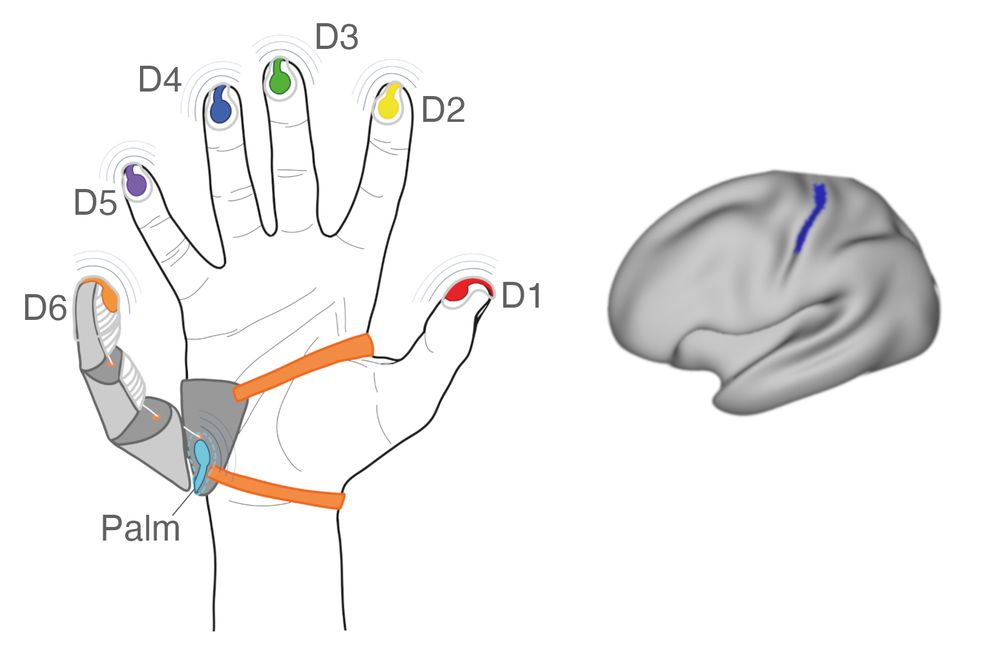

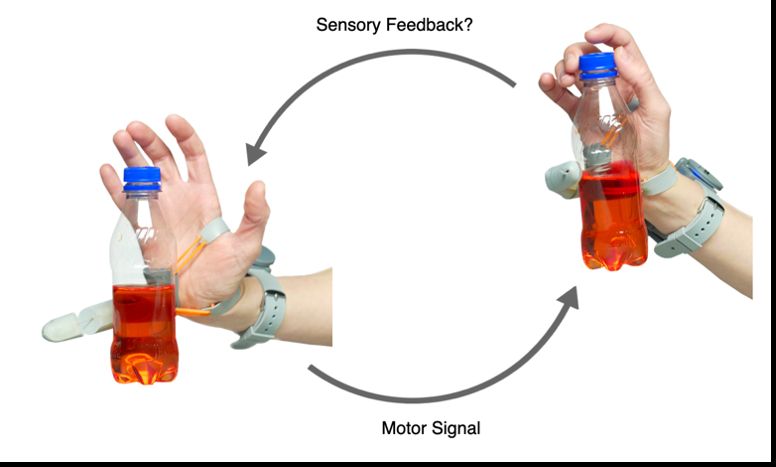

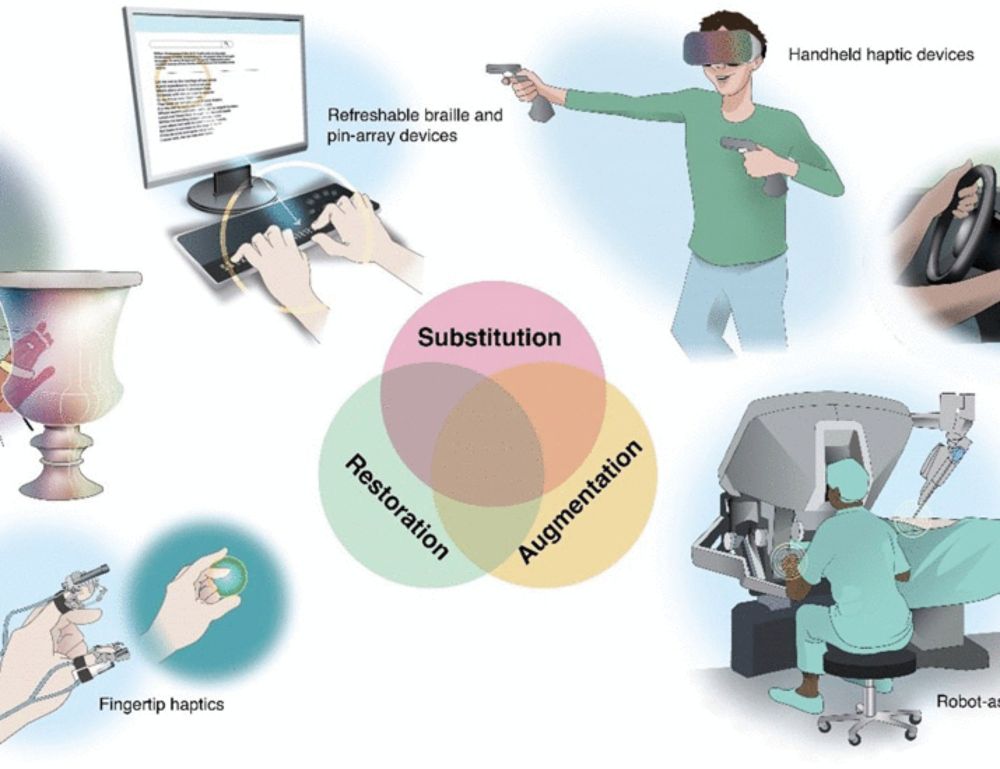

Cognitive Neuroscience PhD Student 🧠 | Plasticity Lab, University of Cambridge 🦾 | sensory feedback, sensorimotor learning, and neurotech | she/her

Reposted by Lucy Dowdall

Lucy Dowdall

@lucydowdall.bsky.social

· Jul 8

Reposted by Lucy Dowdall

Lucy Dowdall

@lucydowdall.bsky.social

· Jun 19

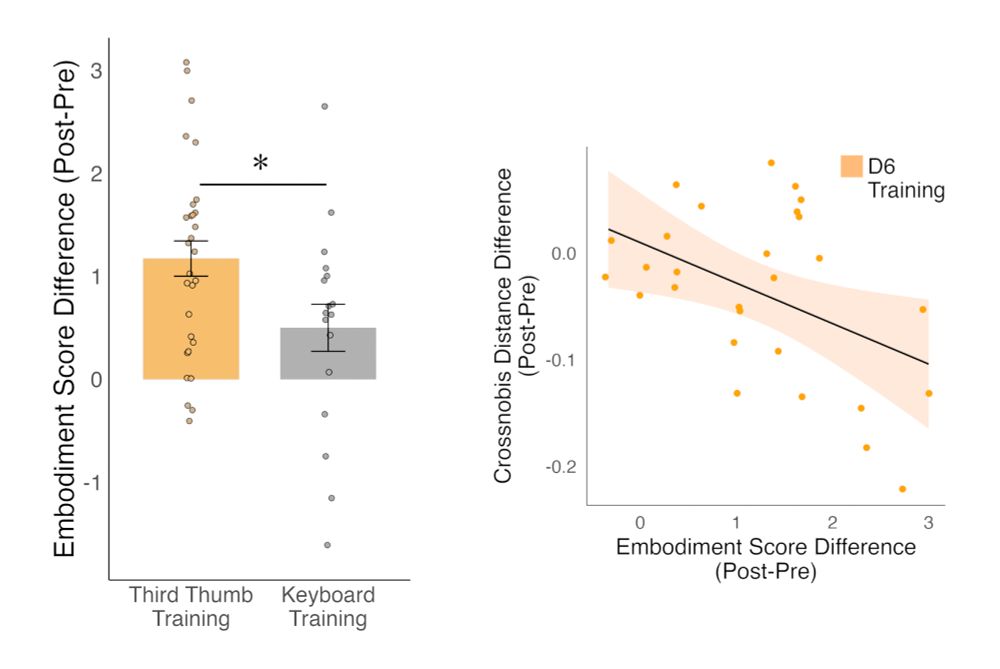

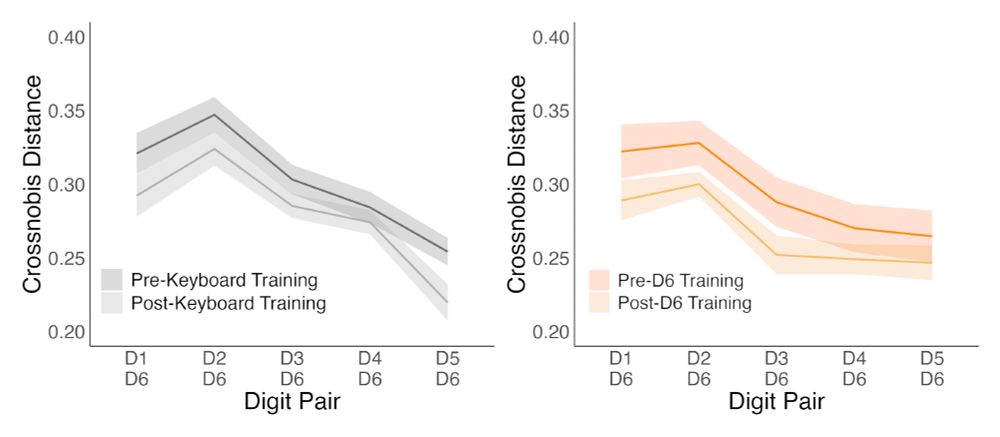

Lucy Dowdall

@lucydowdall.bsky.social

· Nov 25