We demonstrate that human preferences can be reverse engineered effectively by pipelining LLMs to optimise upstream preambles via reinforcement learning 🧵⬇️

We demonstrate that human preferences can be reverse engineered effectively by pipelining LLMs to optimise upstream preambles via reinforcement learning 🧵⬇️

Check it out at youtu.be/DL7qwmWWk88?...

Check it out at youtu.be/DL7qwmWWk88?...

All credits to @hannahrosekirk.bsky.social A.Whitefield, P.Röttger, A.M.Bean, K.Margatina, R.Mosquera-Gomez, J.Ciro, @maxbartolo.bsky.social H.He, B.Vidgen, S.Hale

Catch Hannah tomorrow at neurips.cc/virtual/2024/poster/97804

All credits to @hannahrosekirk.bsky.social A.Whitefield, P.Röttger, A.M.Bean, K.Margatina, R.Mosquera-Gomez, J.Ciro, @maxbartolo.bsky.social H.He, B.Vidgen, S.Hale

Catch Hannah tomorrow at neurips.cc/virtual/2024/poster/97804

Reach out (or pop by the @cohere.com booth) if you want to chat about human feedback, robustness and reasoning, prompt optimisation, adversarial data, glitch tokens, evaluation, or anything else!

Reach out (or pop by the @cohere.com booth) if you want to chat about human feedback, robustness and reasoning, prompt optimisation, adversarial data, glitch tokens, evaluation, or anything else!

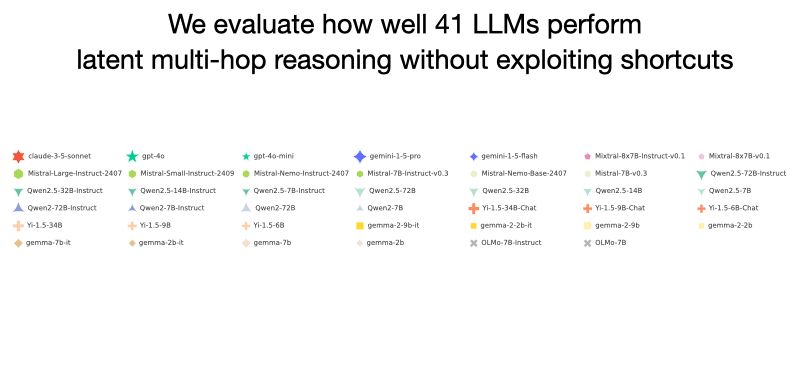

Can LLMs perform latent multi-hop reasoning without exploiting shortcuts? We find the answer is yes – they can recall and compose facts not seen together in training or guessing the answer, but success greatly depends on the type of the bridge entity (80% for country, 6% for year)! 1/N

Procedural knowledge in pretraining drives LLM reasoning ⚙️🔢

🧵⬇️

@clefourrier.bsky.social - a platform that lets you easily compare models as judges side-by-side and vote for the best evaluation

Check out the live leaderboard and start voting now 🤗

@clefourrier.bsky.social - a platform that lets you easily compare models as judges side-by-side and vote for the best evaluation

Check out the live leaderboard and start voting now 🤗