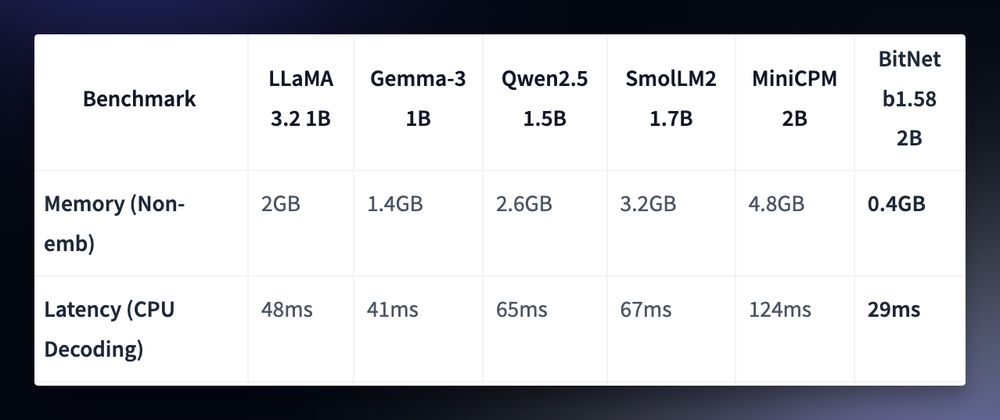

Microsoft released a 1-bit LLM with performance comparable to traditional models (LLaMA, Qwen, Smol), but with a 0.4 GB memory footprint and lower latency.
