METR

@metr.org

1.4K followers

1 following

120 posts

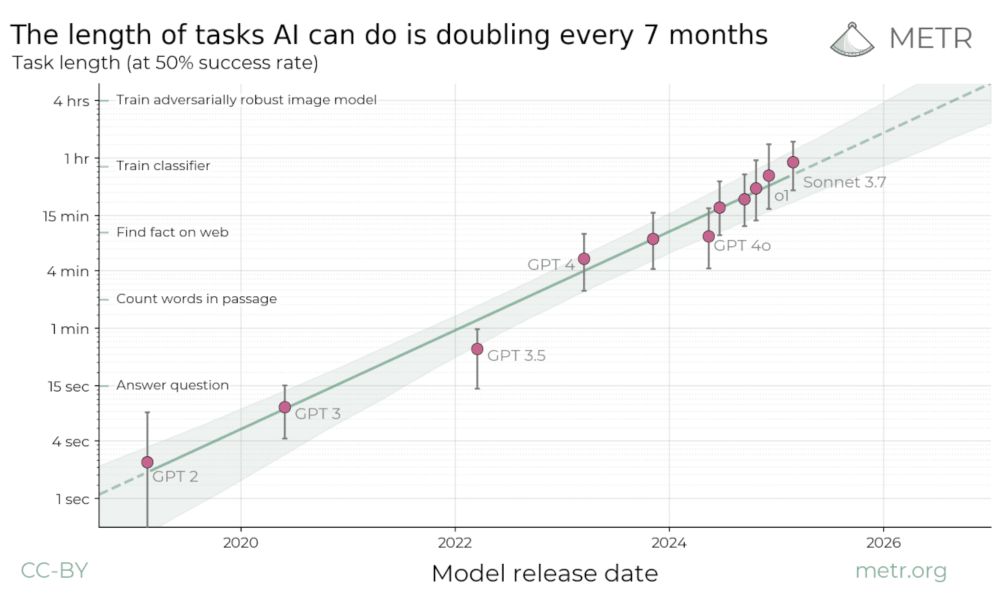

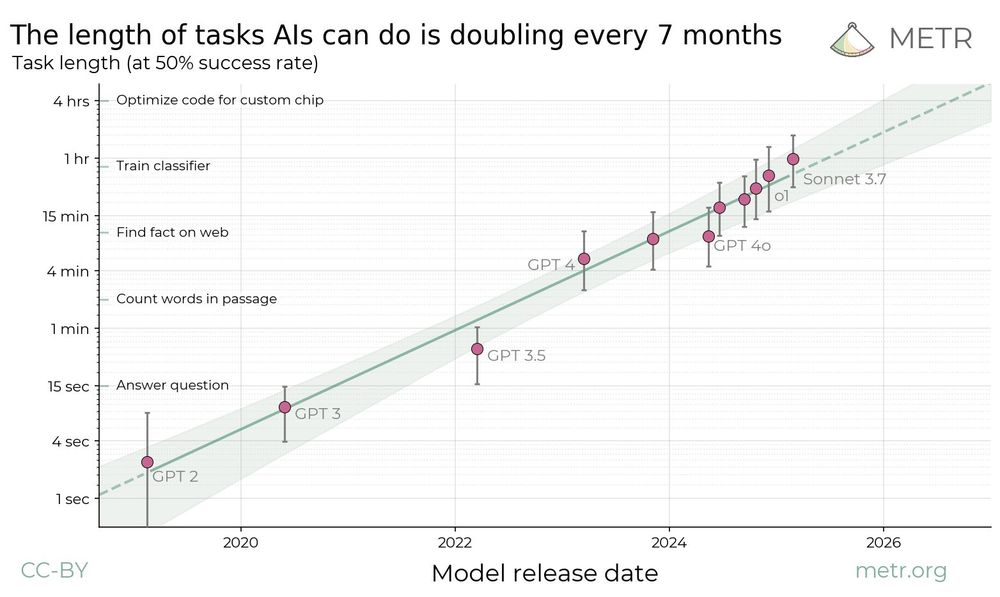

METR is a research nonprofit that builds evaluations to empirically test AI systems for capabilities that could threaten catastrophic harm to society.