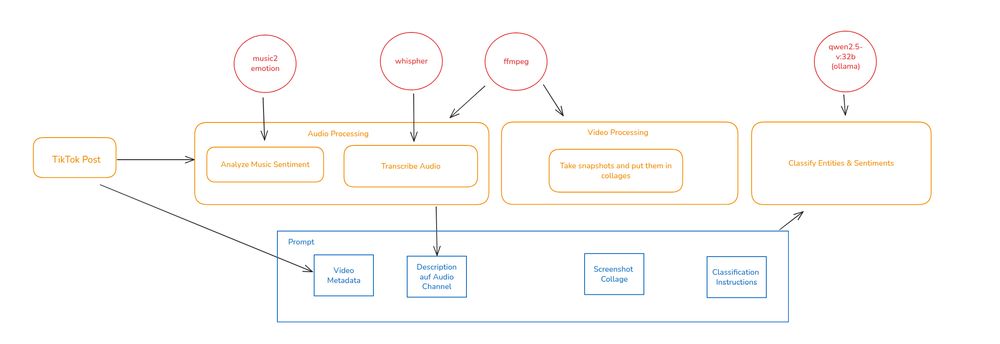

Our resources are limited and I favor local models over opaque cloud services. So I built a pipeline around Ollama, using a Mac with 32GB RAM and an M2 processor, to first process the different channels of the posts […]

[Original post on chaos.social]

🔗 Read more: tinyurl.com/rbfjkfmu
