Shitposter

Data wrangler

- Founded Material Indicators in 2019

- Gave up PhD in Materials Science to pursue own business full-time in 2020

- Wearing all hats, from data collection, analysis, and processing pipelines all the way to data serving

- Self-taught & self-hosting

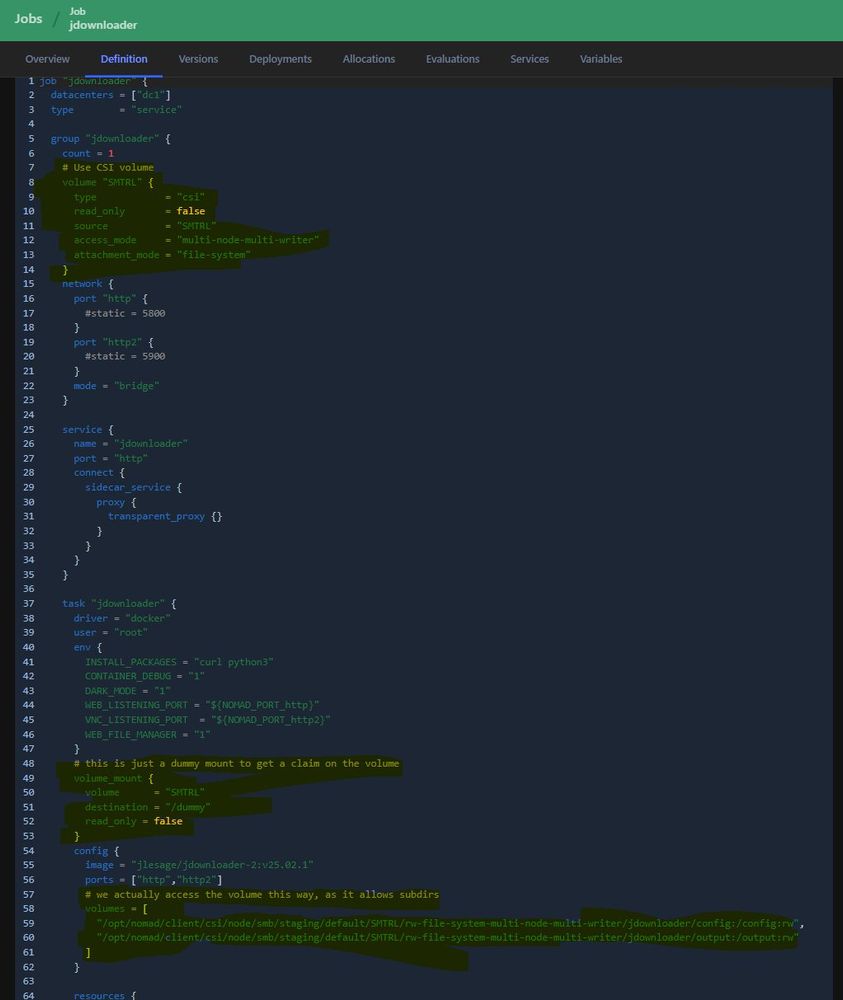

#Nomad #Hashistack #CSI #Docker

Today, we got to see the 'Strawberry Moon' and here is a very short video, so you can witness it too.

@mtrl-scientist.bsky.social

#moongazing #strawberrymoon #fullmoon #seestar #telescope

#Privacy

www.zeropartydata.es/p/localhost-...

Docker builds used to take 10min, but simply replacing

```sh
python setup.py install
```

with

```sh
uv pip install . --system
```

in my Dockerfile resulted in the build taking only 26s!

The Fish Head Nebula was particularly nice!

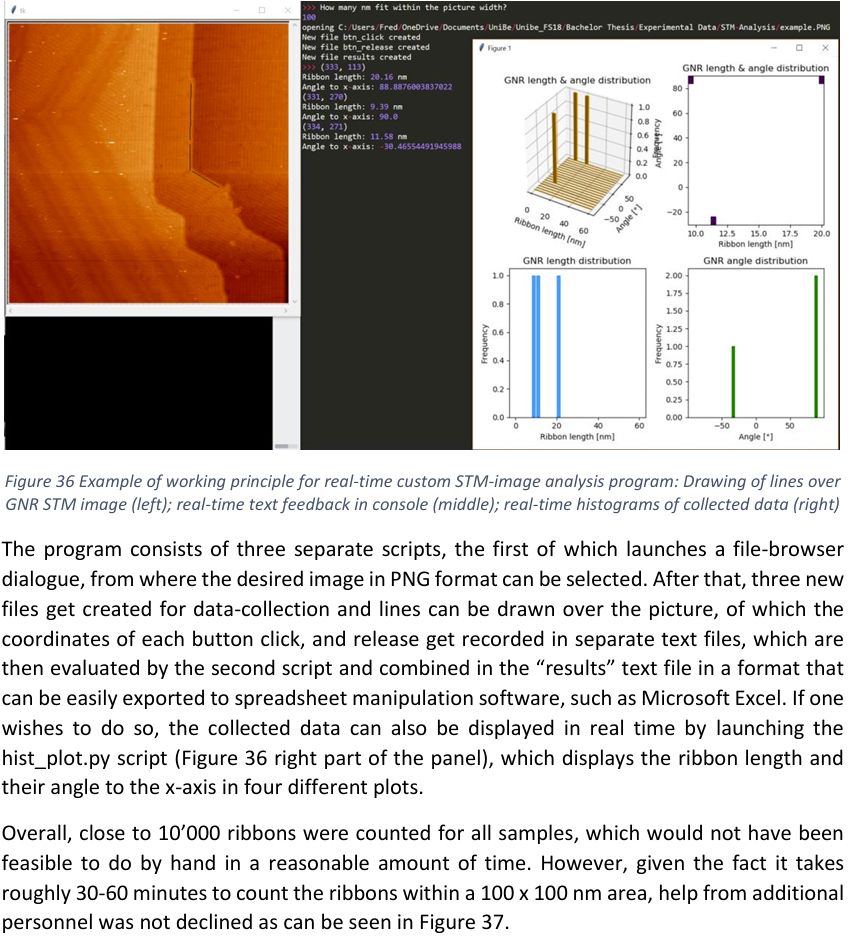

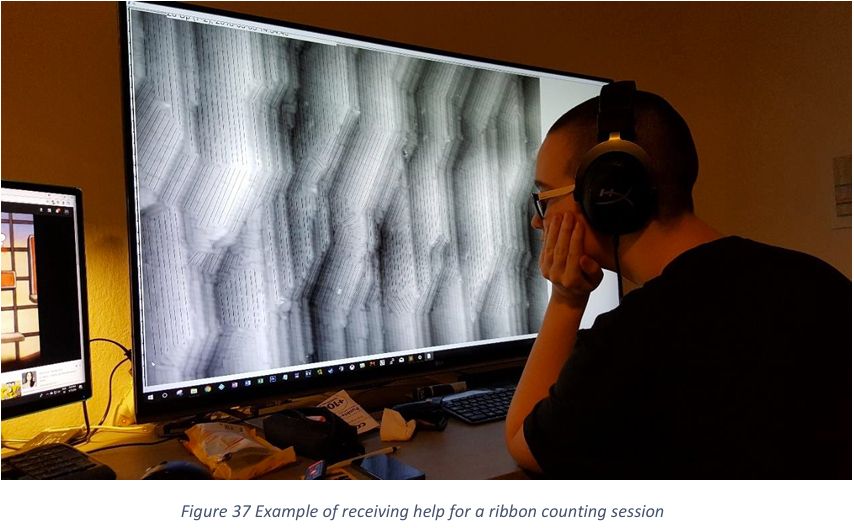

Was looking up something from my old thesis and stumbled across my first time writing a Python GUI, with @ninasch.bsky.social helping to mark nanoribbons 😅

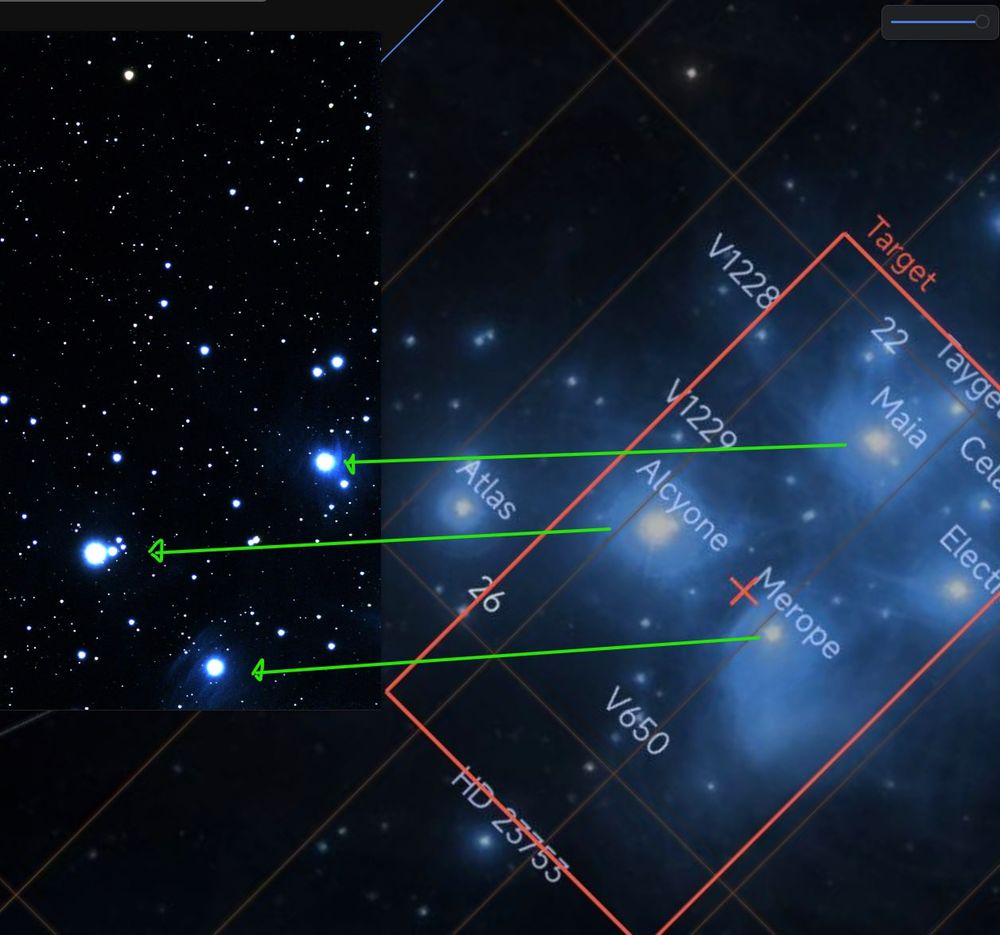

10min integration of the Pleiades & postprocessing by @ninasch.bsky.social

```sh
uv run pastebin.com/raw/2P6sLi0N
```

Why is this cool?

• super compact for sharing

• uv runs a self-contained script (PEP 723) directly from a URL (please inspect the code first!)

• No virtual environments required
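
For anyone who hasn't seen PEP 723 yet: the dependencies live in a comment block at the top of the script, and uv reads that block before running it. A minimal sketch of what such a file can look like (this is not the script behind the pastebin link; the `greeting` function and the metadata values are made up for illustration):

```python
# /// script
# requires-python = ">=3.9"
# dependencies = []  # list PyPI packages here; uv installs them on the fly
# ///
"""Hypothetical self-contained script with PEP 723 inline metadata."""
import sys


def greeting(name: str = "world") -> str:
    # Plain function so the script has something to run.
    return f"Hello, {name}!"


if __name__ == "__main__":
    # Use the first CLI argument as the name, if one was given.
    print(greeting(*sys.argv[1:2]))
```

Saved as e.g. `hello.py` (hypothetical filename), `uv run hello.py` would resolve the metadata block into a throwaway environment and execute the script.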

- subscribe to Bsky Jetstream

- filter by collections: app.bsky.*

- reset cursor by 30s (in case of restarts)

- filter commit msgs

- use collection as subject name

- create stream

- use CID to deduplicate

- publish to local Jetstream instance
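
A rough sketch of the non-network parts of the steps above, under a few assumptions: the endpoint URL is one of the public Bluesky Jetstream hosts, the event layout (`kind`, `time_us`, `commit.collection`, `commit.cid`) follows the Bluesky Jetstream docs, and the local instance is NATS JetStream, where a `Nats-Msg-Id` header drives server-side deduplication. The helper names `subscribe_url` and `to_message` are made up for illustration.

```python
import json
from typing import Optional, Tuple
from urllib.parse import urlencode

# Assumed public endpoint; swap in whichever Jetstream host you use.
JETSTREAM_URL = "wss://jetstream2.us-east.bsky.network/subscribe"
REWIND_US = 30 * 1_000_000  # 30 s, expressed in microseconds like time_us


def subscribe_url(last_time_us: Optional[int] = None) -> str:
    """Build the subscribe URL, rewinding the cursor by 30 s after a restart."""
    params = [("wantedCollections", "app.bsky.*")]
    if last_time_us is not None:
        params.append(("cursor", str(last_time_us - REWIND_US)))
    return f"{JETSTREAM_URL}?{urlencode(params)}"


def to_message(raw: str) -> Optional[Tuple[str, dict, bytes]]:
    """Map one raw event to (subject, headers, payload); skip non-commits."""
    event = json.loads(raw)
    if event.get("kind") != "commit":
        return None
    commit = event["commit"]
    subject = commit["collection"]  # collection doubles as the subject name
    cid = commit.get("cid")  # delete operations carry no CID
    headers = {"Nats-Msg-Id": cid} if cid else {}
    return subject, headers, raw.encode()
```

The returned triple is shaped so it could be handed to a JetStream publish call (for example nats-py's `js.publish(subject, payload, headers=headers)`), with the stream created beforehand on subjects matching `app.bsky.>`. Replaying 30 s of events after a restart is then harmless as long as the stream's duplicate window covers that overlap.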

Is it possible Kafka just loses segments sometimes?

Pic 1 was live at the time (Kafka local storage).

Pic 2 is a replay (Kafka remote storage).

Pic 3 shows that Kafka managed to write the metadata, but the log file is 0 bytes for the missing parts???

🙌

- Apache Kafka 3.9: Tiered Storage is prod-ready

- Soon: Apache Kafka 4.0: ZooKeeper & MM1 removed (ETA Feb 2025)

- Jepsen report on Bufstream: a Kafka-compatible engine using S3 & etcd

- AWS launched MSK Express

#ApacheKafka #DataStreaming

SeaweedFS allows change-data-capture at the filesystem level.

Using streamz, I can hook into that and basically get a git-like commit-log of which notebooks I worked on at any given point in time.

Since it's an append-only store, I also get versioning.

Peaks are 5min apart (1M events for each peak).

Aggregating, since it'll be easier to process downstream.

It'll be fun, they said.
