In our new paper, we propose WebOrganizer, which *constructs domains* based on the topic and format of CommonCrawl web pages 🌐

Key takeaway: domains help us curate better pre-training data! 🧵/N

More accurate detection of cancers (breast, prostate, skin, brain); faster diagnosis of strokes, sepsis, and heart attacks; faster MRIs, including full-body scans in 40 minutes.

Much more to come in the coming years.

www.washingtonpost.com/opinions/202...

But they only work for sequences of discrete symbols: language, proteins...

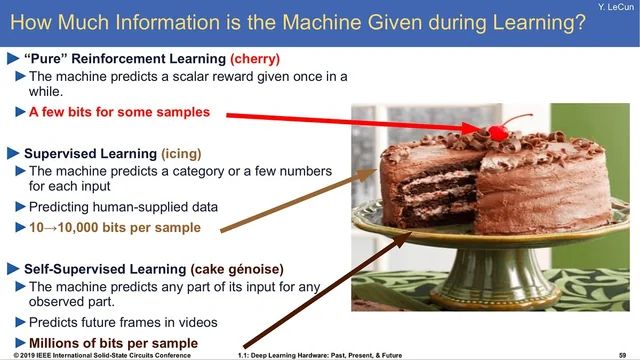

> “If intelligence is a cake, the bulk of the cake is unsupervised learning, the icing on the cake is supervised learning, and the cherry on the cake is reinforcement learning (RL).”