Nathan Lambert

@natolambert.bsky.social

13K followers

270 following

1.6K posts

A LLN - large language Nathan - (RL, RLHF, society, robotics), athlete, yogi, chef

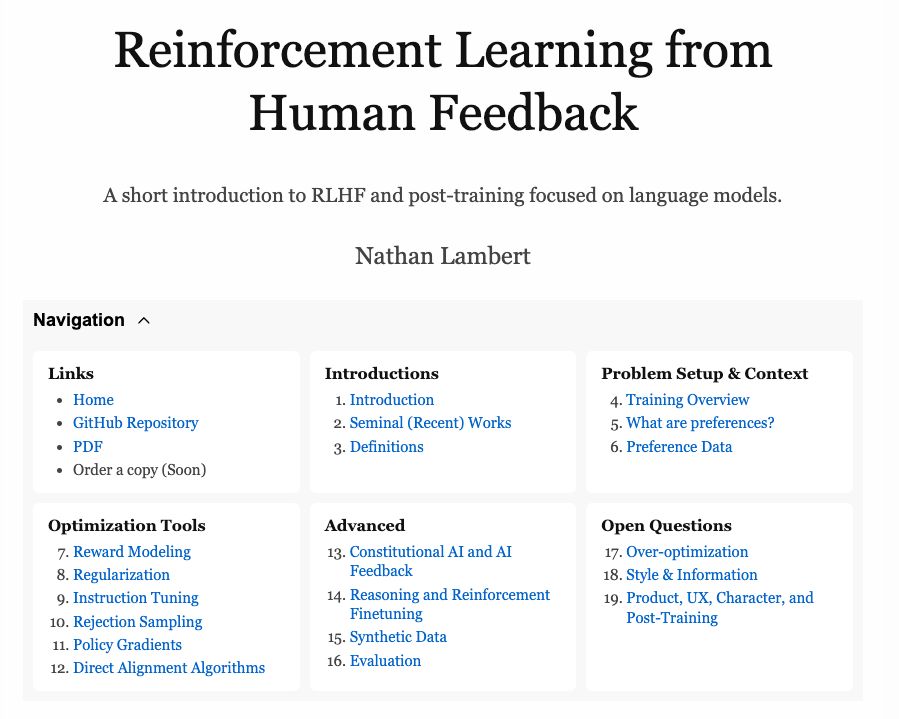

Writes http://interconnects.ai

At Ai2 via HuggingFace, Berkeley, and normal places