pc: @Kevin Frans

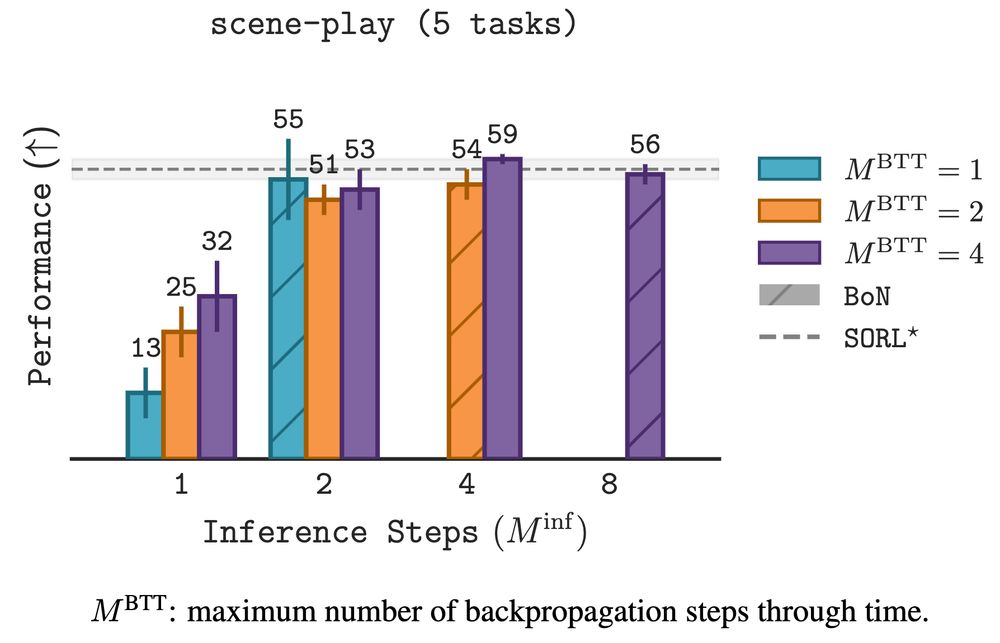

1. efficient training (i.e., limiting backprop through time)

2. expressive model classes (e.g. flow matching)

3. inference-time scaling (sequential and parallel)
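
The list only names flow matching in passing. As a hypothetical illustration, and not the author's method, here is a minimal pure-Python sketch of one common flow-matching variant. It uses a straight-line path from noise to data and a squared-error loss on that path's velocity. It also includes an Euler sampler whose step count is one way to spend more sequential compute at inference time. All function names are illustrative assumptions. The closed-form velocity field in the demo only holds for a single-point target distribution.

```python
from typing import Callable, List

Vector = List[float]
VelocityFn = Callable[[Vector, float], Vector]


def interpolate(x0: Vector, x1: Vector, t: float) -> Vector:
    """Point at time t on the straight line from noise x0 (t=0) to data x1 (t=1)."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]


def target_velocity(x0: Vector, x1: Vector) -> Vector:
    """Time derivative of the straight-line path; it does not depend on t."""
    return [b - a for a, b in zip(x0, x1)]


def flow_matching_loss(v: VelocityFn, x0: Vector, x1: Vector, t: float) -> float:
    """Mean squared error between the model velocity at x_t and the path's velocity."""
    xt = interpolate(x0, x1, t)
    pred = v(xt, t)
    target = target_velocity(x0, x1)
    return sum((p - q) ** 2 for p, q in zip(pred, target)) / len(target)


def euler_sample(v: VelocityFn, x0: Vector, num_steps: int) -> Vector:
    """Integrate dx/dt = v(x, t) from t=0 to t=1 using num_steps Euler steps.

    Each step makes one call to v, so num_steps sets the sequential inference cost.
    """
    x = list(x0)
    dt = 1.0 / num_steps
    for i in range(num_steps):
        t = i * dt  # always < 1, so the toy field below never divides by zero
        x = [xi + dt * vi for xi, vi in zip(x, v(x, t))]
    return x


if __name__ == "__main__":
    # Toy setting (assumption): the data distribution is the single point `data`,
    # so the ideal velocity field is v(x, t) = (data - x) / (1 - t).
    data = [2.0, -1.0]

    def ideal_velocity(x: Vector, t: float) -> Vector:
        return [(d - xi) / (1.0 - t) for xi, d in zip(x, data)]

    noise = [0.5, 0.5]
    print(flow_matching_loss(ideal_velocity, noise, data, t=0.3))  # close to 0.0
    print(euler_sample(ideal_velocity, noise, num_steps=8))  # close to [2.0, -1.0]
```

In a real model, `v` would be a trained network fit by minimizing `flow_matching_loss` over random noise, data, and `t`. A learned field is generally not straight, so more Euler steps can improve sample quality at the cost of more sequential calls. One possible reading of parallel scaling is drawing several samples from independent noise vectors. This sketch does not cover how such samples would be scored or combined.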