You can reproduce this SOTA training run with fewer than 80 lines of code and about 2 hours of training. It runs NanoBEIR during training, reports the results to W&B, and creates an informative model card!

Link to the gist: gist.github.com/NohTow/3030f...

While it is bigger, it is still a very lightweight model and benefits from the efficiency of ModernBERT!

Also, it has only been trained on MS MARCO (for late interaction) and should thus generalize pretty well!

To overcome the limitations of the awesome ModernBERT-based dense models, today @lightonai.bsky.social is releasing GTE-ModernColBERT, the very first state-of-the-art late-interaction (multi-vector) model trained using PyLate 🚀
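
For readers new to late interaction, here is a minimal sketch of the MaxSim scoring rule that ColBERT-style multi-vector models are generally described with: each query token keeps its best-matching document token, and those maxima are summed. This is not PyLate code; the function name `maxsim_score` and the toy 2-d vectors are assumptions made up for illustration.

```python
def maxsim_score(query_vecs, doc_vecs):
    """Sum, over query tokens, of the max dot product with any document token."""
    def dot(u, v):
        return sum(a * b for a, b in zip(u, v))
    return sum(max(dot(q, d) for d in doc_vecs) for q in query_vecs)

# Toy, hand-made 2-d token embeddings (not produced by any real model).
query = [[1.0, 0.0], [0.0, 1.0]]
doc_a = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # matches both query tokens
doc_b = [[1.0, 0.0], [-1.0, 0.0]]             # matches only the first one

scores = {
    "doc_a": maxsim_score(query, doc_a),
    "doc_b": maxsim_score(query, doc_b),
}
# Rank documents from highest to lowest late-interaction score.
ranking = sorted(scores, key=scores.get, reverse=True)
print(scores, ranking)
```

A dense model would compress each text into a single vector before comparing; here every token vector is kept, which is what "multi-vector" refers to.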

Notably, performance at dimension 256 is only slightly worse than that of the base version at its full dimension of 768
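
A rough sketch of how a reduced-dimension embedding is commonly obtained, assuming the 256-dimension variant comes from Matryoshka-style truncation (the post does not say how it is produced): keep the leading coordinates and re-normalize. The helper `truncate_and_normalize` and the 4-d toy vector are hypothetical; a real embedding would go from 768 to 256 dimensions.

```python
import math

def truncate_and_normalize(vec, dim):
    """Keep the first `dim` coordinates, then rescale to unit L2 norm."""
    head = vec[:dim]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0.0:
        return head  # nothing to rescale for an all-zero prefix
    return [x / norm for x in head]

# Toy 4-d "embedding" standing in for a 768-d one (values are made up).
full = [3.0, 4.0, 12.0, 0.5]
small = truncate_and_normalize(full, 2)
print(small)
```

Re-normalizing matters when similarity is computed with a dot product, since the truncated prefix no longer has unit length on its own.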

ModernBERT-embed-large is trained using the same two-stage recipe as its smaller sibling and, as expected, improves performance, gaining +1.22 on the MTEB average

But the large variant of ModernBERT is also awesome...

So today, @lightonai.bsky.social is releasing ModernBERT-embed-large, the larger and more capable iteration of ModernBERT-embed!

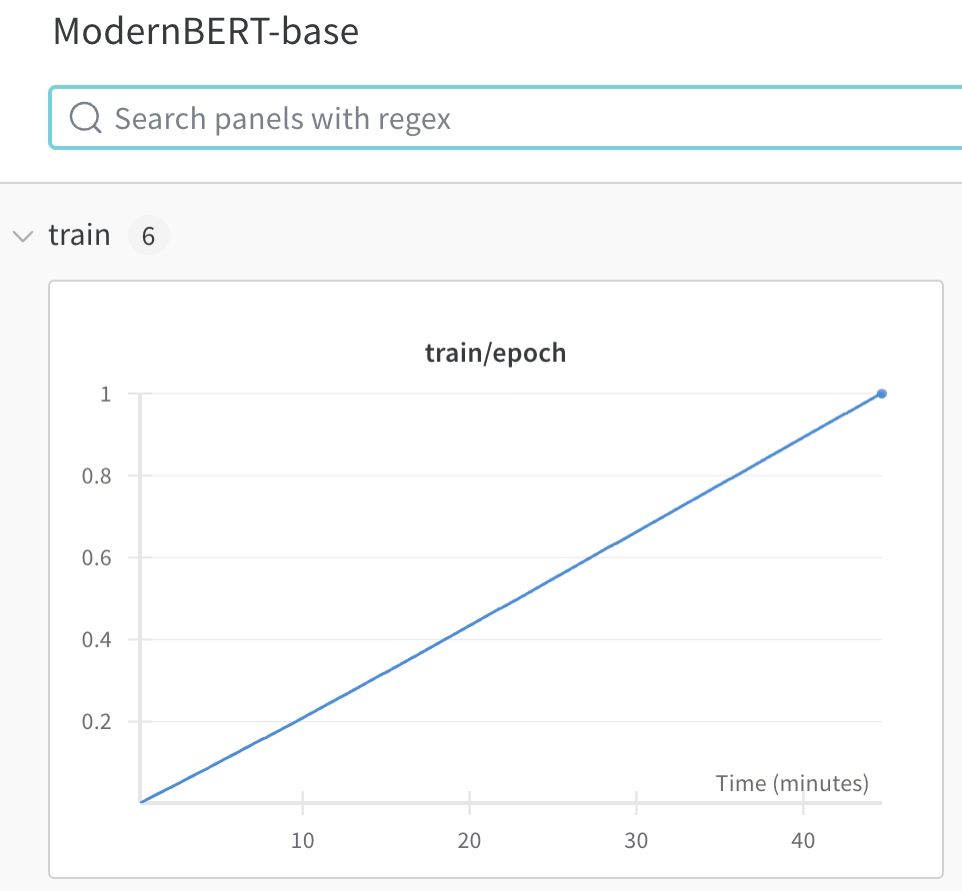

The ModernBERT-base checkpoint achieves a BEIR average of 51.3

This means that we beat e5 with less than 45 minutes of training on MS MARCO only (using only half of the memory of our 8x100)

We have a lot of experiments on ColBERT models in the paper, with tons of different base models

PyLate handled it all, even models using half-baked remote code

This was a really cool stress test and I am really happy it went so smoothly

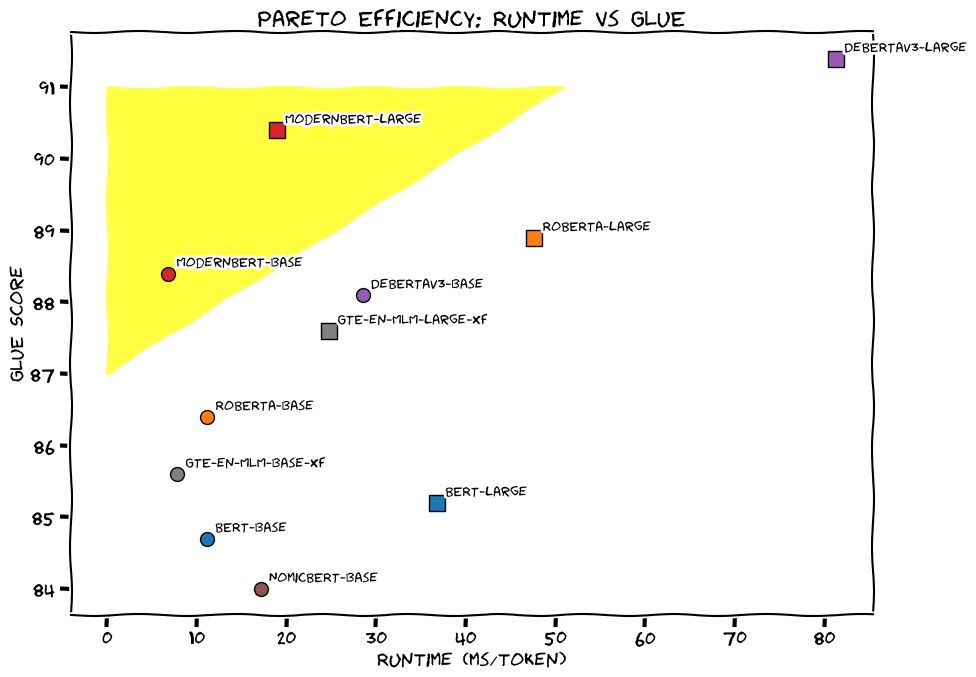

Coupled with unpadding through the full processing and Flash Attention, this allows ModernBERT to be two to three times faster than most encoders on long-context inputs on an RTX 4090
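
To give an idea of what unpadding means, here is a small sketch under simplifying assumptions: instead of padding every sequence in a batch to the longest one, the tokens are concatenated into one flat sequence and the boundaries are tracked with cumulative lengths, so no compute is spent on pad tokens. The helpers `unpad` and `padded_size` and the token ids are made up for illustration and are not ModernBERT's actual implementation.

```python
def unpad(batch):
    """Concatenate variable-length sequences and record their boundaries."""
    flat, cu_seqlens = [], [0]
    for seq in batch:
        flat.extend(seq)
        cu_seqlens.append(cu_seqlens[-1] + len(seq))
    return flat, cu_seqlens

def padded_size(batch):
    """Number of token slots a padded batch would occupy."""
    return len(batch) * max(len(seq) for seq in batch)

# Three toy sequences of token ids with lengths 4, 3 and 7.
batch = [[101, 7, 8, 102], [101, 9, 102], [101, 5, 6, 7, 8, 9, 102]]
flat, cu_seqlens = unpad(batch)

print(len(flat), "tokens unpadded vs", padded_size(batch), "slots padded")
print(cu_seqlens)  # sequence i spans flat[cu_seqlens[i]:cu_seqlens[i + 1]]
```

The saving grows with the spread of sequence lengths in a batch, which is why it pairs well with long-context inputs.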

This enables fast processing of long sequences while maintaining accuracy

ModernBERT yields state-of-the-art results on various tasks, including IR (short- and long-context text as well as code) and NLU

There might be a few LLM releases per week, but there is only one drop-in replacement that brings Pareto improvements over the 6-year-old BERT while going at lightspeed
