Nathan Godey

@nthngdy.bsky.social

30 followers

18 following

20 posts

Looking to start a post-doc in early 2025!

Working on the representations of LMs and pretraining methods

@InriaParis

https://nathangodey.github.io

Posts

Media

Videos

Starter Packs

Reposted by Nathan Godey

Nathan Godey

@nthngdy.bsky.social

· Mar 6

Nathan Godey

@nthngdy.bsky.social

· Mar 6

Nathan Godey

@nthngdy.bsky.social

· Mar 6

Nathan Godey

@nthngdy.bsky.social

· Mar 6

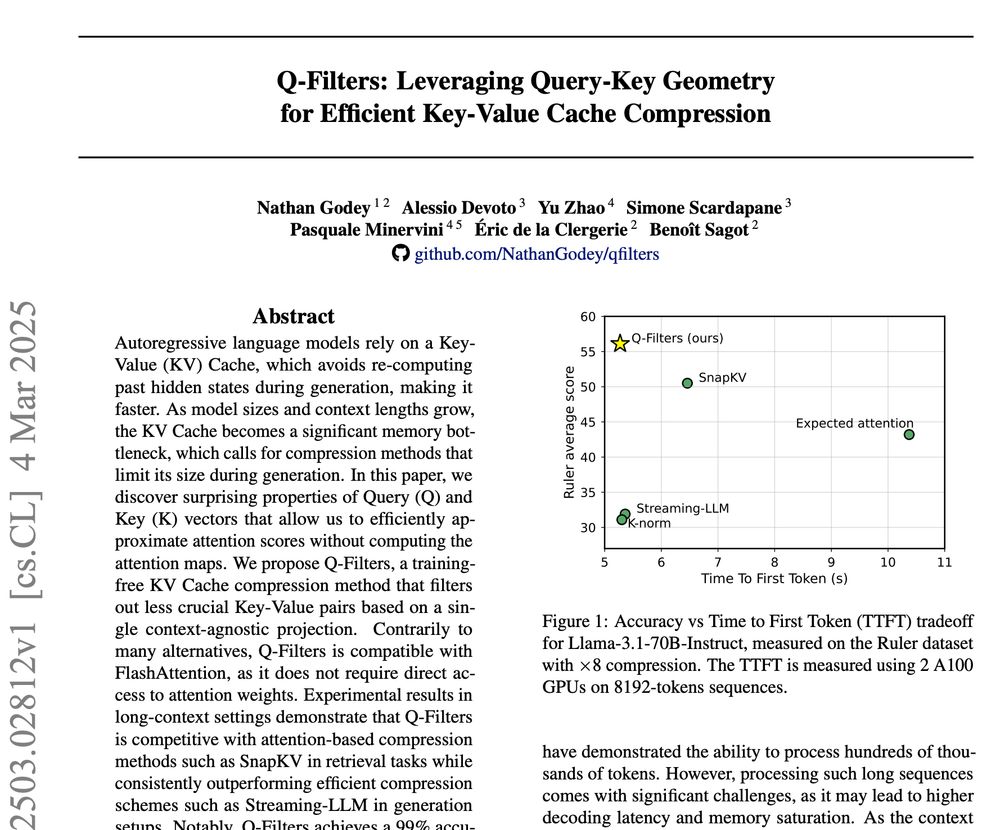

Q-Filters: Leveraging QK Geometry for Efficient KV Cache Compression

Autoregressive language models rely on a Key-Value (KV) Cache, which avoids re-computing past hidden states during generation, making it faster. As model sizes and context lengths grow, the KV Cache b...

arxiv.org