Faruk Gulban

@ofgulban.bsky.social

390 followers · 310 following · 37 posts

High Resolution Magnetic Resonance Imaging

GitHub → http://github.com/ofgulban

YouTube → http://youtube.com/@ofgulban

Blog → http://thingsonthings.org

Art → http://behance.net/ofgulban

Posts

Reposted by Faruk Gulban

Faruk Gulban @ofgulban.bsky.social · Jul 25

Reposted by Faruk Gulban

Faruk Gulban @ofgulban.bsky.social · Jul 17

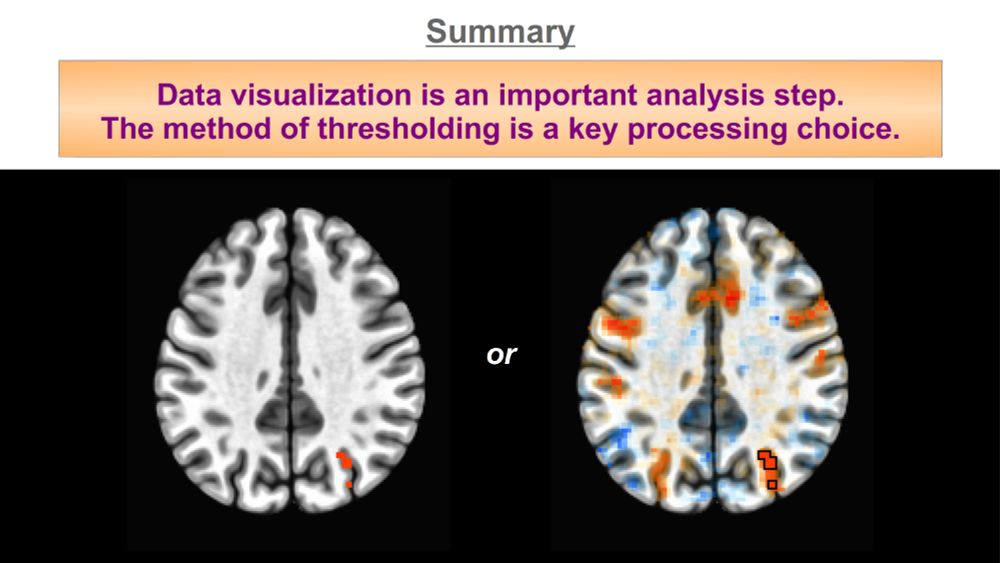

Computing geometric layers and columns on continuously improving human (f)MRI data

Authors: Ömer Faruk Gülban, Renzo Huber | Date: April 2024

Chapter published here: Functional magnetic resonance imaging (fMRI) today is a common method to study the human brain. The popularity of …

layerfmri.com

Reposted by Faruk Gulban

Faruk Gulban @ofgulban.bsky.social · Jun 24

Reposted by Faruk Gulban

Faruk Gulban @ofgulban.bsky.social · Jun 11

Faruk Gulban @ofgulban.bsky.social · May 28

Faruk Gulban @ofgulban.bsky.social · May 23

Reposted by Faruk Gulban

Lars Muckli @larsmuckli.bsky.social · Apr 5

Professor, Senior Lecturer/Lecturer and Lecturer posts - Glasgow City (GB) job with University of Glasgow | 12838283

University of Glasgow College of Medical, Veterinary and Life Sciences School of Psychology & Neuroscience Professor in Cognitive Neuroscience/Psy...

www.nature.com
