Patrick Liu

@patrickpliu.bsky.social

25 followers

12 following

11 posts

Columbia Political Science | PhD Student

Patrick Liu

@patrickpliu.bsky.social

· Apr 2

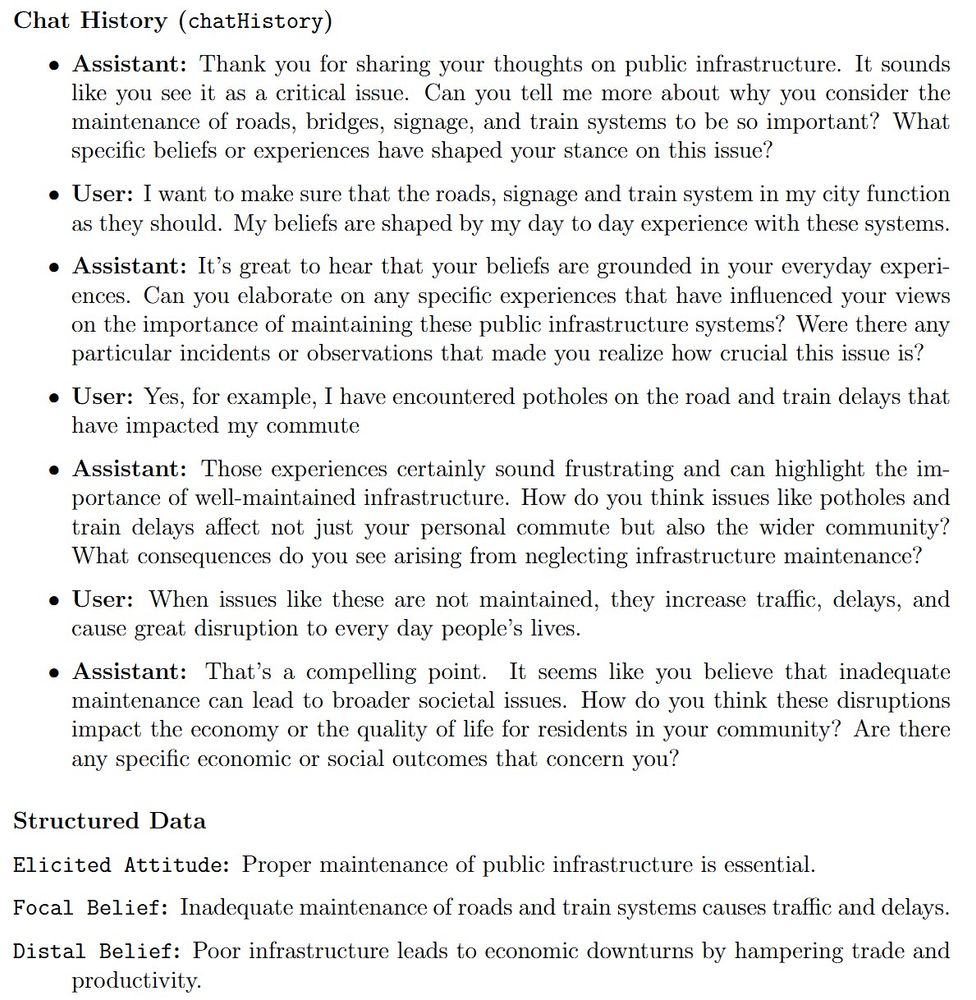

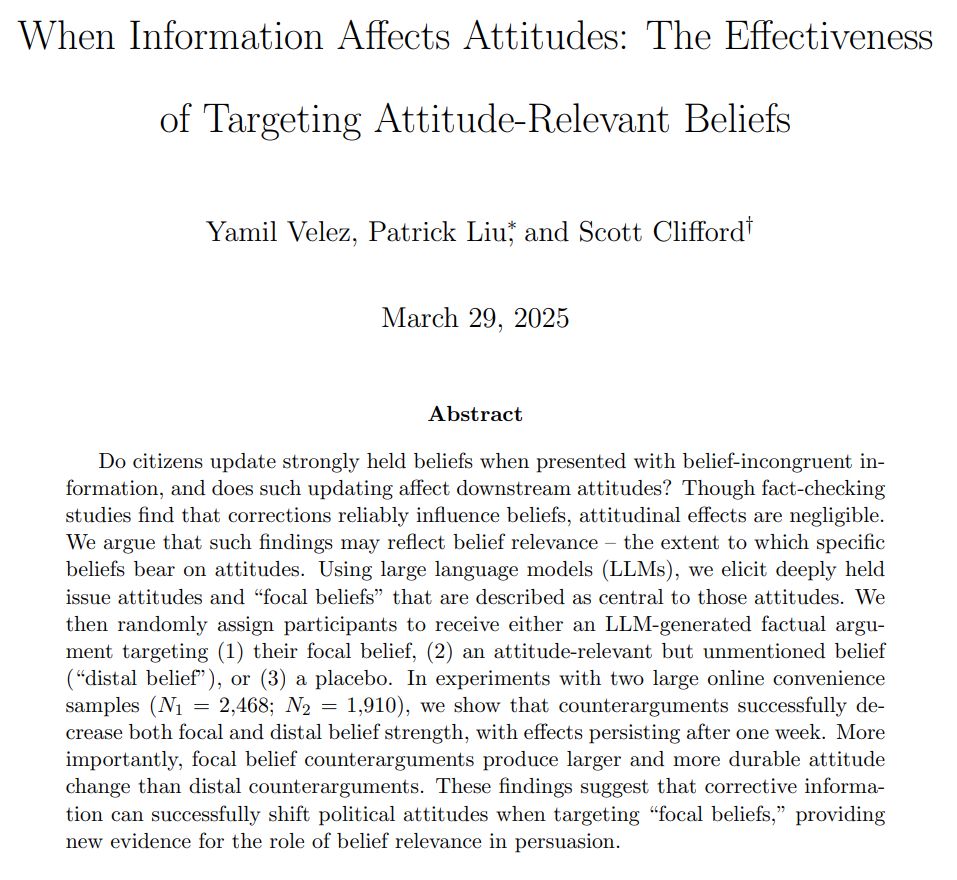

When Information Affects Attitudes: The Effectiveness of Targeting Attitude-Relevant Beliefs

Do citizens update strongly held beliefs when presented with belief-incongruent information, and does such updating affect downstream attitudes? Though fact-checking studies find that corrections reli...

go.shr.lc