Paul Bürkner

@paulbuerkner.com

6K followers

1.8K following

74 posts

Full Professor of Computational Statistics at TU Dortmund University

Scientist | Statistician | Bayesian | Author of brms | Member of the Stan and BayesFlow development teams

Website: https://paulbuerkner.com

Opinions are my own

Paul Bürkner

@paulbuerkner.com

· Jun 1

Paul Bürkner

@paulbuerkner.com

· May 23

Reposted by Paul Bürkner

Paul Bürkner

@paulbuerkner.com

· Mar 21

Reposted by Paul Bürkner

Hadley Wickham

@hadley.nz

· Feb 27

Reposted by Paul Bürkner

Paul Bürkner

@paulbuerkner.com

· Jan 29

Reposted by Paul Bürkner

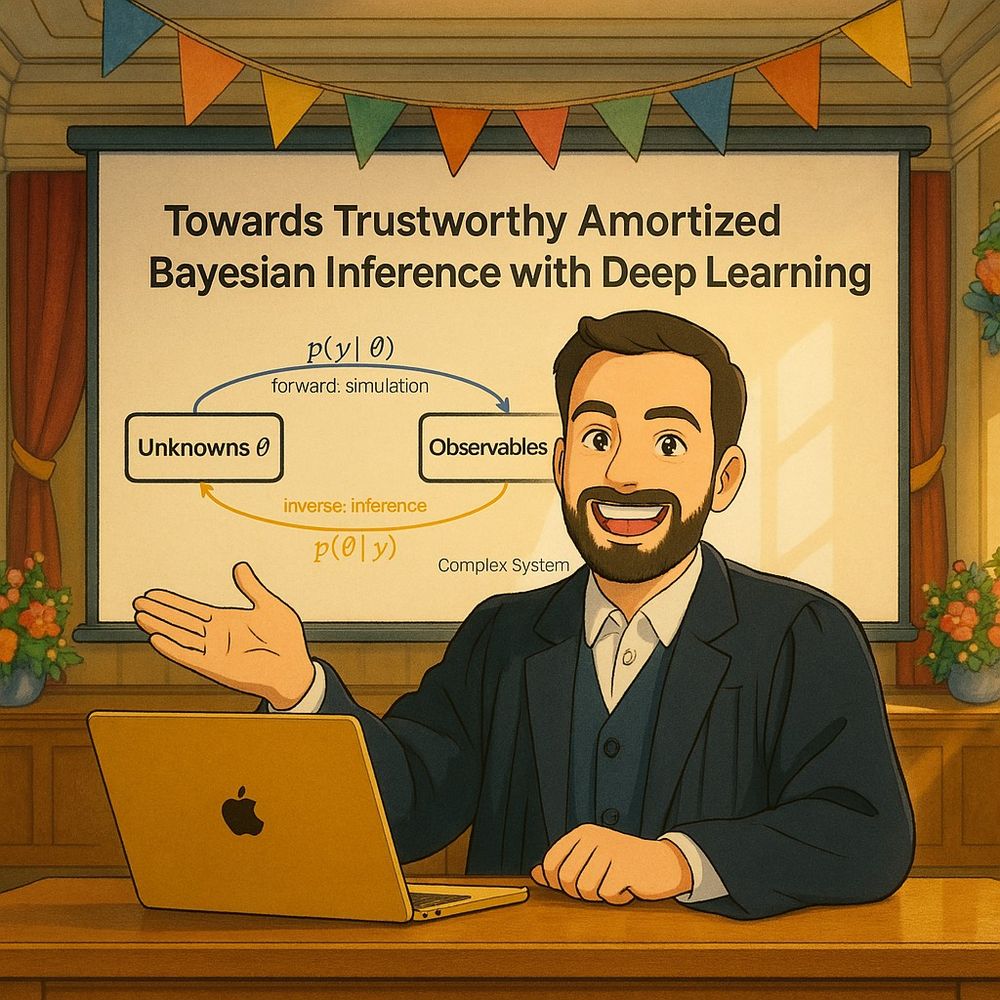

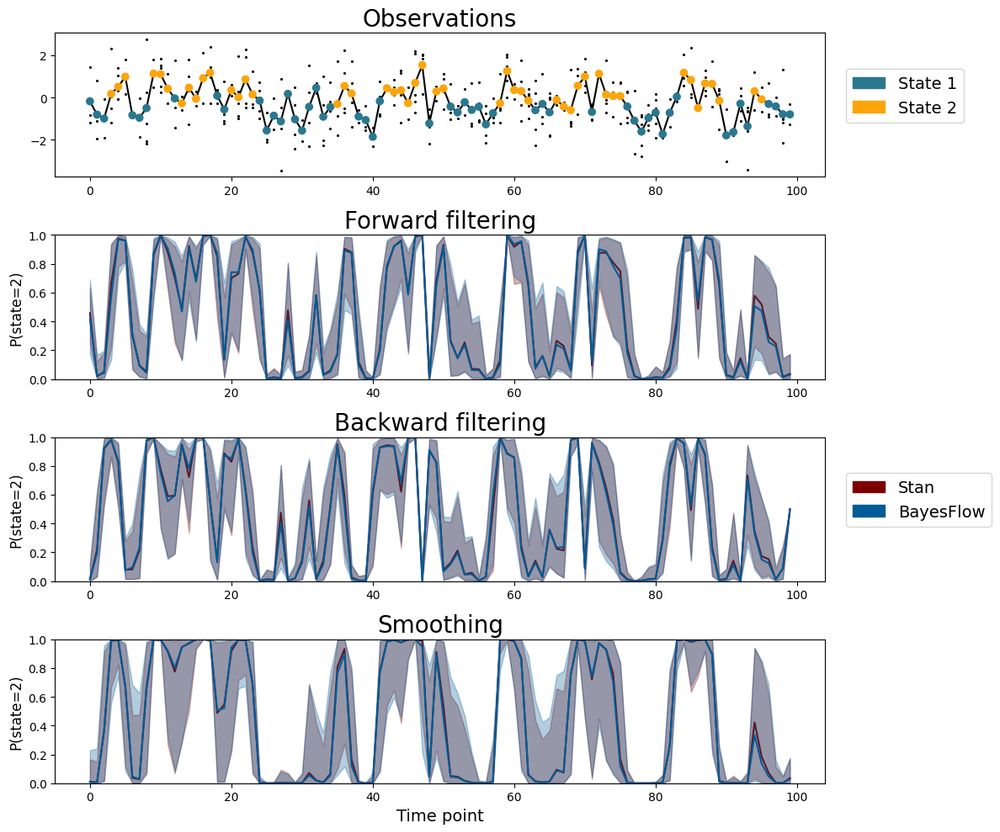

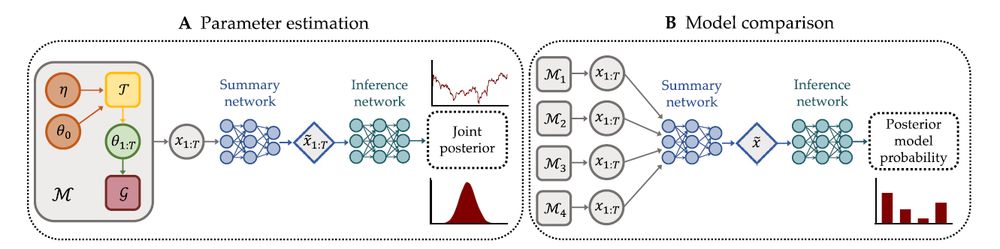

BayesFlow

@bayesflow.org

· Jan 28

Reposted by Paul Bürkner

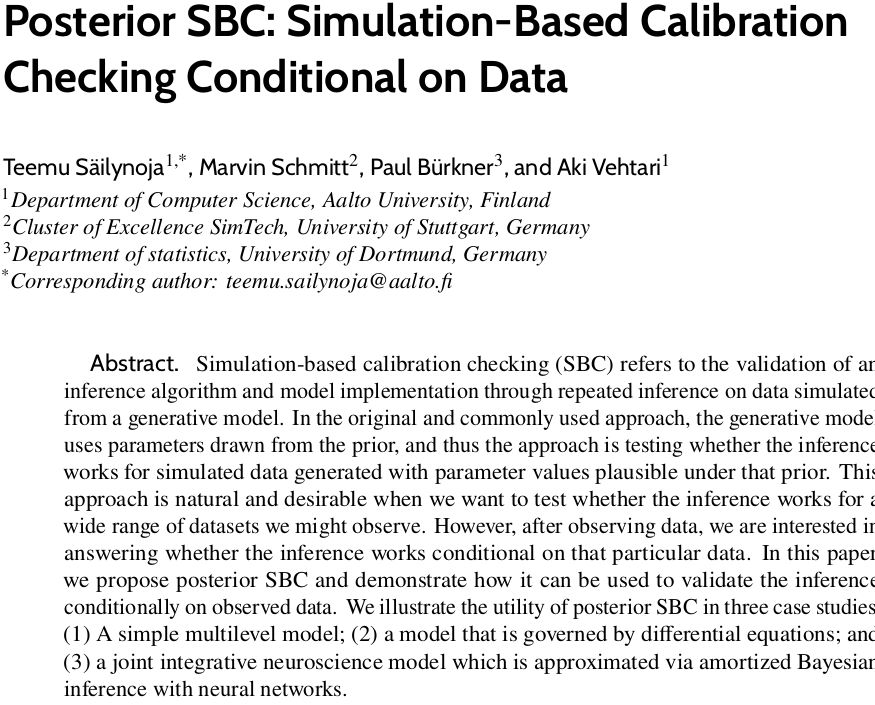

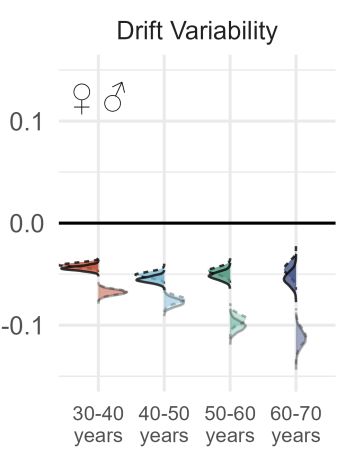

Marvin Schmitt

@marvin-schmitt.com

· Jan 14

Reposted by Paul Bürkner

Riccardo Fusaroli

@fusaroli.bsky.social

· Jan 10

Paul Bürkner

@paulbuerkner.com

· Jan 9

Paul Bürkner

@paulbuerkner.com

· Jan 3

Paul Bürkner

@paulbuerkner.com

· Jan 2

Paul Bürkner

@paulbuerkner.com

· Jan 2

Paul Bürkner

@paulbuerkner.com

· Dec 23

Reposted by Paul Bürkner

Kevin M. Kruse

@kevinmkruse.bsky.social

· Dec 12

Reposted by Paul Bürkner
