Subbarao Kambhampati (కంభంపాటి సుబ్బారావు)

@rao2z.bsky.social

890 followers · 16 following · 77 posts

AI researcher & teacher at SCAI, ASU. Former President of AAAI & Chair of AAAS Sec T. Here to tweach #AI. YouTube Ch: http://bit.ly/38twrAV Twitter: rao2z

Pinned

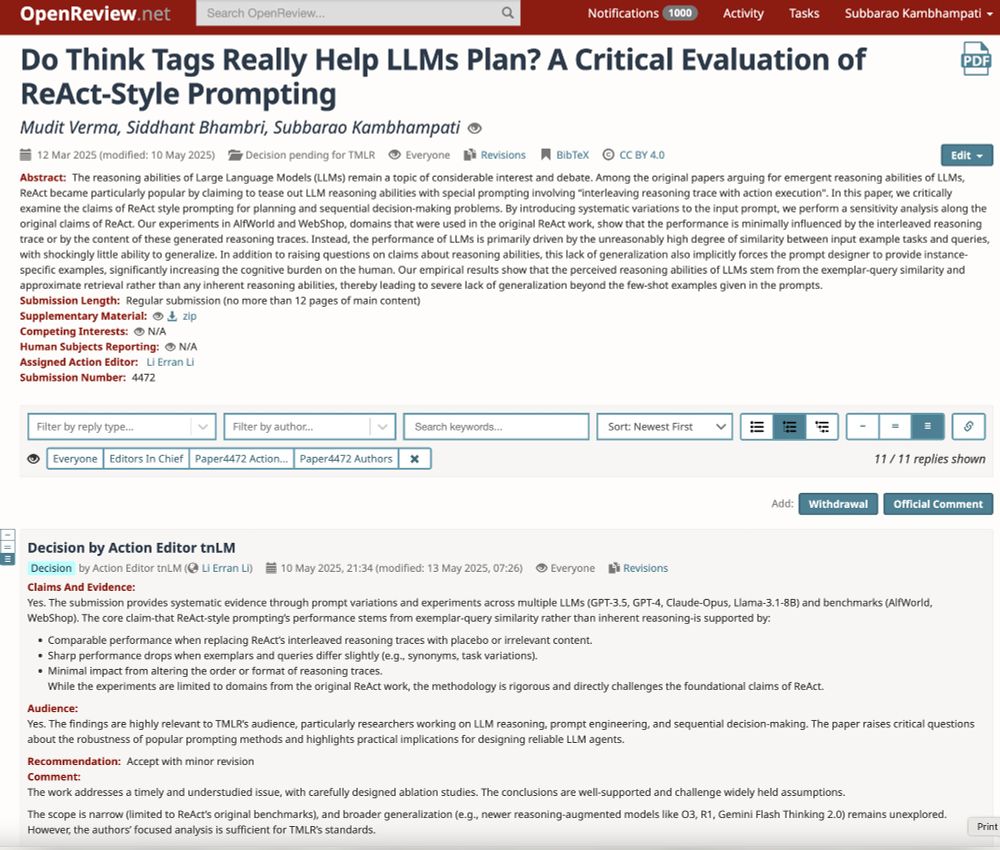

Reposted by Subbarao Kambhampati (కంభంపాటి సుబ్బారావు)

Dr Abeba Birhane

@abeba.bsky.social · Jun 1

Beyond Semantics: The Unreasonable Effectiveness of Reasonless Intermediate Tokens

Recent impressive results from large reasoning models have been interpreted as a triumph of Chain of Thought (CoT), and especially of the process of training on CoTs sampled from base LLMs in order to...

arxiv.org

Reposted by Subbarao Kambhampati (కంభంపాటి సుబ్బారావు)