Reece Keller

@reecedkeller.bsky.social

84 followers

6 following

10 posts

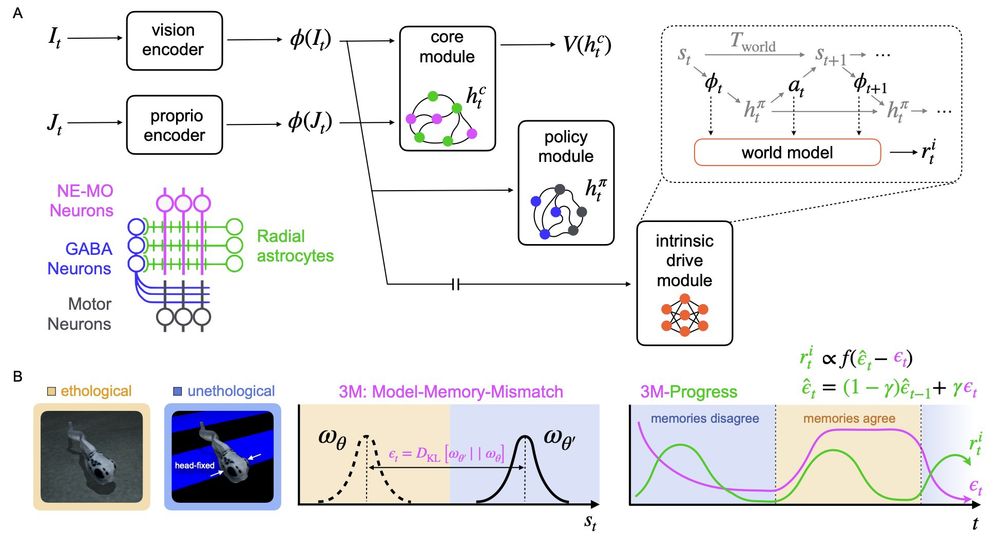

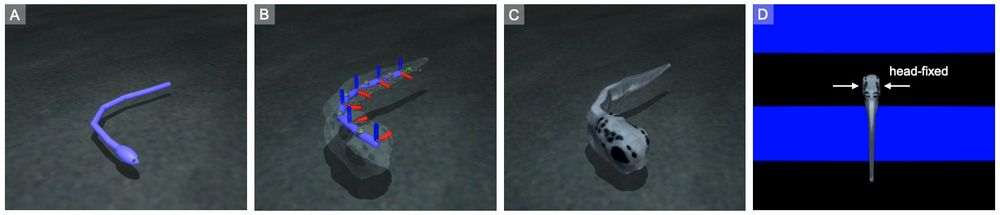

CS+Neuro @cmu.edu PhD Student with Xaq Pitkow and @anayebi.bsky.social working on autonomous embodied AI.
