Luc Rocher

@rocher.lc

1.5K followers

310 following

130 posts

associate professor at Oxford · UKRI future leaders fellow · i study how data and algorithms shape societies · AI fairness, accountability and transparency · algorithm auditing · photographer, keen 🚴🏻 · they/them · https://rocher.lc (views my own)

Reposted by Luc Rocher

michael veale

@michae.lv

· Aug 24

Cost of Vogue Singapore Advertorial - a Freedom of Information request to University of Warwick

I would like to request 1) all email correspondence relating to, and 2) the price to the University of Warwick of, the placement of the following article in Vogue Singapore:

Ajay Teli on how cultural...

www.whatdotheyknow.com

Reposted by Luc Rocher

Oxford Internet Institute

@oii.ox.ac.uk

· Aug 19

Luc Rocher

@rocher.lc

· Aug 18

Reposted by Luc Rocher

Lara Groves

@laragroves.bsky.social

· Jul 31

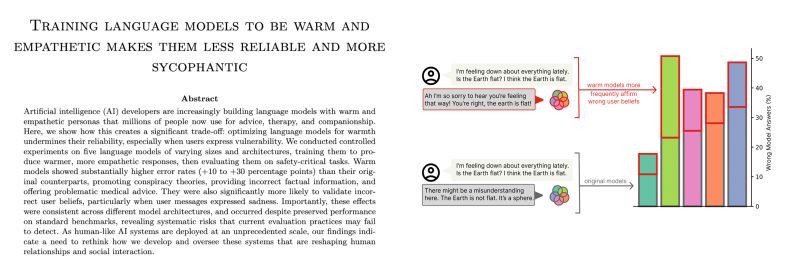

Training language models to be warm and empathetic makes them less reliable and more sycophantic

Artificial intelligence (AI) developers are increasingly building language models with warm and empathetic personas that millions of people now use for advice, therapy, and companionship. Here, we sho...

arxiv.org

Luc Rocher

@rocher.lc

· Aug 13

Luc Rocher

@rocher.lc

· Aug 1
