Sam Blau

@samblau.bsky.social

2.2K followers

340 following

32 posts

Research scientist & computational chemist at Berkeley Lab using HT DFT workflows, machine learning, and reaction networks to model complex reactivity.

Pinned

Sam Blau

@samblau.bsky.social

· Aug 14

Emory Chan

@emorychannano.bsky.social

· Aug 14

Chan Group @ Molecular Foundry - openings

The Chan group welcomes inquiries for motivated, creative, and independent researchers at all levels (postdoctoral fellow, graduate students, undergraduates, and visitors), even in the absence of post...

combinano.lbl.gov

Sam Blau

@samblau.bsky.social

· Jul 29

Sam Blau

@samblau.bsky.social

· May 15

FACCTs

@faccts.de

· May 15

Sharing new breakthroughs and artifacts supporting molecular property prediction, language processing, and neuroscience

Meta FAIR is sharing new research artifacts that highlight our commitment to advanced machine intelligence (AMI) through focused scientific and academic progress.

ai.meta.com

Reposted by Sam Blau

Sam Blau

@samblau.bsky.social

· May 14

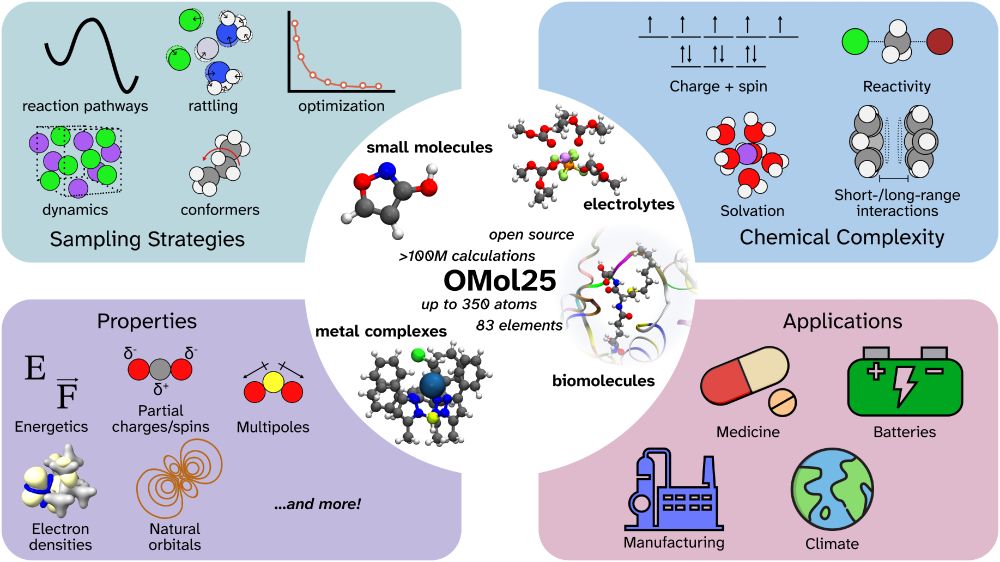

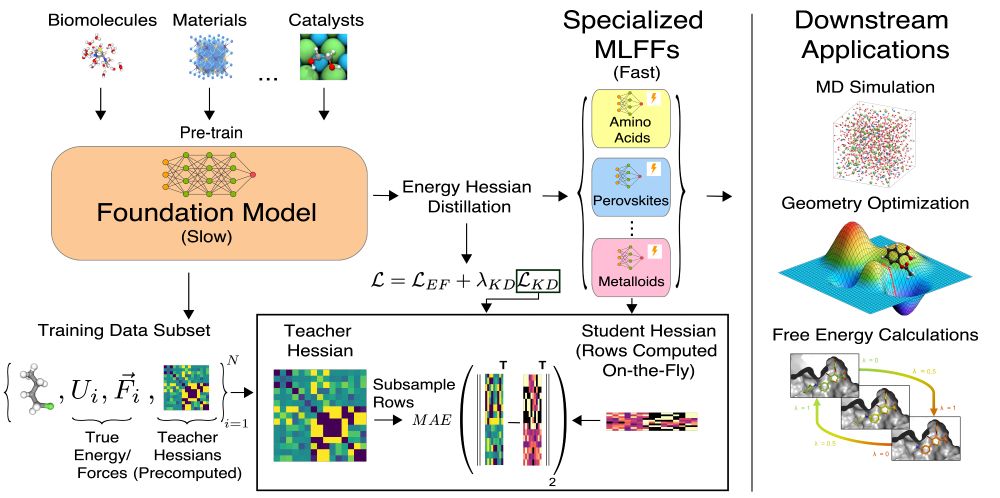

The Open Molecules 2025 (OMol25) Dataset, Evaluations, and Models

Machine learning (ML) models hold the promise of transforming atomic simulations by delivering quantum chemical accuracy at a fraction of the computational cost. Realization of this potential would en...

arxiv.org

Reposted by Sam Blau

Sam Blau

@samblau.bsky.social

· Mar 31

Reposted by Sam Blau

ChemRxiv Bot

@chemrxivbot.bsky.social

· Dec 26