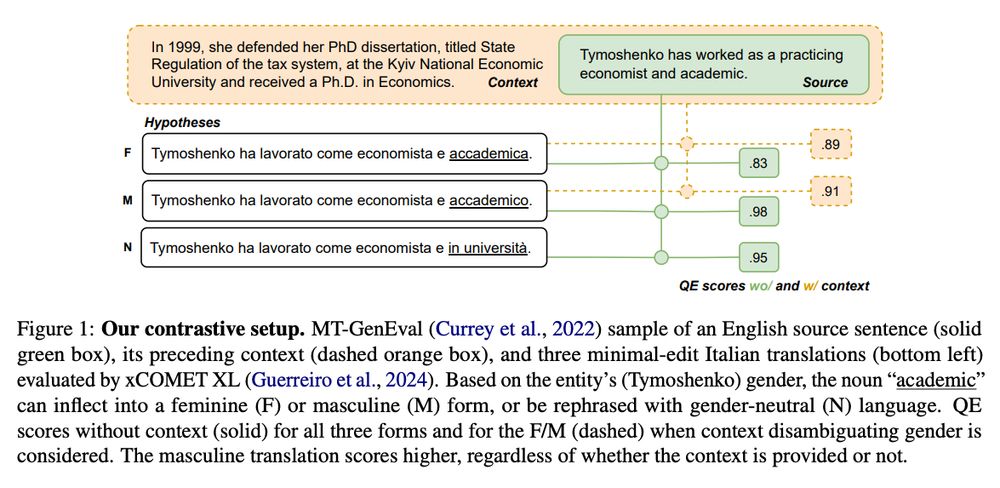

🚨 Our #ACL2025 paper shows they consistently, unduly favor masculine-inflected translations, or gendered forms, over neutral ones.

arxiv.org/pdf/2410.10995

Manos Zaranis, @tozefarinhas.bsky.social and other sardines!! 🚀

Meet MF² (Movie Facts & Fibs): a new benchmark for long-movie understanding

This benchmark focuses on narrative understanding (key events, emotional arcs, causal chains) in long movies.

Paper: arxiv.org/abs/2506.06275

LxMLS is a great opportunity to learn from top speakers and to interact with other students. You can apply for a scholarship.

Apply here:

lxmls.it.pt/2025/

TL;DR: many modern VLMs are unsafe across various types of queries and languages.

arxiv.org/abs/2501.10057

huggingface.co/datasets/fel...

MSTS is exciting because it tests for safety risks *created by multimodality*. Each prompt consists of a text + image that *only in combination* reveal their full unsafe meaning.

🧵

∞-Video: A Training-Free Approach to Long Video Understanding via Continuous-Time Memory Consolidation

Paper: arxiv.org/abs/2501.19098
