Christina Sartzetaki

@sargechris.bsky.social

75 followers · 100 following · 13 posts

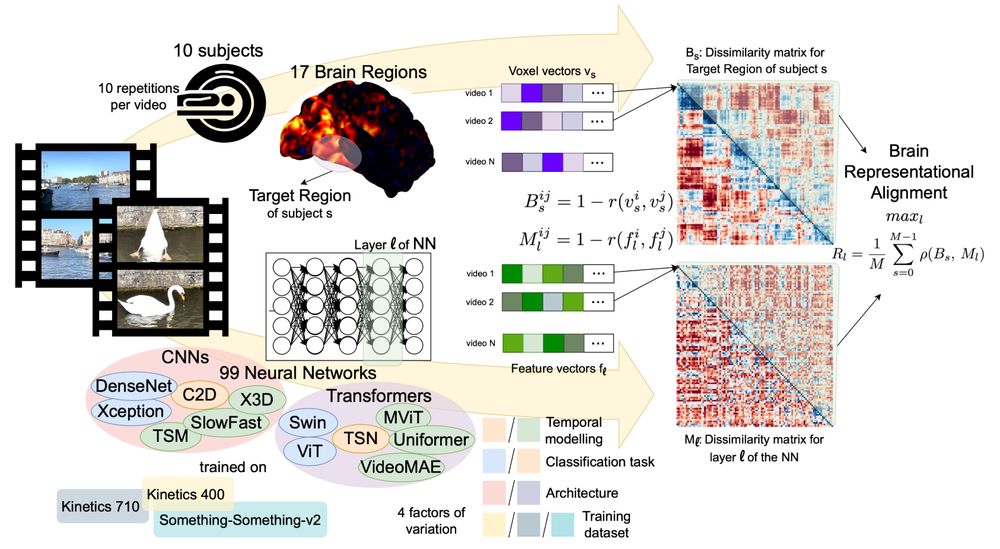

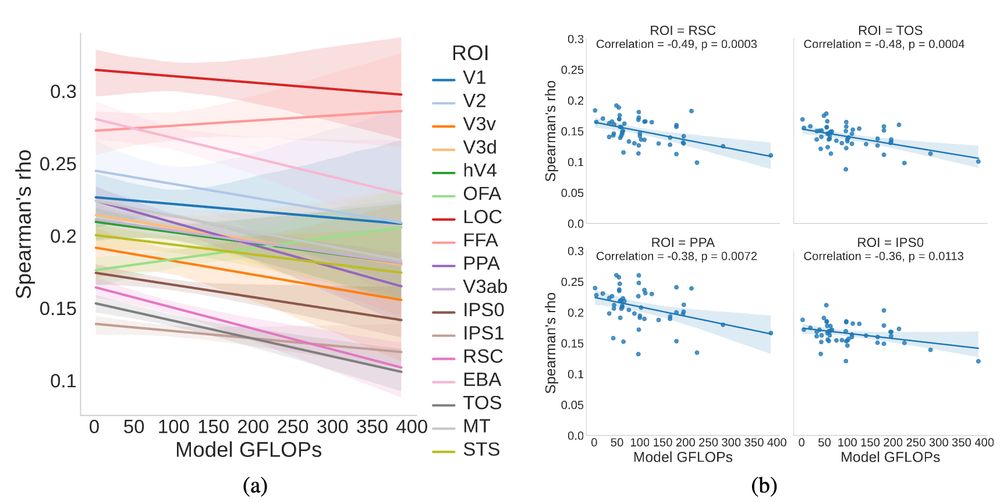

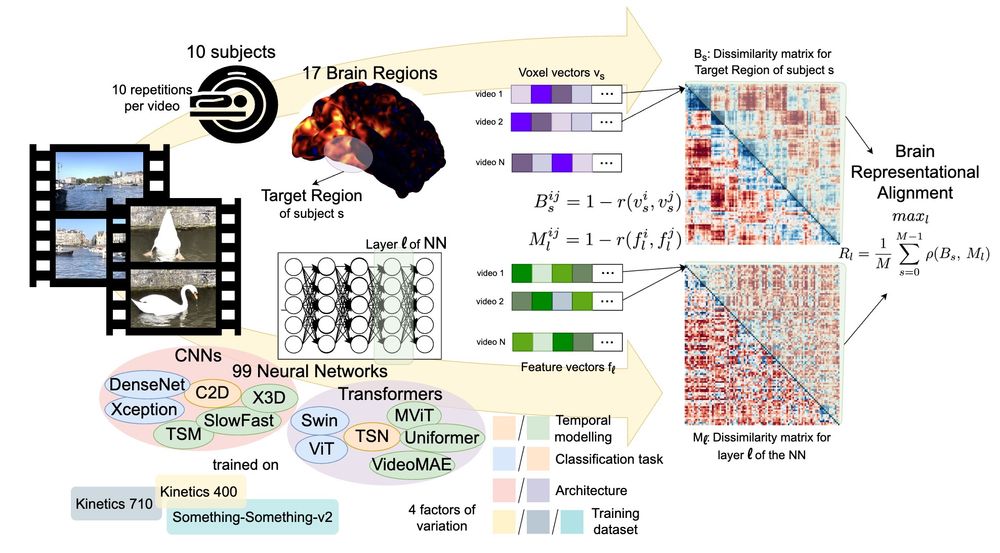

PhD candidate @ UvA 🇳🇱, ELLIS 🇪🇺 | {video, neuro, cognitive}-AI

Neural networks 🤖 and brains 🧠 watching videos

🔗 https://sites.google.com/view/csartzetaki/

Reposted by Christina Sartzetaki

Cees Snoek

@cgmsnoek.bsky.social

Feb 3