Sarthak Chandra

@sarthakc.bsky.social

120 followers

49 following

25 posts

Interested in neuroscience, development and dynamical systems | Faculty member @ ICTS | Previously: @MIT, @UMD, @IITK

Reposted by Sarthak Chandra

Blake Richards

@tyrellturing.bsky.social

· Mar 20

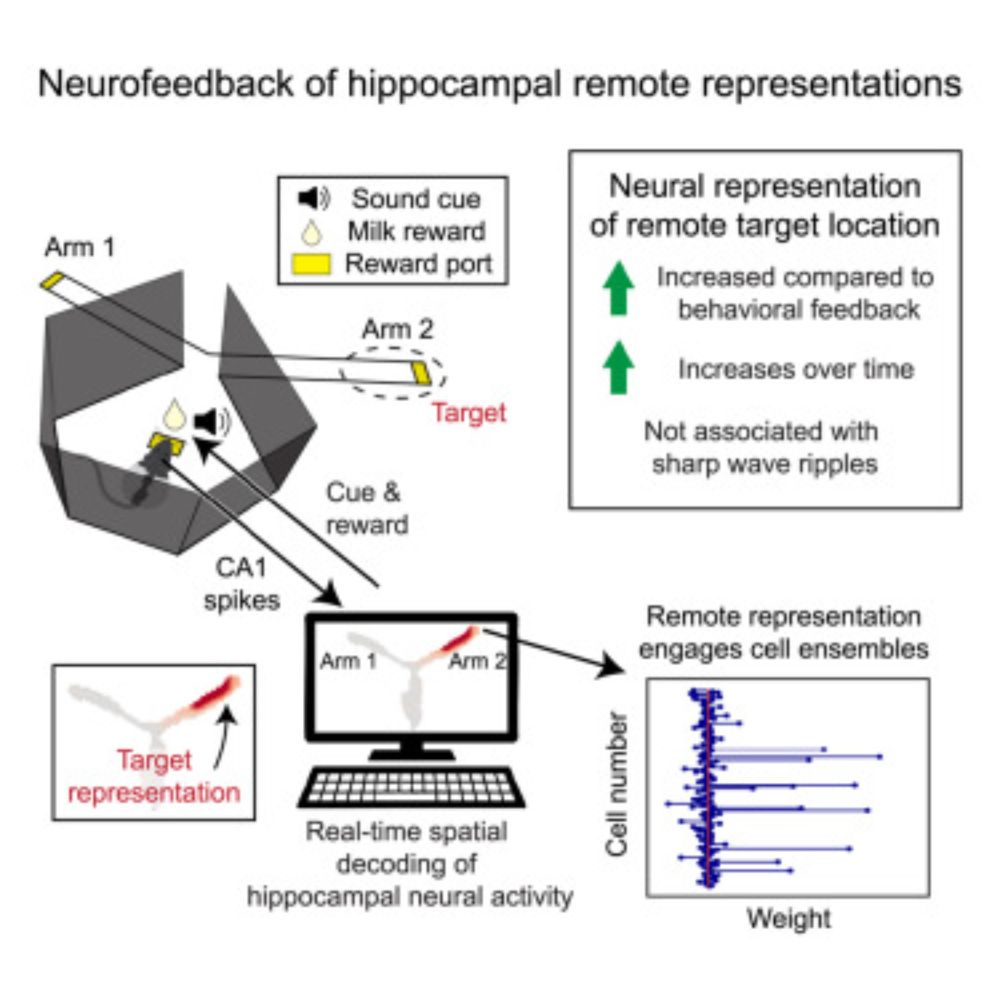

Closed-loop modulation of remote hippocampal representations with neurofeedback

Animal models of memory retrieval trigger retrieval with cues and measure retrieval using behavior. Coulter et al. developed a neurofeedback paradigm that rewards hippocampal activity patterns associa...

www.cell.com

Sarthak Chandra

@sarthakc.bsky.social

· Feb 19

Sarthak Chandra

@sarthakc.bsky.social

· Jan 18

Sarthak Chandra

@sarthakc.bsky.social

· Jan 17

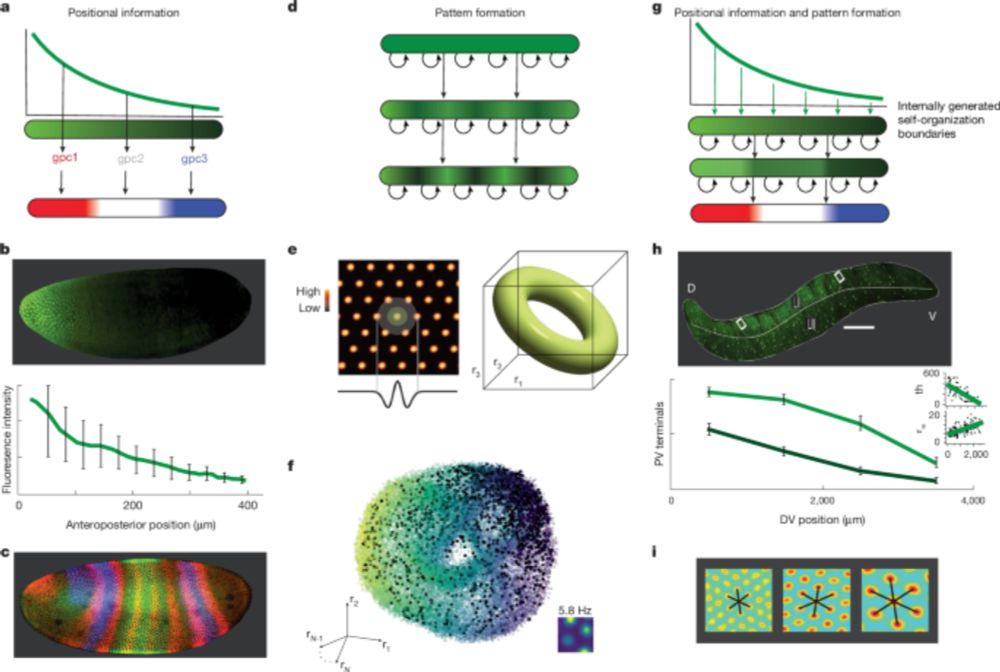

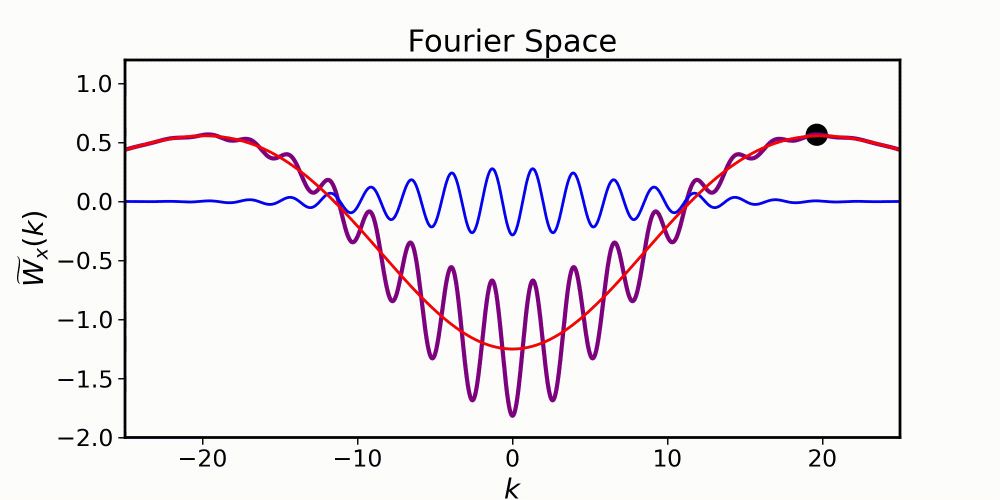

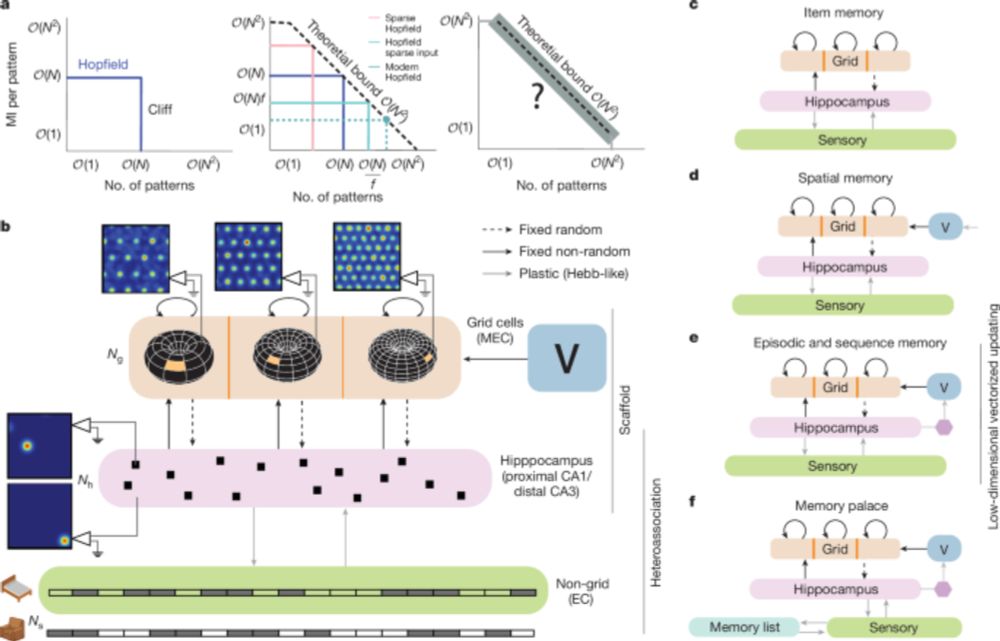

Episodic and associative memory from spatial scaffolds in the hippocampus - Nature

A neocortical–entorhinal–hippocampal network model based on grid cell states recapitulates experimental results and reconciles the spatial, associative and episodic memory roles of the hippocampus.

www.nature.com
