Sam Jaques

@sejaques.bsky.social

190 followers

110 following

74 posts

Assistant prof at U Waterloo. Aspiring full-stack cryptographer. Loves math, plants, flashcards. Opinions reflect those of all past, present, and future employers.

Sam Jaques

@sejaques.bsky.social

· Jun 20

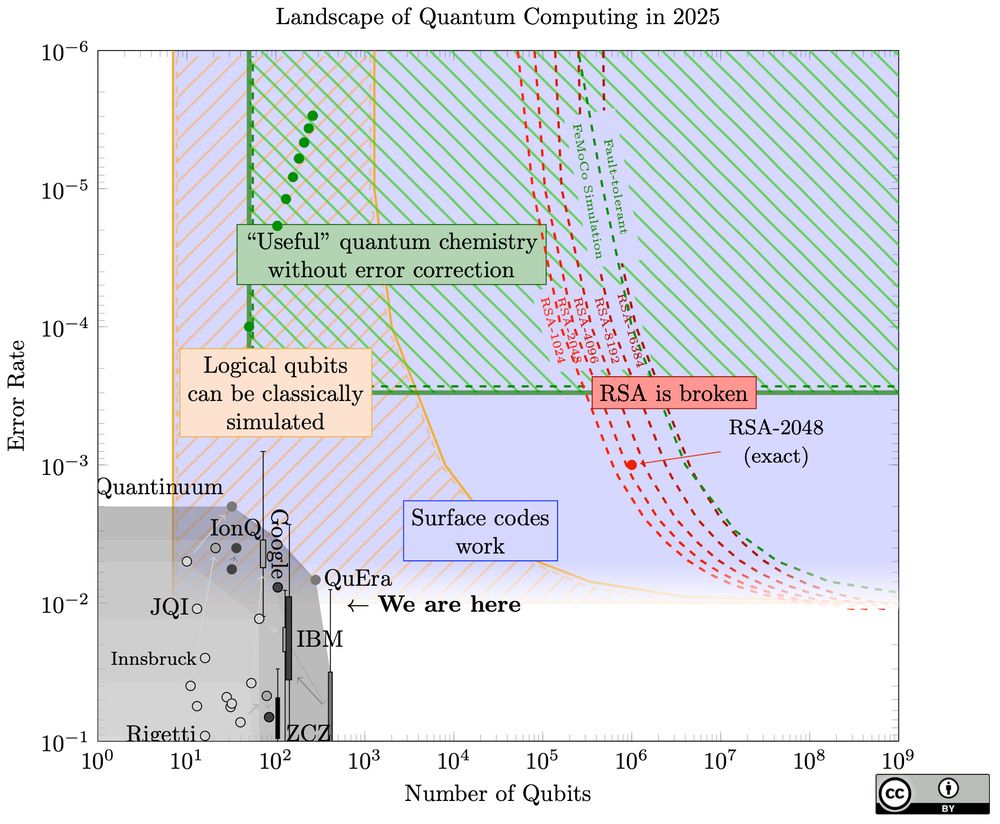

Reducing the Number of Qubits in Quantum Factoring

This paper focuses on the optimization of the number of logical qubits in quantum algorithms for factoring and computing discrete logarithms in $\mathbb{Z}_N^*$. These algorithms contain an exponentia...

eprint.iacr.org
