Seth Karten

@sethkarten.ai

160 followers

270 following

140 posts

Autonomous Agents | PhD @ Princeton | World Gen @ Waymo | Prev: CMU, Amazon | NSF GRFP Fellow

Seth Karten

@sethkarten.ai

· Aug 20

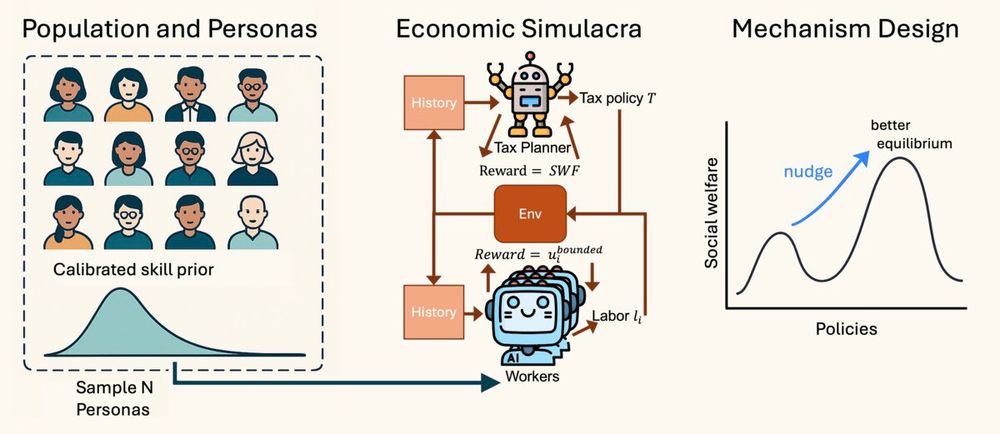

LLM Economist: Large Population Models and Mechanism Design in Multi-Agent Generative Simulacra

We present the LLM Economist, a novel framework that uses agent-based modeling to design and assess economic policies in strategic environments with hierarchical decision-making. At the lower level, b...

arxiv.org
