Multimodality and multilinguality

prev. predoc Google DeepMind

Trained on large-scale animal vocalization, human speech & music datasets, the model enables zero-shot classification, detection & querying across diverse species & environments 👇🏽

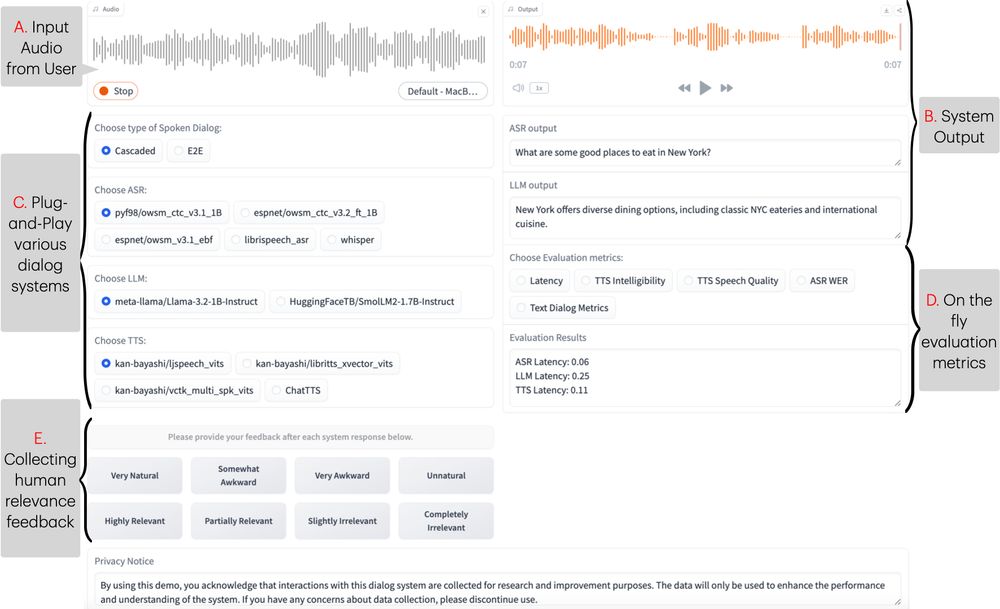

📂 Codebase (part of ESPnet): github.com/espnet/espnet

📖 README & User Guide: github.com/espnet/espne...

🎥 Demo Video: www.youtube.com/watch?v=kI_D...

📜: arxiv.org/abs/2503.08533

Live Demo: huggingface.co/spaces/Siddh...

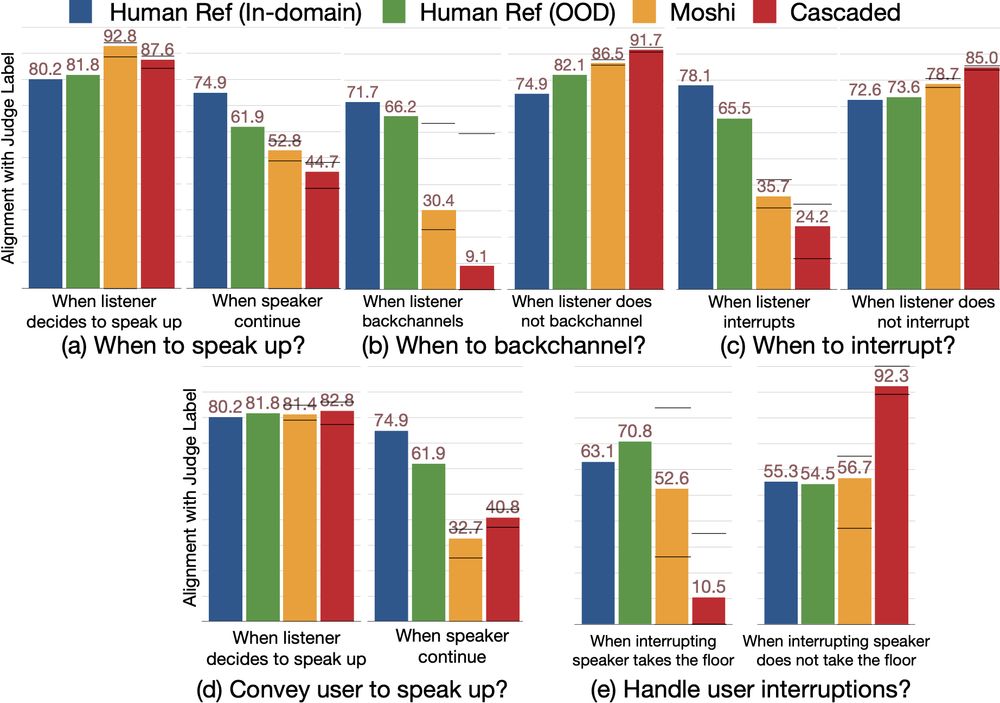

Can Audio Foundation Models like Moshi and GPT-4o truly engage in natural conversations? 🗣️🔊

We benchmark their turn-taking abilities and uncover major gaps in conversational AI. 🧵👇

📜: arxiv.org/abs/2503.01174

Tokenisation is NP-Complete

https://arxiv.org/abs/2412.15210

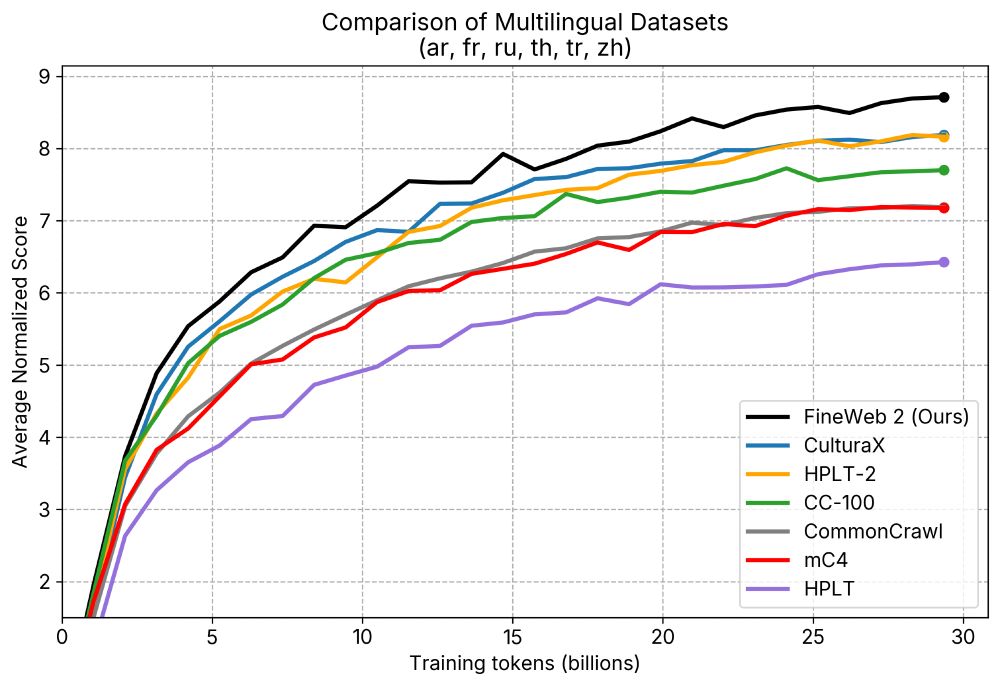

We applied the same data-driven approach that led to SOTA English performance in 🍷 FineWeb to thousands of languages.

🥂 FineWeb2 has 8TB of compressed text data and outperforms other datasets.

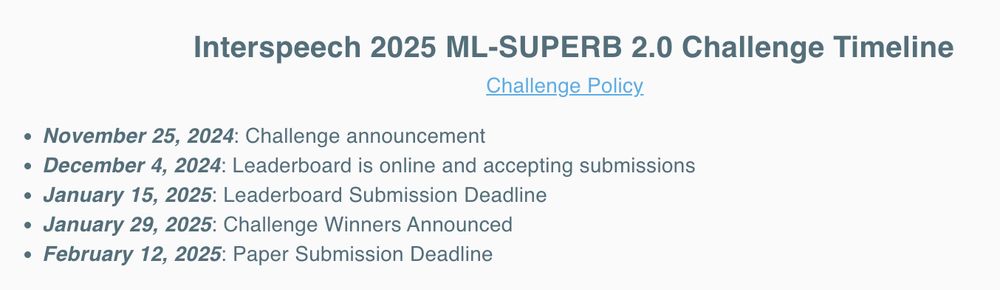

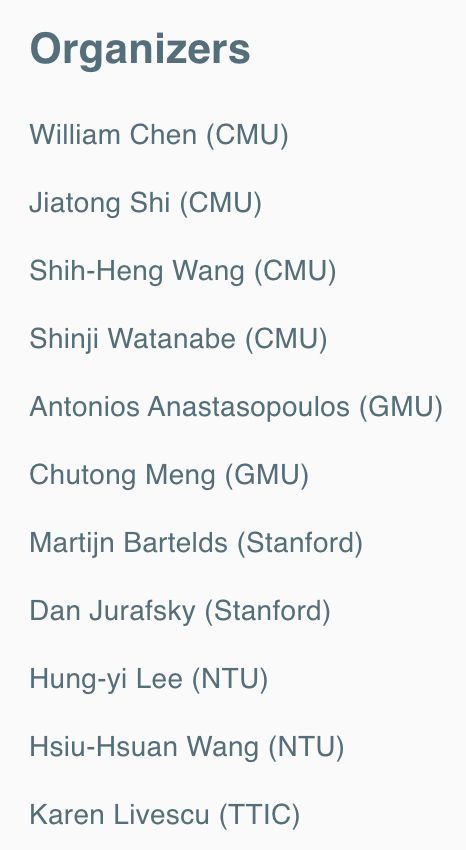

#Interspeech2025

(Self-)nominations welcome!

openreview.net/forum?id=QCY...

github.com/visipedia/in...

#prattle 💬

#bioacoustics
