Sigal Samuel

@sigalsamuel.bsky.social

420 followers

130 following

60 posts

I write about the future of consciousness for Vox

Submit a question to my philosophical advice column! https://www.vox.com/your-mileage-may-vary-advice-column

Author of the children's book OSNAT AND HER DOVE and the novel THE MYSTICS OF MILE END

Sigal Samuel

@sigalsamuel.bsky.social

· Jul 8

Let us know what moral dilemmas you’re grappling with. You could be featured in the next Your Mileage May Vary column!

I'm Sigal Samuel, a senior reporter for Future Perfect, Vox’s section about how to do good in today’s confusing world.

My advice column — Your Mileage May Vary — offers people a unique framework for t...

docs.google.com

Sigal Samuel

@sigalsamuel.bsky.social

· Jun 25

Anna Ciaunica

@annaciaunica.bsky.social

· Jun 25

The No Body Problem: Intelligence and Selfhood in Biological and Artificial Systems

Do you need a body to be an intelligent system? Clearly not. Artificial systems such as Large Language Models do not have a body and yet they are promptly qualified as intelligent and even conscious. ...

sciety-labs.elifesciences.org

Sigal Samuel

@sigalsamuel.bsky.social

· Jun 25

LSE Philosophy

@lsephilosophy.bsky.social

· Jun 25

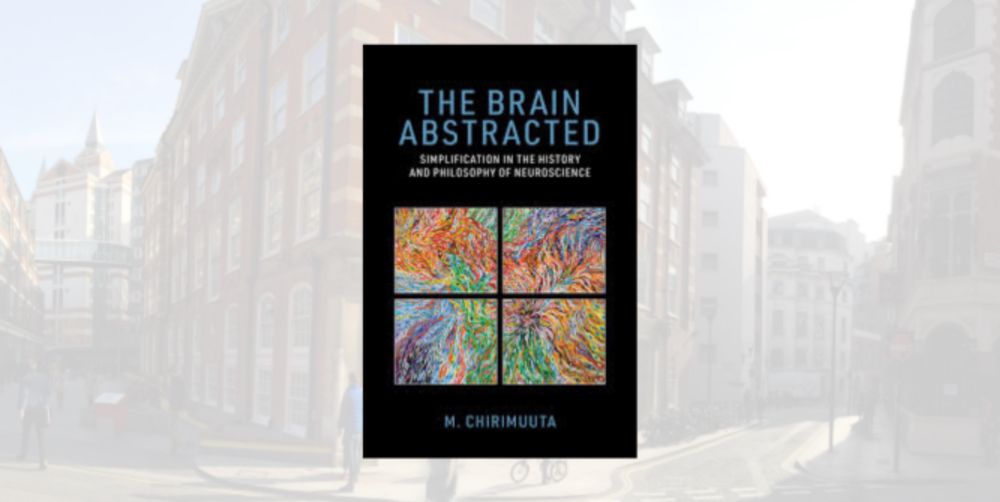

Mazviita Chirimuuta wins the 2025 Lakatos Award!

The London School of Economics and Political Science (LSE) is pleased to announce the 2025 Lakatos Award winner Mazviita Chirimuuta, who receives the award for her book “The Brain Abstracted:…

www.lse.ac.uk