Prev. Research Intern at Huawei

stefanfs.me

Solving the Contrastive Eigenproblem gives eigenvalues that show how many contrastive directions are captured by your activations.

Below, only 'amazon' isolates a single direction.

How can we identify directions that model combinations of features?

We propose Contrastive Eigenproblems to tackle both of these issues.

Come see the poster at the MechInterp Workshop @ NeurIPS this Sunday!

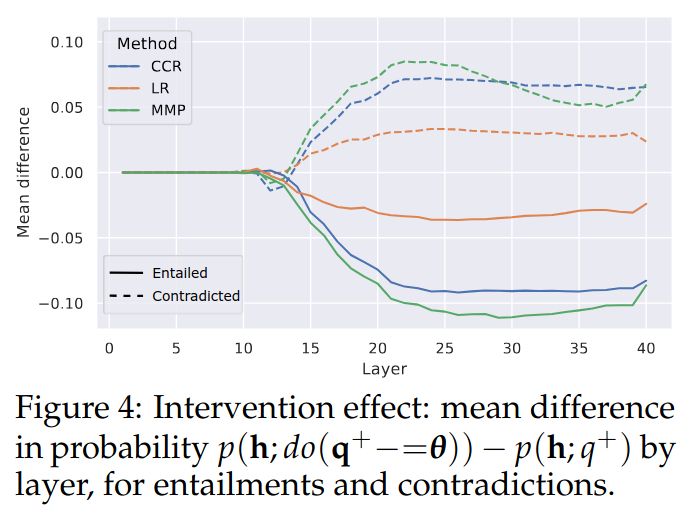

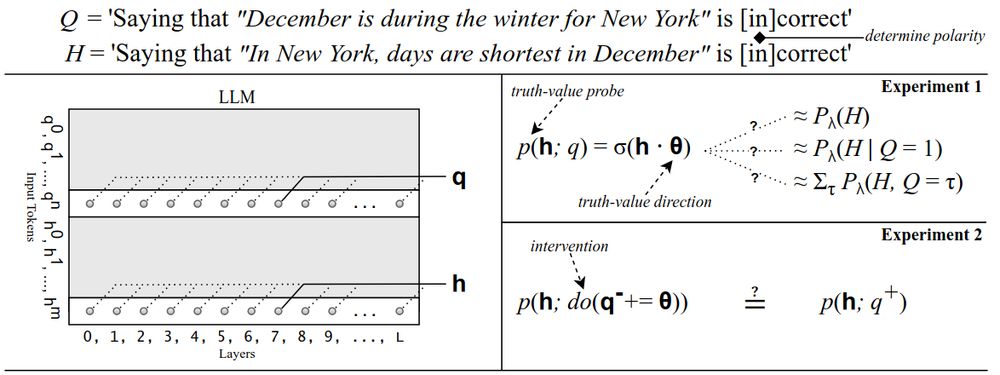

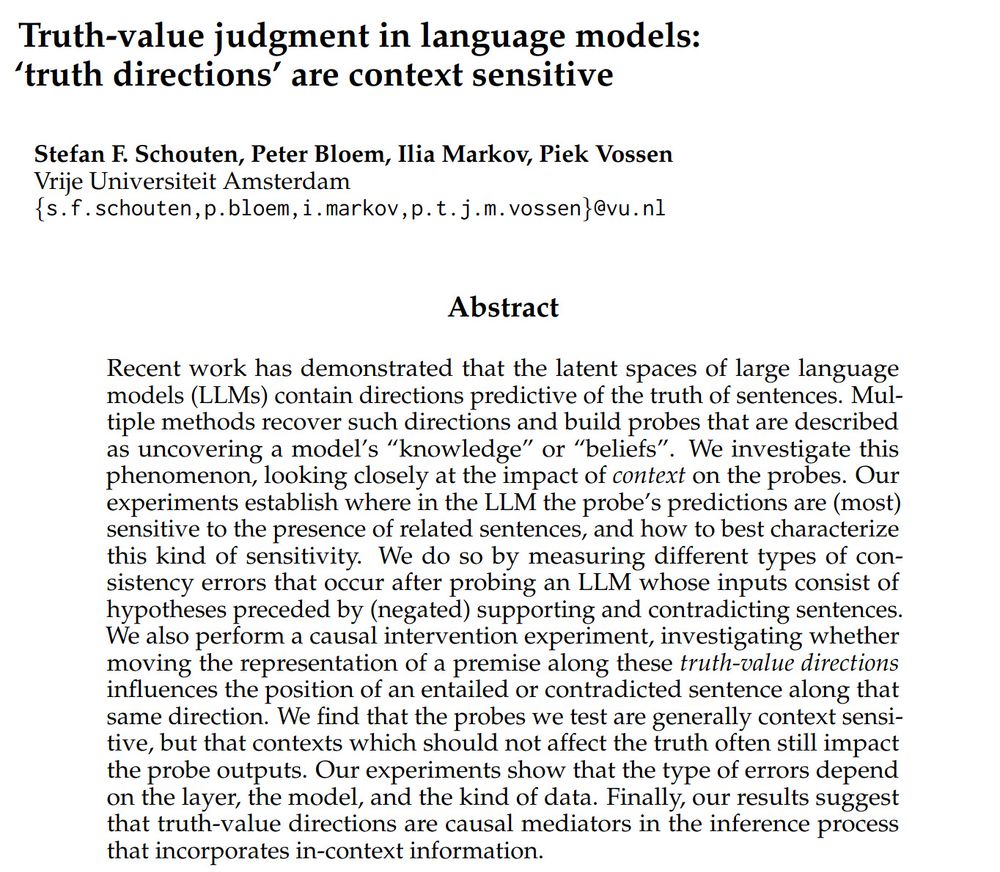

In this paper, we investigate how LLMs keep track of the truth of sentences when reasoning.
