https://www.thomasrdavidson.com/

Thanks @gligoric.bsky.social for providing an expert opinion!

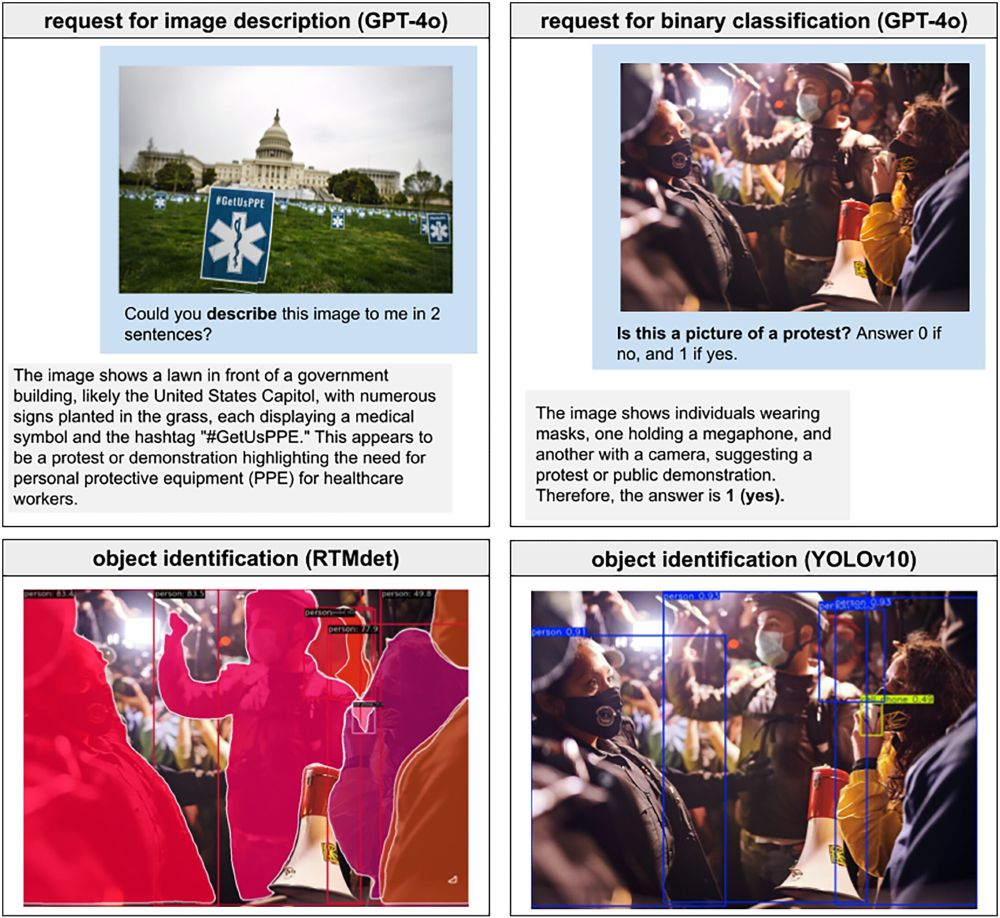

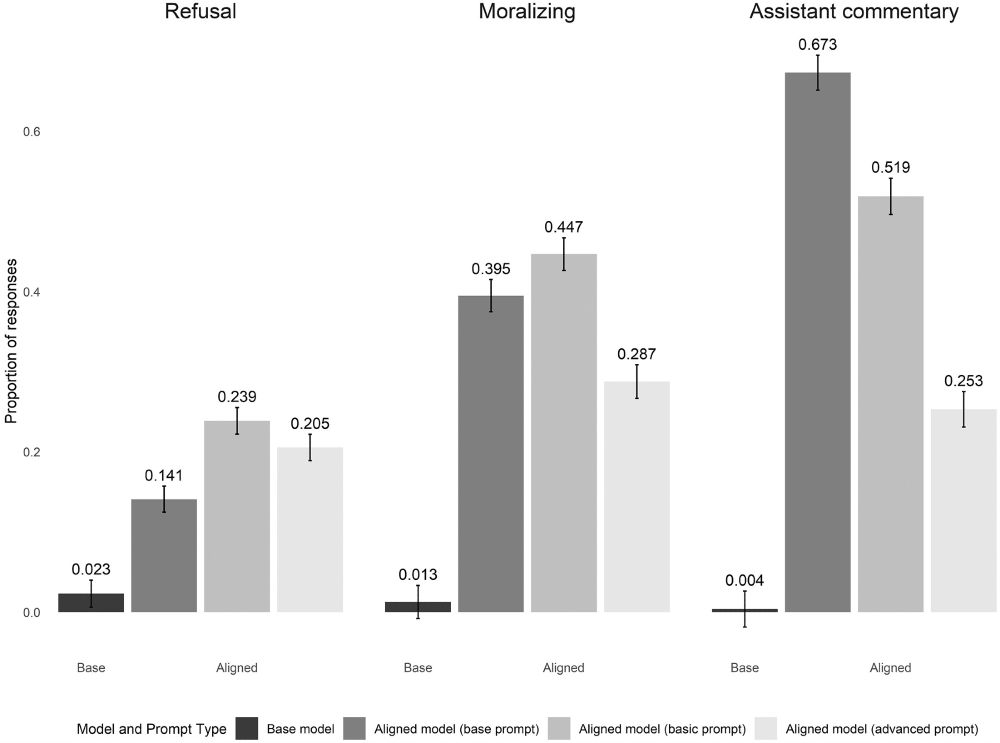

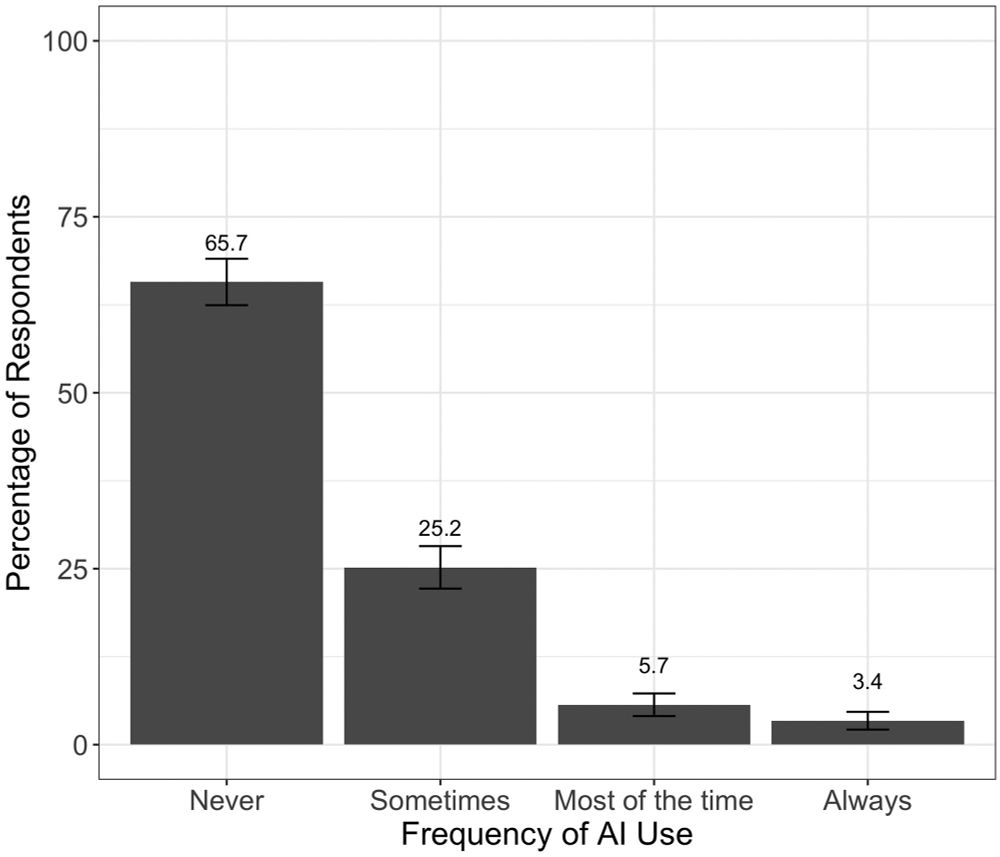

I use a conjoint experiment to test multimodal large language models (MLLMs) for context-sensitive content moderation and compare their decisions with those of human subjects. Methodologically, this demonstrates how social science techniques can enhance AI auditing. 💻🤖💬

This suggests that large reasoning model (LRM) behavior is consistent with dual-process theories of cognition, as the models expend more reasoning effort when simple heuristics are insufficient.

To what extent does LRM behavior resemble human reasoning processes?

I find that LRM reasoning effort predicts human decision time on a pairwise comparison task, and that both humans and LRMs require more time or effort on more challenging tasks.

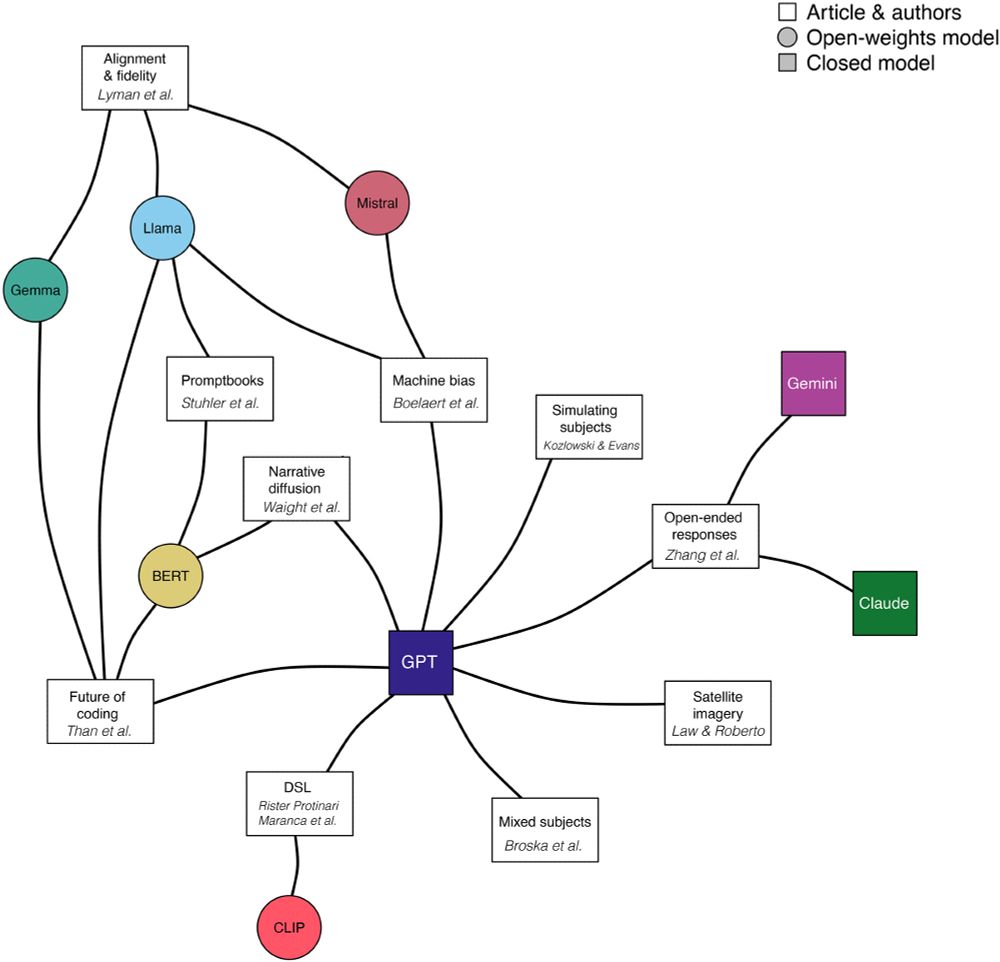

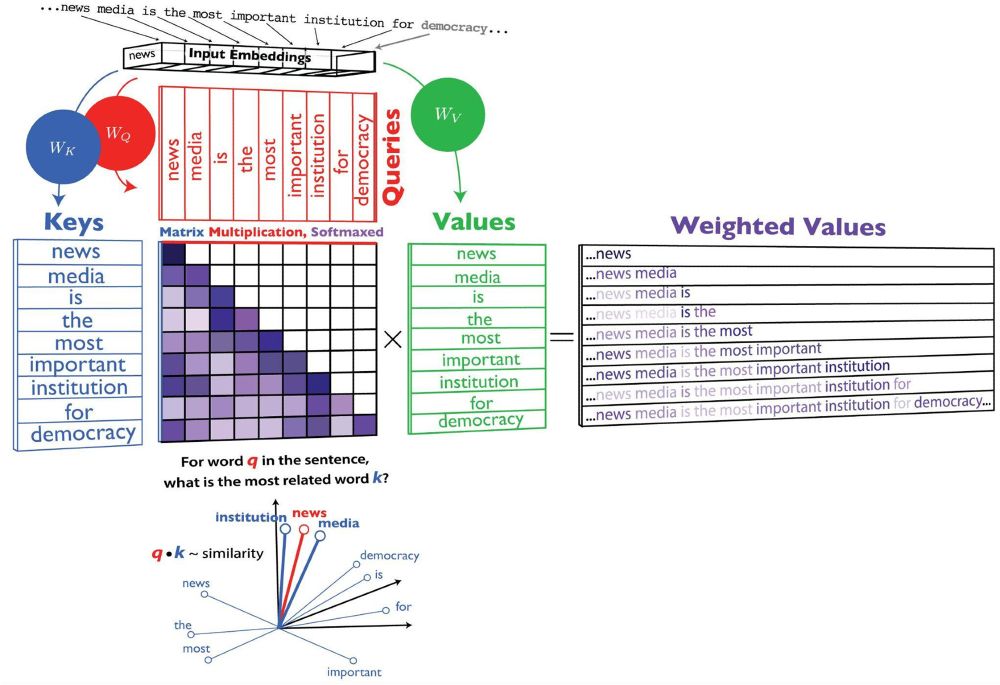

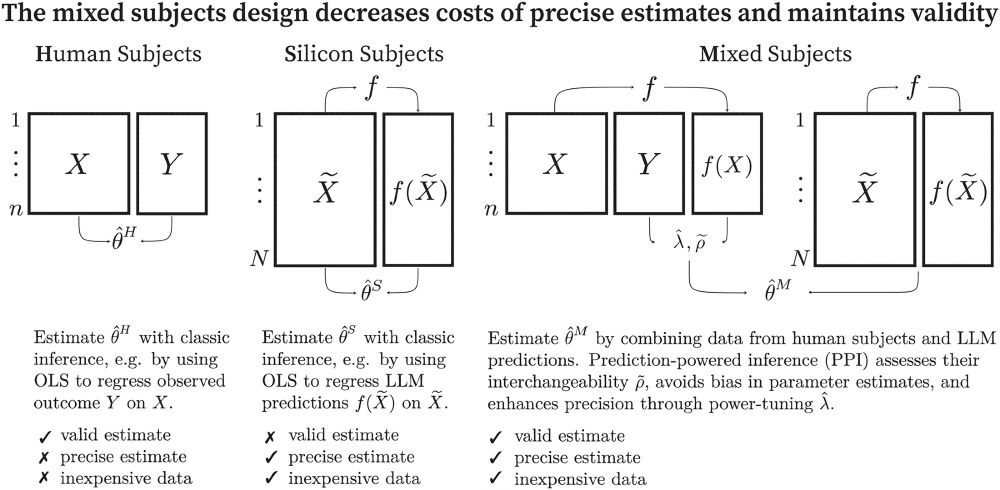

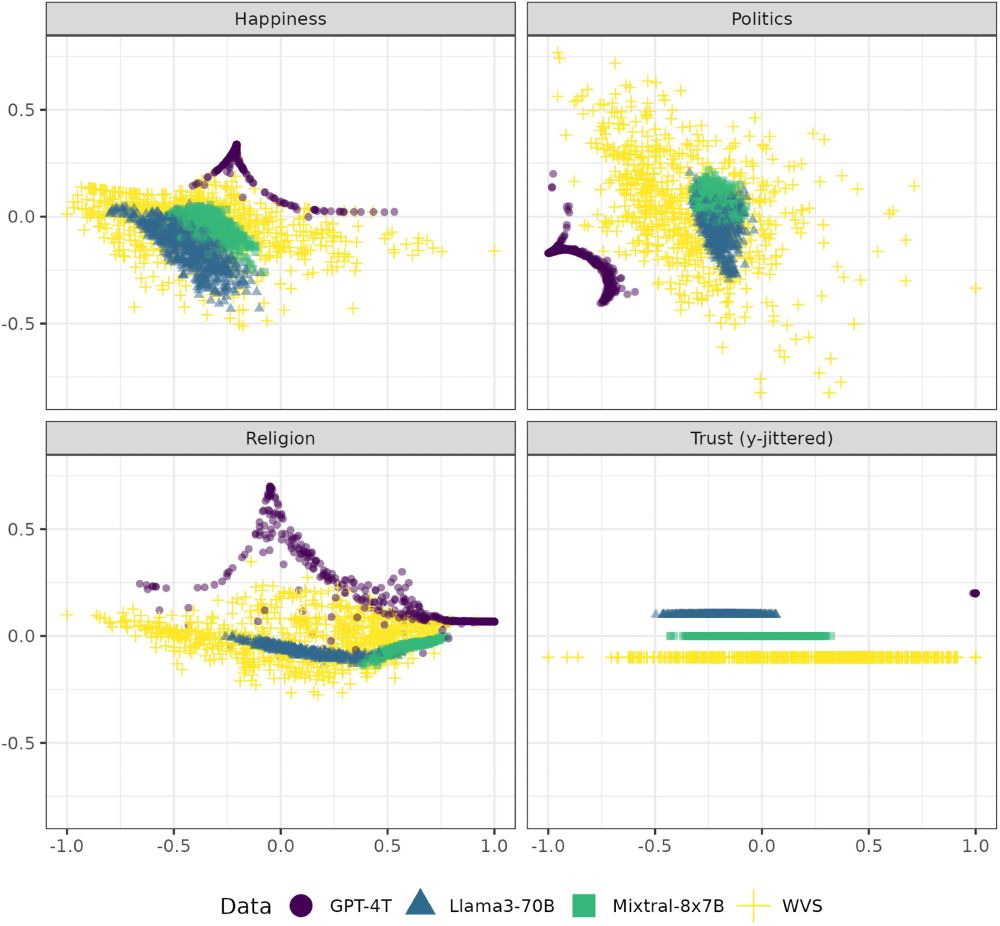

One point we emphasize is that the model ecosystem has matured and open-weight models are now viable for many problems: journals.sagepub.com/doi/10.1177/...

Here's a thread on each of the ten papers:

I had a great time learning about the department and the legacy of Frank Blackmar, who taught the first sociology class in the US, a course that has continued for 135 years: www.asanet.org/frank-w-blac...

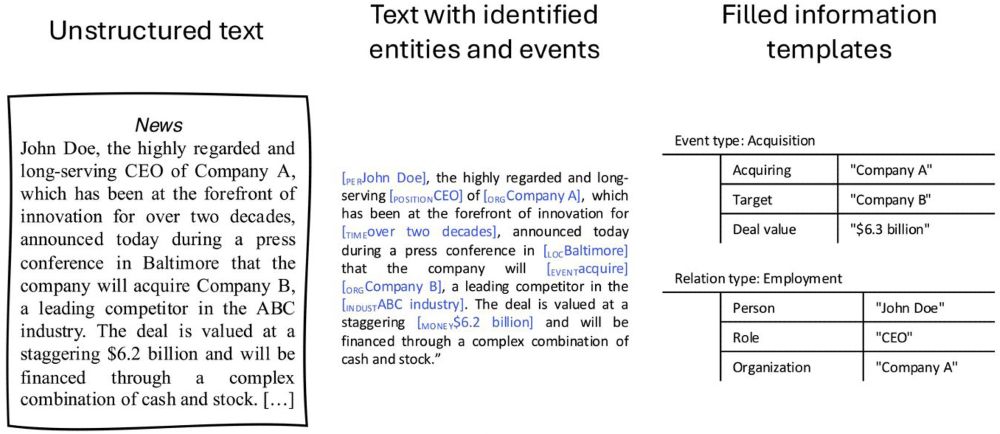

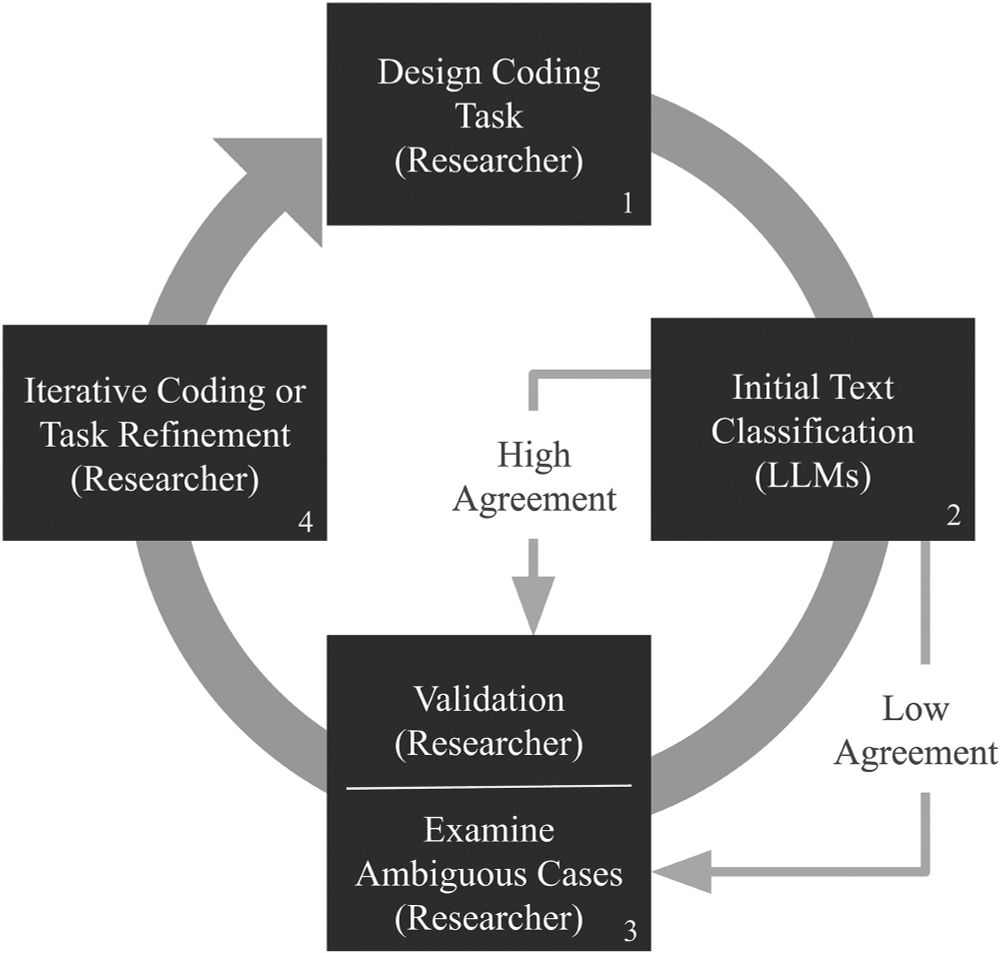

We compare different learning regimes, from zero-shot to instruction-tuning, and share recommendations for sociologists and other social scientists interested in using these models.

doi.org/10.1177/0049...