Tim Kietzmann

@timkietzmann.bsky.social

3.2K followers

320 following

91 posts

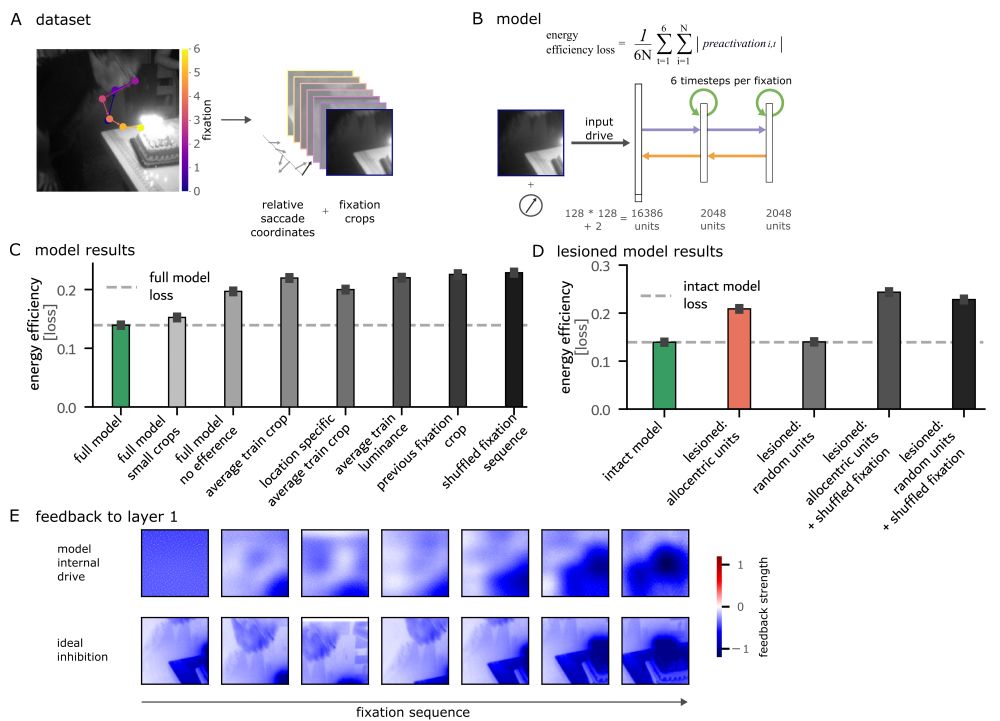

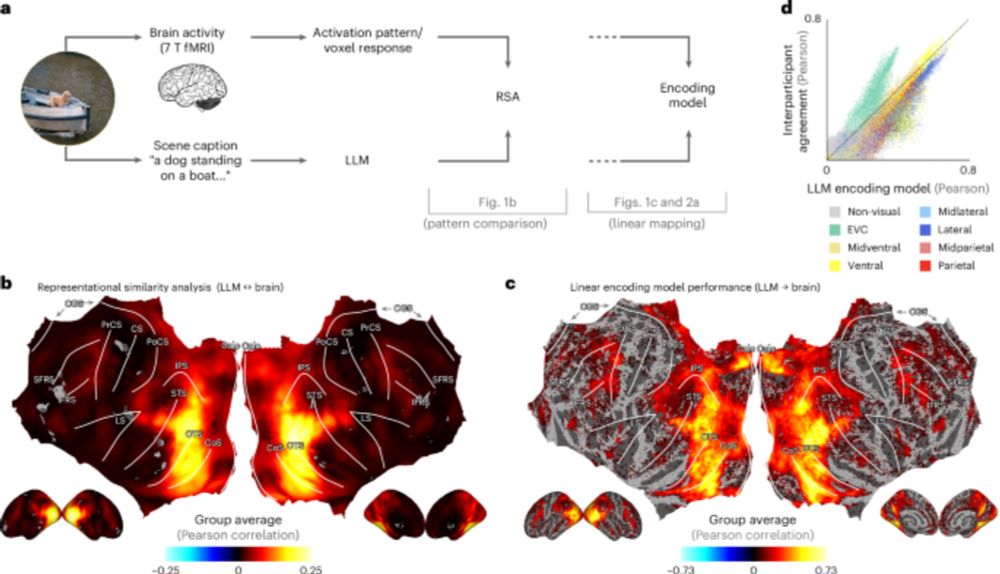

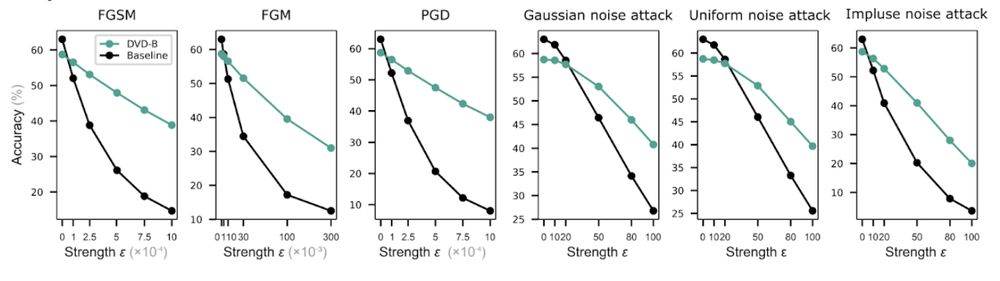

ML meets Neuroscience #NeuroAI, Full Professor at the Institute of Cognitive Science (Uni Osnabrück), prev. @ Donders Inst., Cambridge University

Pinned

Tim Kietzmann

@timkietzmann.bsky.social

· Jul 26
