Mashbayar Tugsbayar

@tmshbr.bsky.social

530 followers

150 following

19 posts

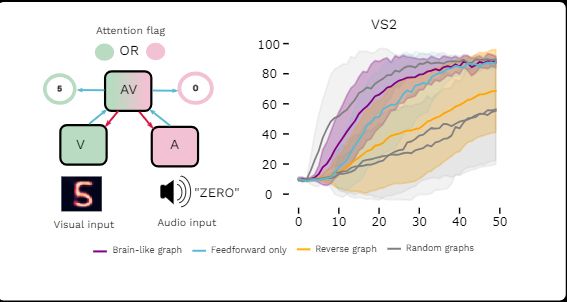

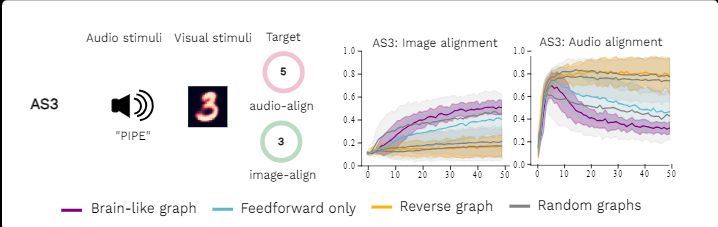

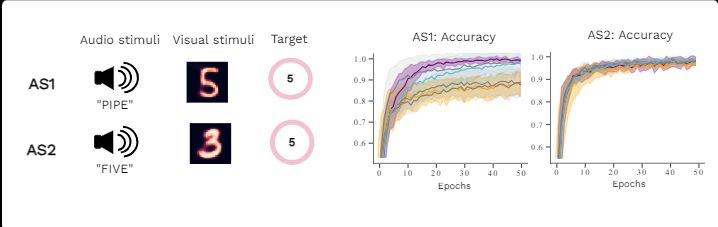

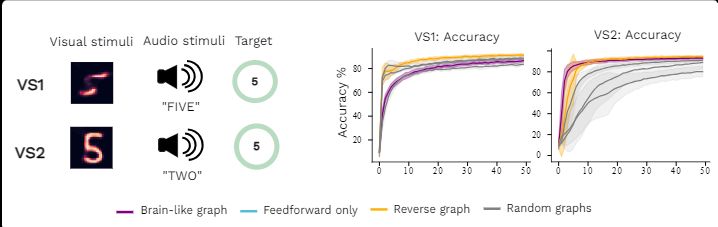

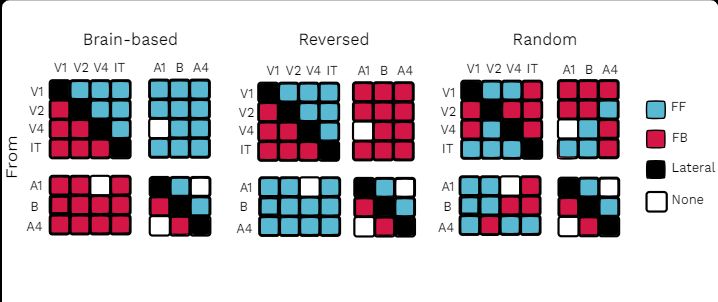

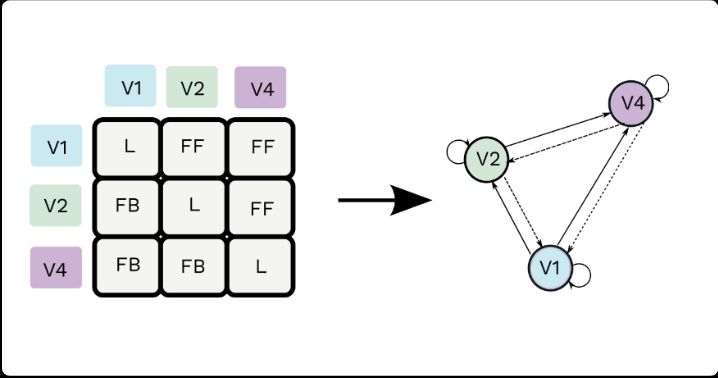

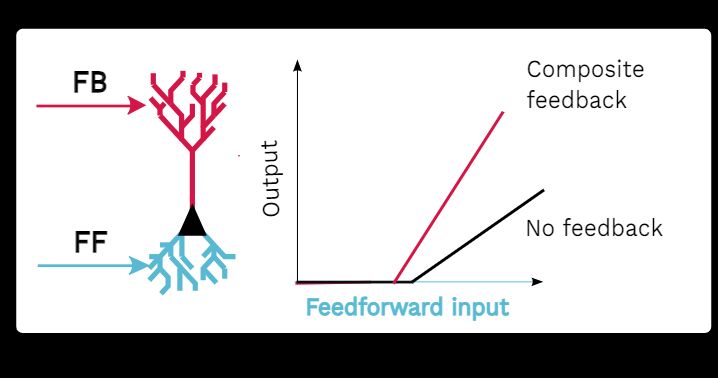

PhD student in NeuroAI @Mila & McGill w/ Blake Richards. Top-down feedback and brainlike connectivity in ANNs.

Reposted by Mashbayar Tugsbayar

Olivier Codol

@oliviercodol.bsky.social

· Mar 26

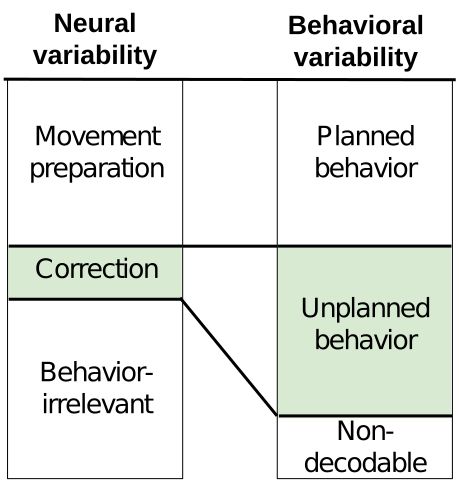

Brain-like neural dynamics for behavioral control develop through reinforcement learning

During development, neural circuits are shaped continuously as we learn to control our bodies. The ultimate goal of this process is to produce neural dynamics that enable the rich repertoire of behavi...

www.biorxiv.org

Reposted by Mashbayar Tugsbayar

Shahab Bakhtiari

@shahabbakht.bsky.social

· Mar 14