Tom Everitt

@tom4everitt.bsky.social

1.1K followers

350 following

96 posts

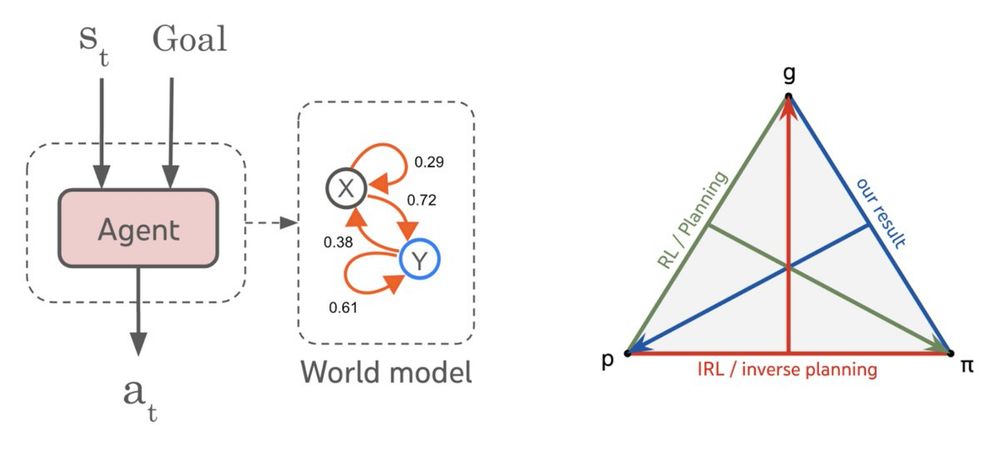

AGI safety researcher at Google DeepMind, leading causalincentives.com

Personal website: tomeveritt.se

Reposted by Tom Everitt

Edward Grefenstette

@egrefen.bsky.social

· Jul 21

Reposted by Tom Everitt

Tom Everitt

@tom4everitt.bsky.social

· Jun 27

Tom Everitt

@tom4everitt.bsky.social

· Jun 10

Tom Everitt

@tom4everitt.bsky.social

· Jun 4
