Antonin Fourcade

@toninfrc.bsky.social

500 followers

100 following

19 posts

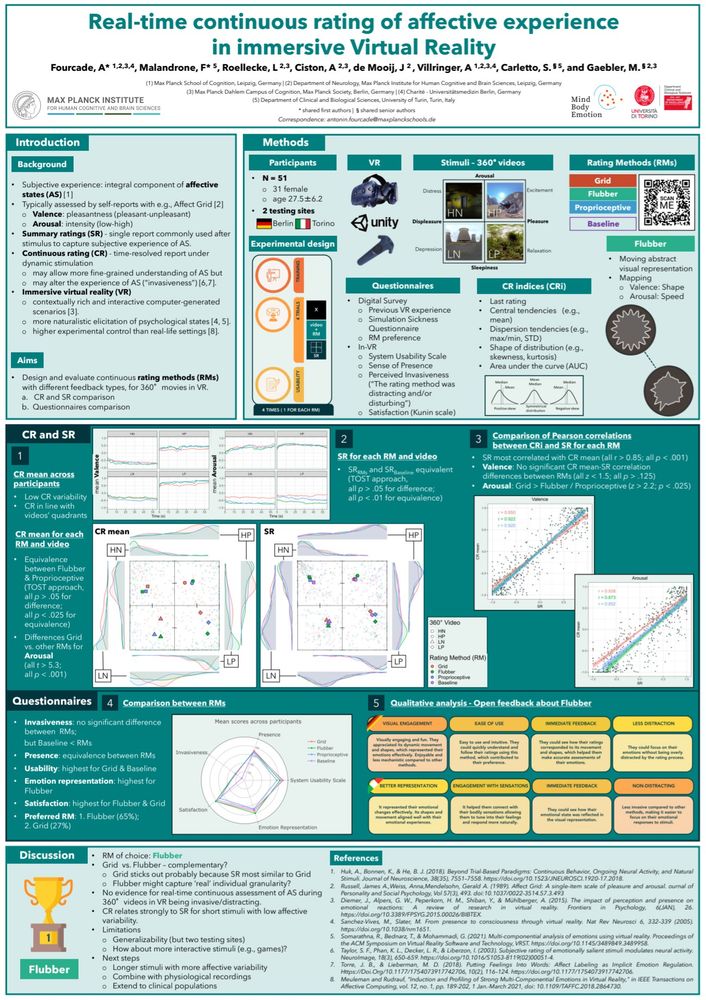

PhD student at the Max Planck School of Cognition and MPI CBS/MPDCC (Berlin). Background in Biomedical Engineering and Neuroscience. Interested in brain-heart interactions, emotions, and VR.

