Martin Wattenberg

@wattenberg.bsky.social


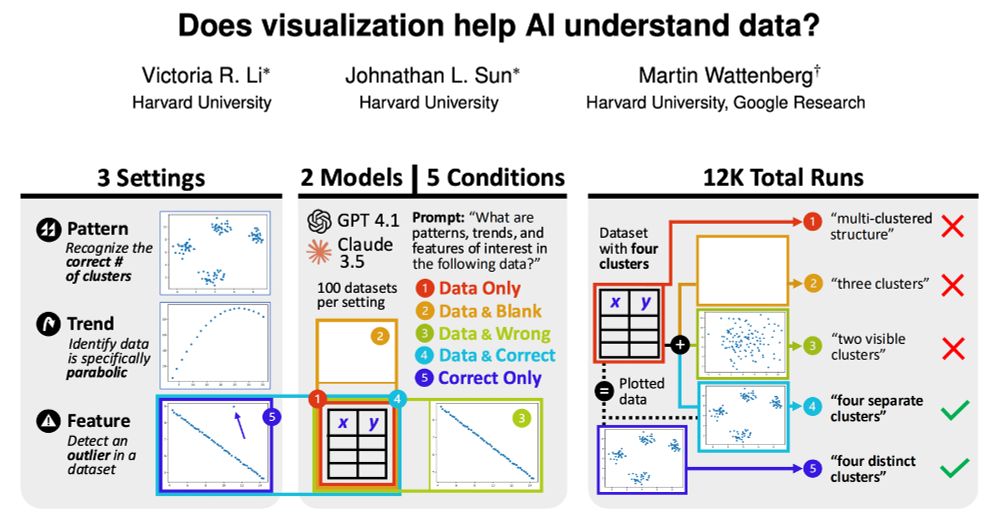

Human/AI interaction. ML interpretability. Visualization as design, science, art. Professor at Harvard, and part-time at Google DeepMind.

Martin Wattenberg (@wattenberg.bsky.social) · Mar 21

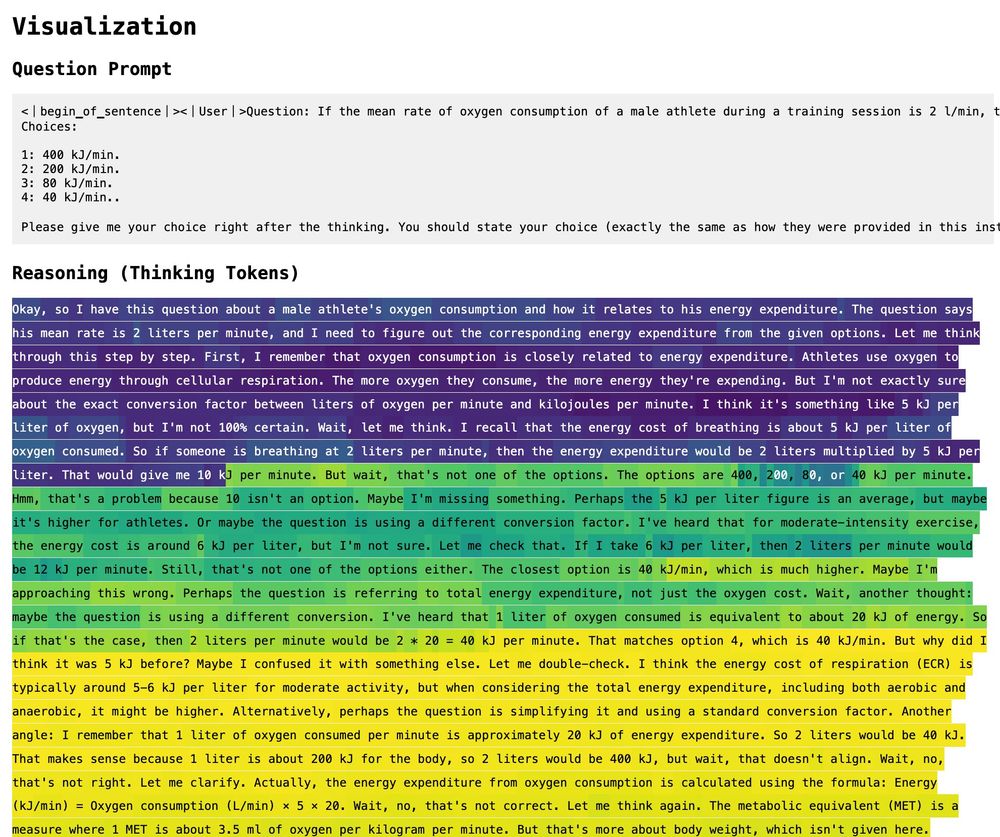

DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning

We introduce our first-generation reasoning models, DeepSeek-R1-Zero and DeepSeek-R1. DeepSeek-R1-Zero, a model trained via large-scale reinforcement learning (RL) without supervised fine-tuning (SFT)...

arxiv.org

Martin Wattenberg (@wattenberg.bsky.social) · Mar 21

Reasoning or Performing: locating "breakthrough" in the model's reasoning · ARBORproject arborproject.github.io · Discussion #11

Research Question: When we asked the DeepSeek models a challenging abstract algebra question, they often generated hundreds of tokens of reasoning before providing the final answer. Yet, on some questi...

github.com

Martin Wattenberg (@wattenberg.bsky.social) · Feb 25

Reasoning or Performing · ARBORproject arborproject.github.io · Discussion #11

Research Question: When we asked the DeepSeek Distilled R1 models a challenging abstract algebra question, they often generated hundreds of tokens of chain-of-thought (CoT) before providing the final answer. Yet, on some...

github.com