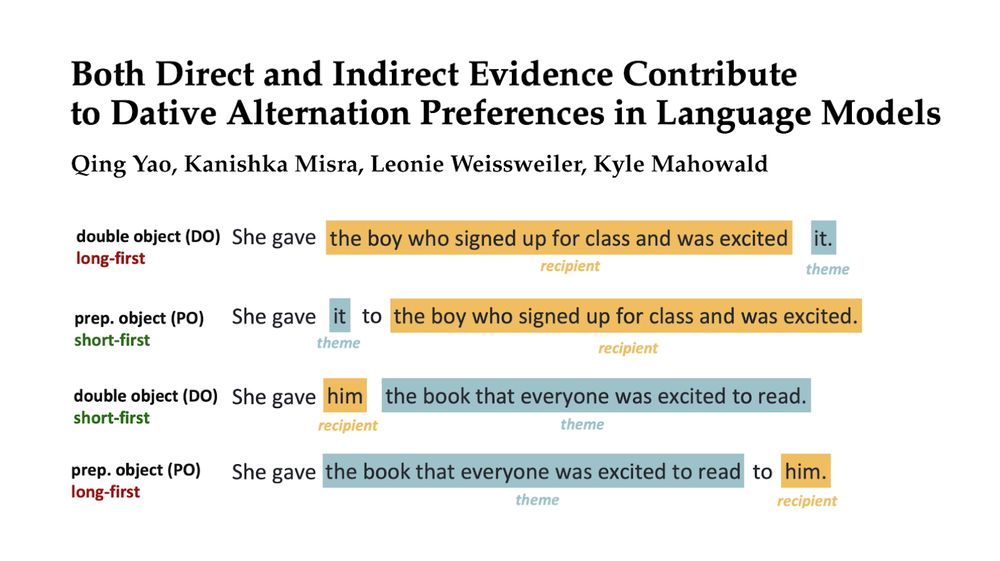

Leonie Weissweiler

@weissweiler.bsky.social

690 followers

200 following

33 posts

Postdoc at Uppsala University Computational Linguistics with Joakim Nivre

PhD from LMU Munich, prev. UT Austin, Princeton, @ltiatcmu.bsky.social, Cambridge

computational linguistics, construction grammar, morphosyntax

leonieweissweiler.github.io

Reposted by Leonie Weissweiler

Ai2

@ai2.bsky.social

· May 9
