Interested in NLP, interpretability, syntax, language acquisition and typology.

Introducing 🌍MultiBLiMP 1.0: A Massively Multilingual Benchmark of Minimal Pairs for Subject-Verb Agreement, covering 101 languages!

We present over 125,000 minimal pairs and evaluate 17 LLMs, finding that support is still lacking for many languages.

🧵⬇️

In each of these sentences, a verb that doesn't usually encode motion is being used to convey that an object is moving to a destination.

Given that these usages are rare, complex, and creative, we ask:

Do LLMs understand what's going on in them?

🧵1/7

Language models (LMs) are remarkably good at generating novel well-formed sentences, leading to claims that they have mastered grammar.

Yet they often assign higher probability to ungrammatical strings than to grammatical strings.

How can both things be true? 🧵👇

Come check it out if you're interested in multilingual linguistic evaluation of LLMs (there will be parse trees on the slides! There's still use for syntactic structure!)

arxiv.org/abs/2504.02768

LLMs learn from vastly more data than humans ever experience. BabyLM challenges this paradigm by focusing on developmentally plausible data.

We extend this effort to 45 new languages!

BLiMP-NL, in which we create a large new dataset for syntactic evaluation of Dutch LLMs, and learn a lot about dataset creation, LLM evaluation and grammatical abilities along the way.

I will present our new BLiMP-NL dataset for evaluating language models on Dutch syntactic minimal pairs and human acceptability judgments ⬇️

🗓️ Tuesday, July 29th, 16:00-17:30, Hall X4 / X5 (Austria Center Vienna)

Pre-print: arxiv.org/abs/2506.13487

Fruit of an almost year-long project by amazing MS student @ezgibasar.bsky.social in collab w/ @frap98.bsky.social and @jumelet.bsky.social

Bringing in American scientists is being paid for by denying Dutch academics an inflation adjustment to their salaries.

1/2

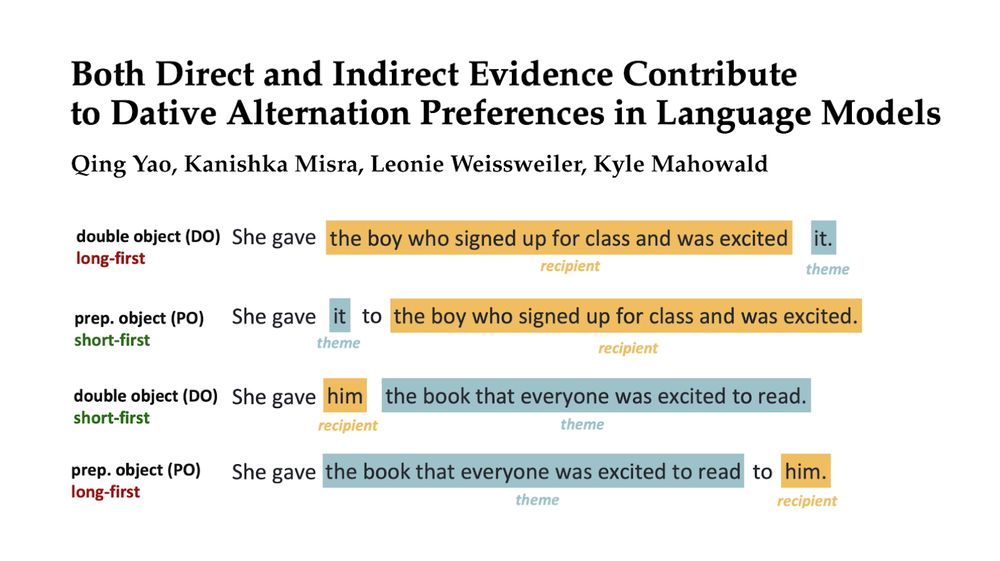

I’m happy to share that the preprint of my first PhD project is now online!

🎊 Paper: arxiv.org/abs/2505.23689

Marvellous defence of the increasingly maligned university experience by @patporter76.bsky.social

thecritic.co.uk/university-a...

PhD candidate position in Göttingen, Germany: www.uni-goettingen.de/de/644546.ht...

PostDoc position in Leuven, Belgium:

www.kuleuven.be/personeel/jo...

Deadline 6th of June

This edition will feature a new shared task on circuits/causal variable localization in LMs, details here: blackboxnlp.github.io/2025/task

The evaluation pipelines are out, baselines are released & the challenge is on

There is still time to join and

We are excited to learn from you on pretraining and human-model gaps

*Don't forget to fastEval on checkpoints

github.com/babylm/evalu...

📈🤖🧠

#AI #LLMS

arxiv.org/abs/2409.19151

[1/] Retrieving passages from many languages can boost retrieval augmented generation (RAG) performance, but how good are LLMs at dealing with multilingual contexts in the prompt?

📄 Check it out: arxiv.org/abs/2504.00597

(w/ @arianna-bis.bsky.social @Raquel_Fernández)

#NLProc

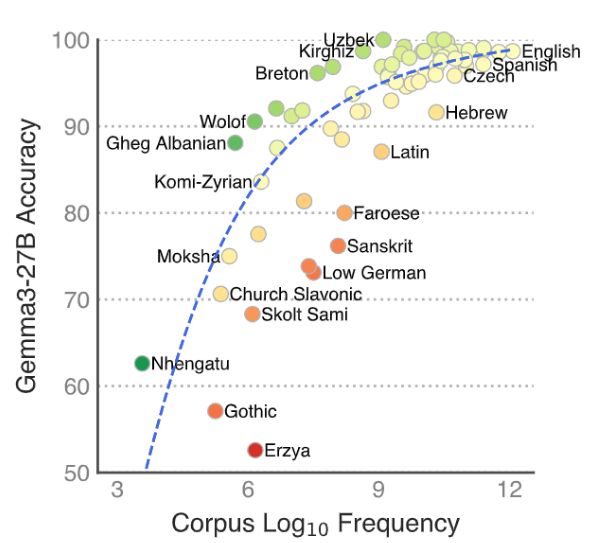

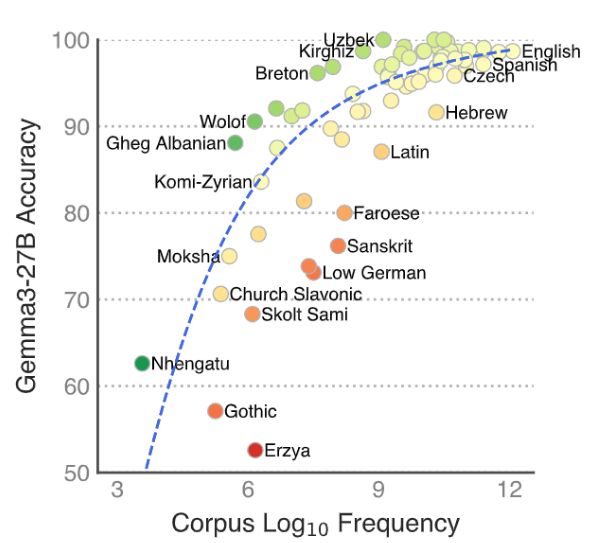

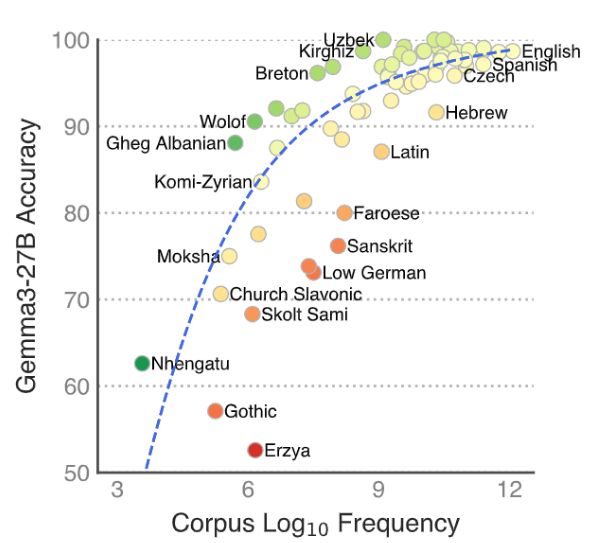

Multilinguality claims are often based on downstream tasks like QA & MT, while *formal* linguistic competence remains hard to gauge in lots of languages

Meet MultiBLiMP!

(joint work w/ @jumelet.bsky.social & @weissweiler.bsky.social)

arxiv.org/abs/2503.20850

Across models and domains, we did not find evidence that LLMs have privileged access to their own predictions. 🧵(1/8)

A teaser: It won't be about LLMs 🙃

Also I've just moved from X, so this was my very first post... Pls help out by connecting with me!

Linguistic evaluations of LLMs often implicitly assume that language is generated by symbolic rules.

In a new position paper, @adelegoldberg.bsky.social, @kmahowald.bsky.social and I argue that languages are not Lego sets, and evaluations should reflect this!

arxiv.org/pdf/2502.13195

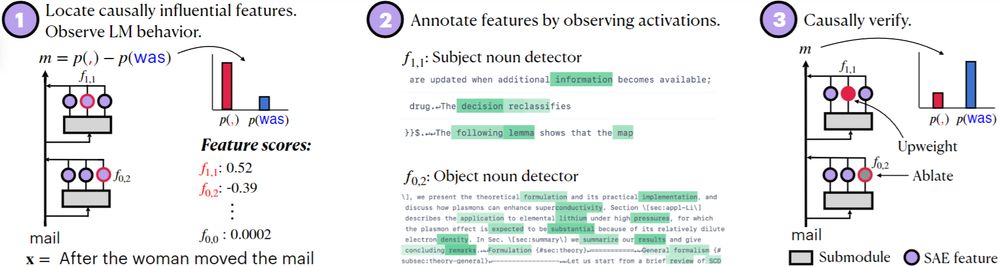

In a new paper, @amuuueller.bsky.social and I use mech interp tools to study how LMs process structurally ambiguous sentences. We show LMs rely on both syntactic & spurious features! 1/10
