Previously PleIAs, Edinburgh University.

Interested in multilingual NLP, tokenizers, open science.

📍Boston. She/her.

https://catherinearnett.github.io/

🧠 Join us for the 2025 Workshop on "Evaluating AI in Practice: Bridging Statistical Rigor, Sociotechnical Insights, and Ethical Boundaries" (Co-hosted with UKAISI)

📅 Dec 8, 2025

📝 Abstract due: Nov 20, 2025

Details below! ⬇️

evalevalai.com/events/works...

❌77% of language models on @hf.co are not tagged for any language

📈For 95% of languages, most models are multilingual

🚨88% of models with tags are trained on English

In a new blog post, @tylerachang.bsky.social and I dig into these trends and why they matter! 👇

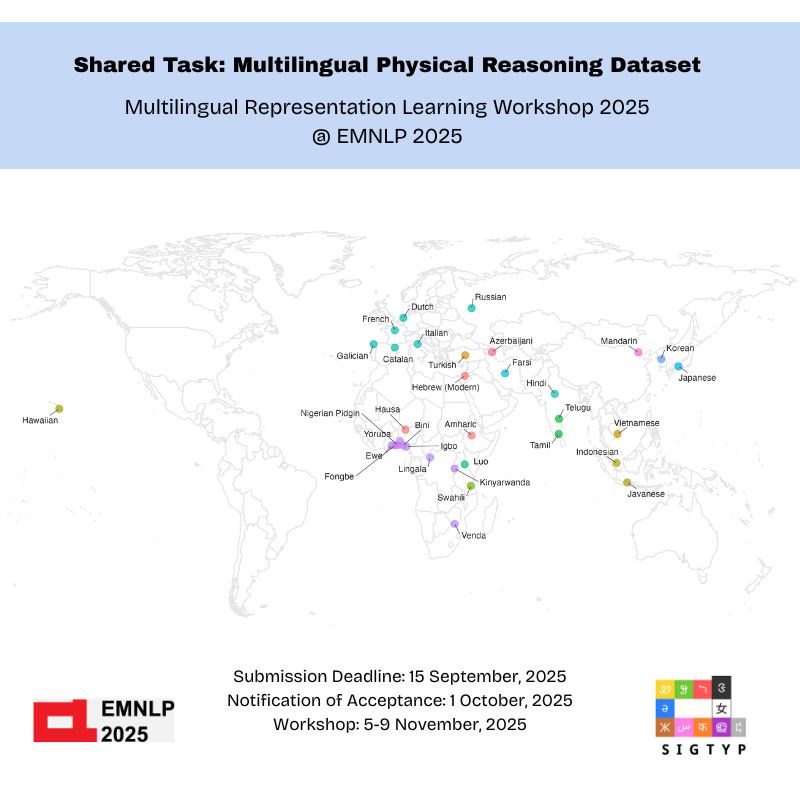

⏰ Submission Deadline: August 23rd (AoE)

🔗 CfP: sigtyp.github.io/ws2025-mrl.h...

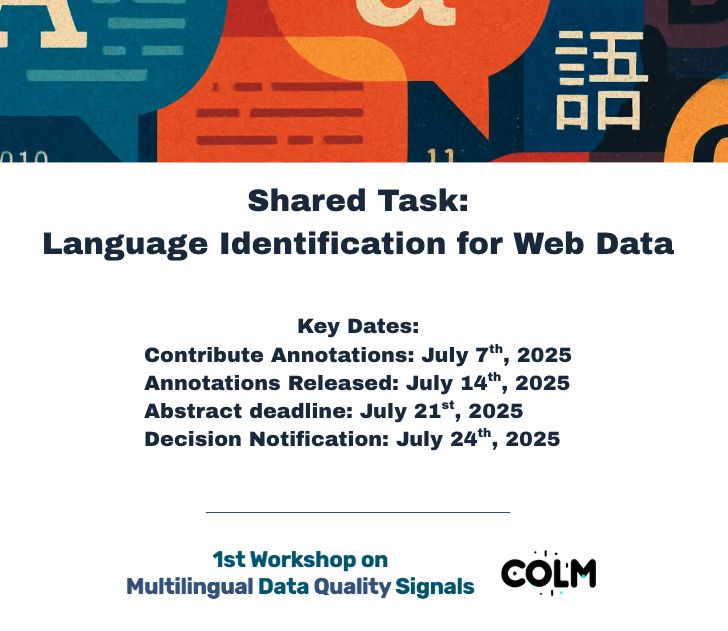

Registering is easy! All the details are on the shared task webpage: wmdqs.org/shared-task/

Deadline: July 23, 2025 (AoE) ⏰

🥇 Winner: "BPE Stays on SCRIPT: Structured Encoding for Robust Multilingual Pretokenization" openreview.net/forum?id=AO7...

🥈 Runner-up: "One-D-Piece: Image Tokenizer Meets Quality-Controllable Compression" openreview.net/forum?id=lC4...

Congrats! 🎉

"Evaluating Morphological Alignment of Tokenizers in 70 Languages" and "BPE Stays on SCRIPT: Structured Encoding for Robust Multilingual Pretokenization". Check out the paper threads below!

Our first talk is by @catherinearnett.bsky.social on tokenizers, their limitations, and how to improve them.