Zejin Lu

@zejinlu.bsky.social

79 followers

92 following

18 posts

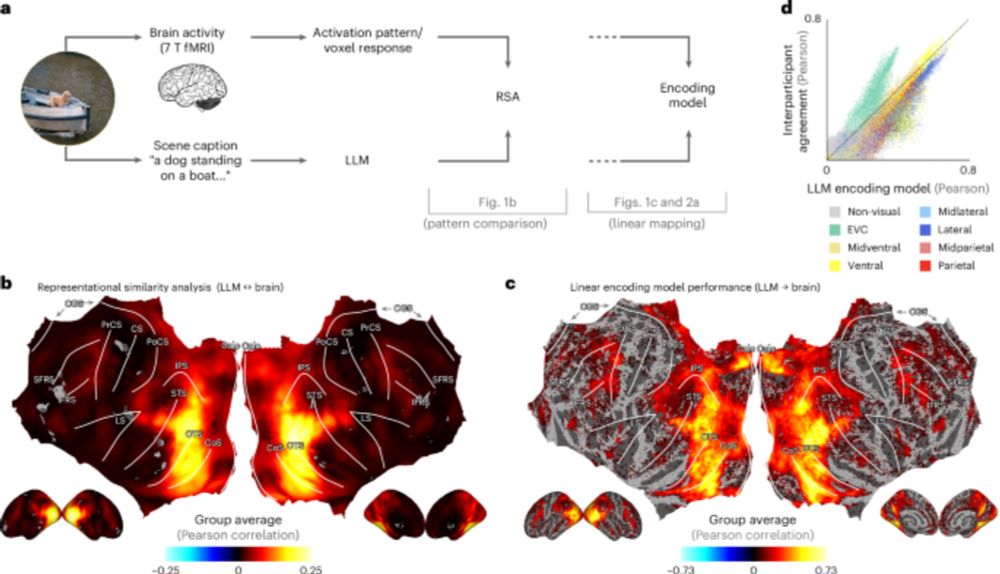

PhD Student @FU_Berlin co-supervised by Prof. Radoslaw M. Cichy and Prof. Tim Kietzmann, interested in machine learning and cognitive science.

Pinned

Zejin Lu

@zejinlu.bsky.social

· Aug 10

Reposted by Zejin Lu

Zejin Lu

@zejinlu.bsky.social

· Jul 8

Zejin Lu

@zejinlu.bsky.social

· Jun 6
