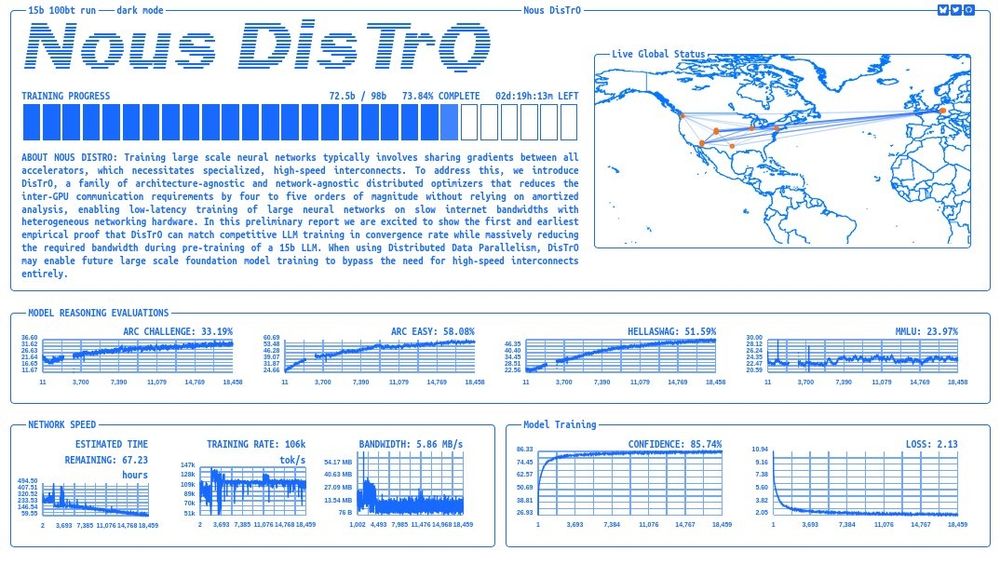

You can watch the run LIVE: distro.nousresearch.com

But it also shows the future is soon. arxiv.org/pdf/2411.103...

becoming more public over the past few months.

Curious if any big labs will release their datasets/pipelines in the next few months 🙏

🔗 huggingface.co/papers/2411....

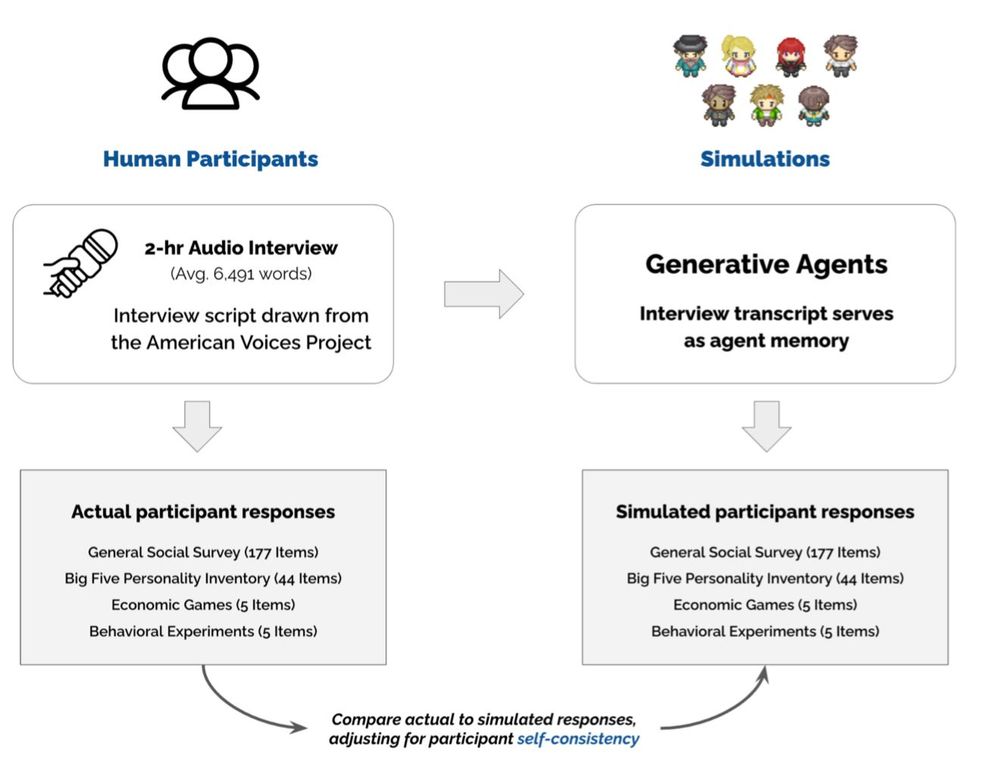

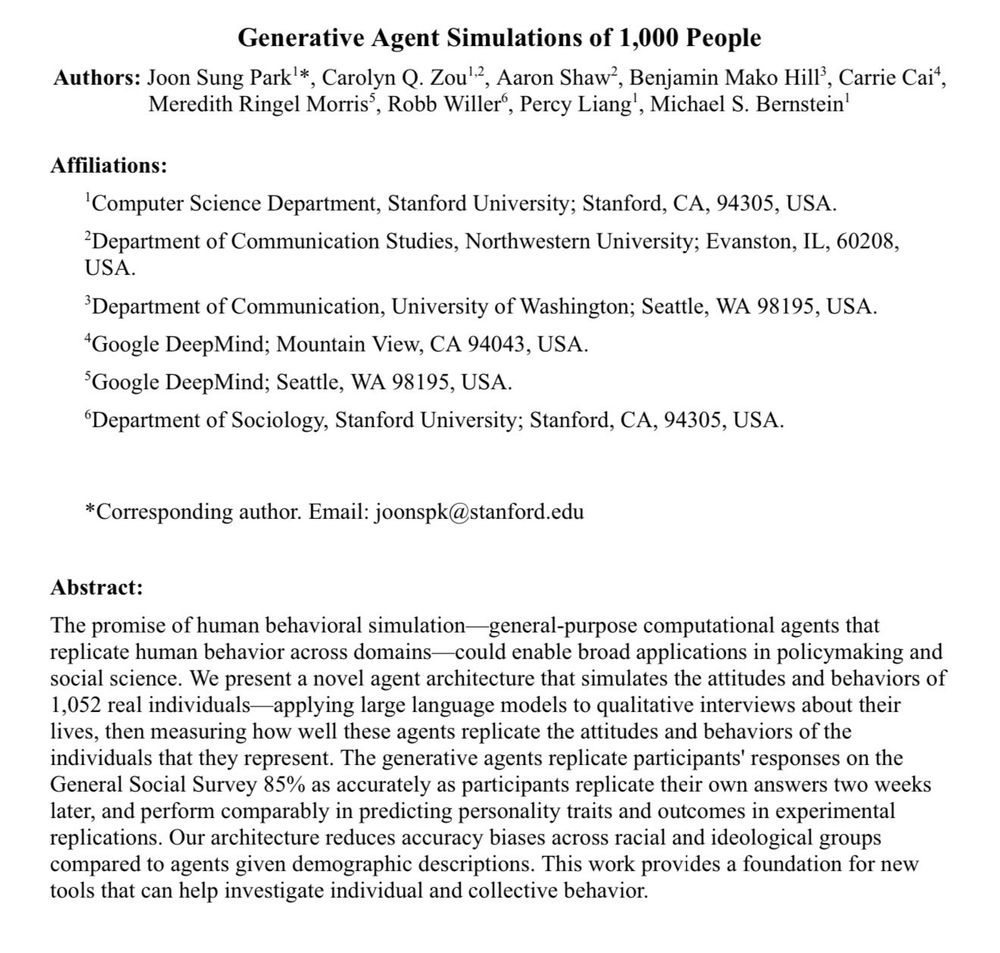

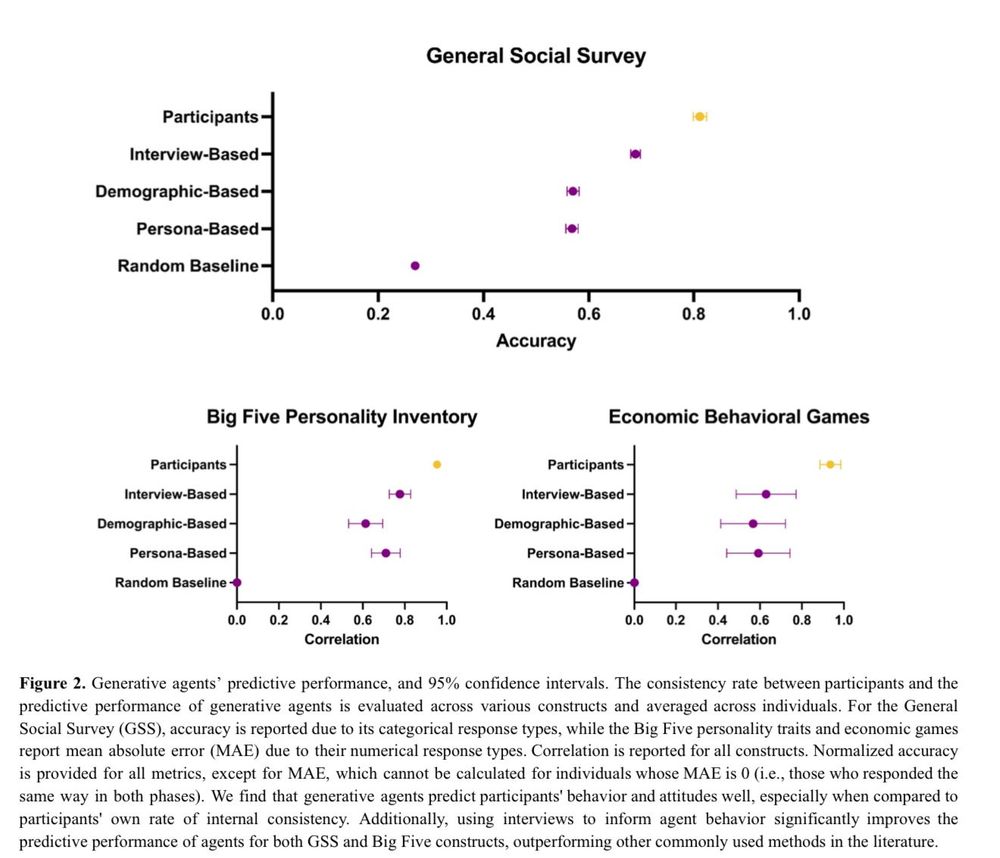

1) Voice-enabled GPT-4o conducted 2-hour interviews of 1,052 people

2) GPT-4o agents were given the transcripts & prompted to simulate the people

3) The agents were given surveys & tasks. They achieved 85% accuracy in simulating interviewees' real answers!
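
For a rough sense of what an accuracy figure like this could mean, here is a minimal sketch. It assumes accuracy is simply the share of survey items where the simulated answer matches the person's real answer; the thread doesn't say how the 85% was actually computed, so treat this as an illustration only. The function name and the sample answers are made up.

```python
def simulation_accuracy(real_answers, simulated_answers):
    """Fraction of survey items where the simulated answer equals the real one.

    Assumption: plain exact-match rate, not necessarily the paper's metric.
    """
    if len(real_answers) != len(simulated_answers):
        raise ValueError("answer lists must be the same length")
    if not real_answers:
        raise ValueError("need at least one answer")
    matches = sum(r == s for r, s in zip(real_answers, simulated_answers))
    return matches / len(real_answers)


# Made-up answers for one hypothetical interviewee (not data from the paper).
real = ["agree", "no", "yes", "disagree"]
simulated = ["agree", "no", "no", "disagree"]

accuracy = simulation_accuracy(real, simulated)
print(f"{accuracy:.0%}")
```

In this toy case the agent matches 3 of the 4 answers. A real evaluation would average over many people and many survey items.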
