Interest | Match | Feed

It details setup examples and use cases for each framework

➤ https://ku.bz/0SkgVw7Fz

BentoML has recently released llm-optimizer, an open-source framework designed to streamline the benchmarking and performance tuning of self-hosted large language models (LLMs). The tool addresses…

#AI #Shorts #Applications #Artificial #Intelligence […]

[Original post on marktechpost.com]

#ai #ml #bentoml #atdev

docs.bentoml.com/en/latest/in...

Creating the deployment pipeline! The optimized model is now in production, and I’m using BentoML to serve the model as an API. Time to make it scalable in Docker 🐳. #MLOpsZoomcamp #DataTalksClub

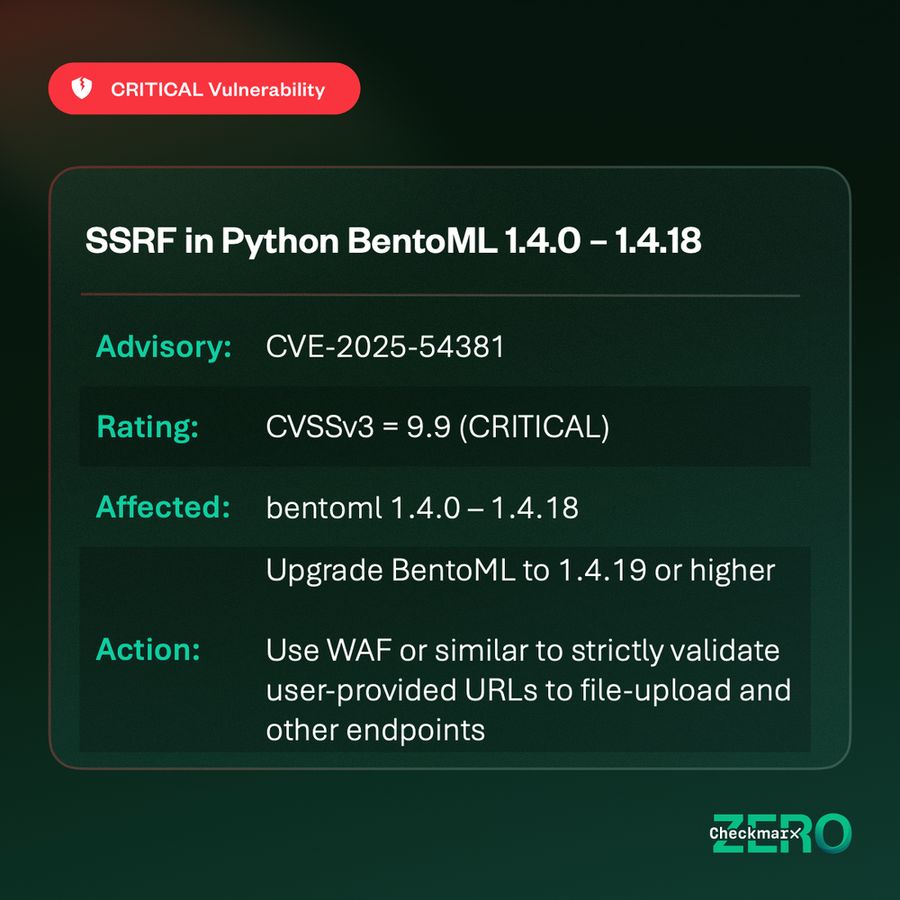

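A vulnerability has been disclosed in BentoML, a framework used to deploy machine learning models. It has been fixed in an update.

The flaw is a server-side request forgery (SSRF) vulnerability, tracked as CVE-2025-54381, in the handling of file uploads submitted as JSON or multipart/form-data.

It is said to affect most ML services that process file uploads.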

🛡️ Implement strict validation for all user-provided URLs, especially in file upload functionalities.

📛 Internal exposure is dangerous; attackers can compromise all hosted code and/or services! (🧵 3/3)
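
The URL validation advice above can be sketched roughly as follows. This is a minimal, standard-library-only illustration, not BentoML's actual fix; the names `is_safe_url`, `_is_public_ip`, and `ALLOWED_SCHEMES` are hypothetical, and the policy (http/https only, every resolved address must be globally routable) is an assumption about what "strict validation" should mean here.

```python
import ipaddress
import socket
from urllib.parse import urlsplit

# Assumption: only plain web schemes are acceptable for user-supplied URLs.
ALLOWED_SCHEMES = {"http", "https"}


def _is_public_ip(addr: str) -> bool:
    """Return True only for globally routable addresses."""
    try:
        return ipaddress.ip_address(addr).is_global
    except ValueError:
        # Unparseable address: treat as unsafe.
        return False


def is_safe_url(url: str, resolve=socket.getaddrinfo) -> bool:
    """Reject URLs that point at loopback, private, or link-local hosts.

    `resolve` is injectable so the check can be exercised without real DNS.
    """
    try:
        parts = urlsplit(url)
        port = parts.port or 443
    except ValueError:
        return False
    if parts.scheme not in ALLOWED_SCHEMES or not parts.hostname:
        return False
    try:
        infos = resolve(parts.hostname, port, proto=socket.IPPROTO_TCP)
    except (socket.gaierror, UnicodeError):
        return False
    # Every address the name resolves to must be public, not just the first.
    addresses = {info[4][0] for info in infos}
    return bool(addresses) and all(_is_public_ip(a) for a in addresses)
```

A check like this only covers the validation step. The fetch that follows would still need to connect to the address that was validated and refuse redirects to internal hosts, since a hostname can resolve differently between the check and the request.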

CVE ID : CVE-2025-54381

Published : July 29, 2025, 11:15 p.m.

Description : BentoML is a Python library for building online serving systems optimized for AI apps and model inference. In versions 1.4.0 ...
