🔗 aidailypost.com/news/human-a...

Remarkably, TTM enables SigLIP-B16 (~0.2B params) to surpass GPT-4.1 on MMVP-VLM.

Shout out to the awesome authors behind SigLIP! @giffmana.ai @xzhai.bsky.social @kolesnikov.ch and Basil Mustafa

TTM enables SigLIP-B16 (~0.2B params) to outperform GPT-4.1 on MMVP-VLM, establishing a new SOTA.

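Latent generative modeling, in which a pretrained autoencoder maps pixels into a latent space for the diffusion process, has become the standard strategy for Diffusion Transformers (DiT), yet the autoencoder component has barely evolved. Most DiTs continue to rely on the original VAE encoder...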

2510.11690, cs.CV | cs.LG, 13 Oct 2025

🆕Diffusion Transformers with Representation Autoencoders

Boyang Zheng, Nanye Ma, Shengbang Tong, Saining Xie

Mercari fine-tunes SigLIP on product image-title pairs to get a 9.1% offline improvement and a 50% CTR increase in production for visual similarity-based recommendations.

📝 arxiv.org/abs/2510.13359

We extend classical unimodal active learning (AL) to multimodal AL with unaligned data, enabling data-efficient fine-tuning and pretraining of vision-language models such as CLIP and SigLIP.

1/3

Powered by Flux.1 diffusion transformer.

Dual-branch conditioning.

Adaptive modulation.

SigLIP semantic alignment.

Read more:

aiadoptionagency.com/lucidflux-ca...

2509.22414, cs.CV, 26 Sep 2025

🆕LucidFlux: Caption-Free Universal Image Restoration via a Large-Scale Diffusion Transformer

Song Fei, Tian Ye, Lujia Wang, Lei Zhu

- if you prefer searching with danbooru-style tags, go with SigLIP

- if you prefer English sentences, go with MobileCLIP

on my Ryzen 7 7800X3D CPU, MobileCLIP is faster than SigLIP by 3-4 seconds

👇2/4

– Unlike SigLIP models, PE shows a large gap between its image-to-image and text-to-image performance.

vrg.fel.cvut.cz/ilias/

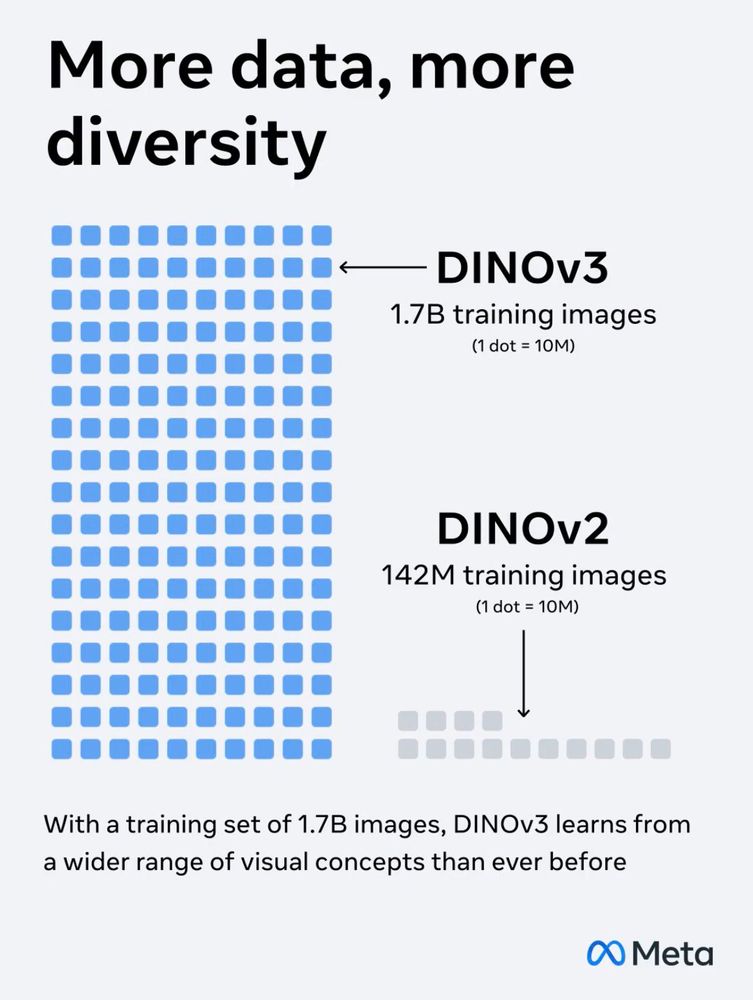

SigLIP (VLMs) and DINO are two competing paradigms for image encoders.

My intuition is that joint vision-language modeling works great for semantic problems but may be too coarse for geometry problems like SfM or SLAM.

Most animals navigate 3D space perfectly without language.

#Deepfake #SigLIP #Transformer #UNITE

www.matricedigitale.it/2025/07/27/u...
