I released the wordvector package v0.5.0. It is rapidly improving and diverging from the original Word2vec package. Please read "Align word vectors of multiple Word2vec models" to learn about the new function: blog.koheiw.net?p=2299 #rstats #quanteda

Align word vectors of multiple Word2vec models

I have been developing a new R package called wordvector since last year. I started it as a fork of the Word2vec package but made several important changes to make it fully compatible with quanteda…

blog.koheiw.net

You may have tried seededLDA already, but its recent version can capture less frequent topics better by adjusting Dirichlet priors. If you are curious how it works, please read blog.koheiw.net?p=2233

A new topic model for analyzing imbalanced corpora

I have been developing and testing a new topic model called Distributed Asymmetric Allocation (DAA) because latent Dirichlet allocation (LDA) takes a long time to fit to a large corpus, but does no…

blog.koheiw.net

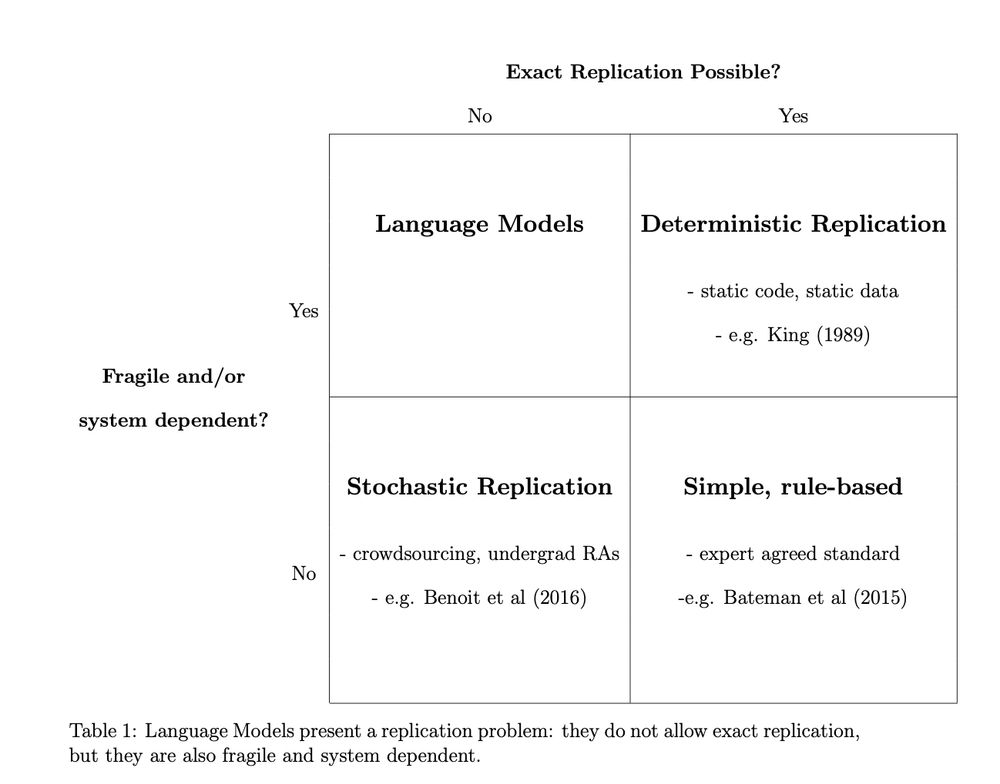

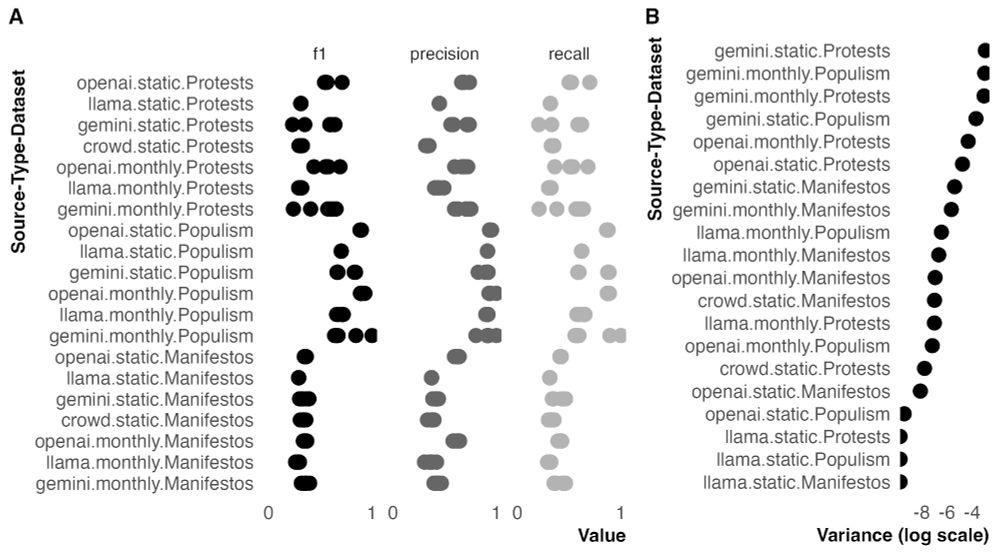

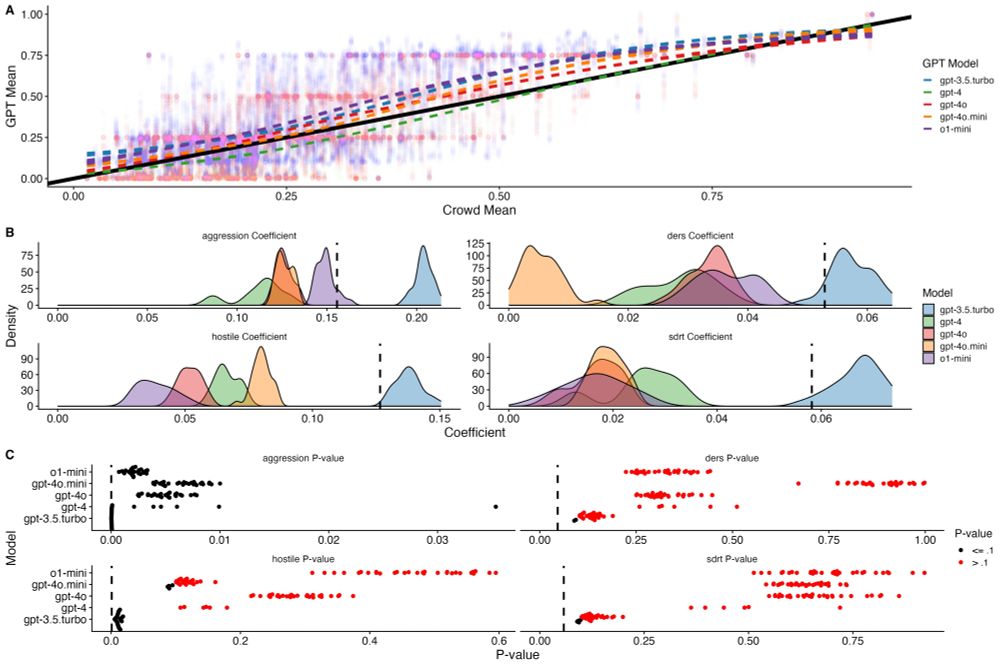

Pleased to share the latest version of my paper with Arthur Spirling and @lexipalmer.bsky.social on replication using LMs

We show:

1. current applications of LMs in political science research *don't* meet basic standards of reproducibility...

A few days ago, I received an email from a researcher asking if text analysis is becoming irrelevant because of AI... blog.koheiw.net?p=2254 #text-as-data #quanteda

If you think the number of topics, k, is the only important parameter for topic models, you need to read this post and the research paper: blog.koheiw.net?p=2233 I created a new model that optimizes the Dirichlet priors to analyze imbalanced corpora more accurately. #rstats #quanteda

A new topic model for analyzing imbalanced corpora

I have been developing and testing a new topic model called Distributed Asymmetric Allocation (DAA) because latent Dirichlet allocation (LDA) takes a long time to fit to a large corpus but do…

blog.koheiw.net
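Why the Dirichlet prior matters alongside k can be seen from one general property of the Dirichlet distribution: the expected topic proportions are E[θ_k] = α_k / Σα. A symmetric prior therefore assumes all topics are equally large, while an asymmetric prior lets some topics be small. The sketch below only illustrates that property; it is not DAA or seededLDA's implementation, and the prior values are hypothetical, chosen for illustration rather than fitted by any model.

```python
import random

def dirichlet_mean(alpha):
    """Expected topic proportions under Dirichlet(alpha): alpha_k / sum(alpha)."""
    total = sum(alpha)
    return [a / total for a in alpha]

def sample_dirichlet(alpha, rng):
    """Draw one topic-proportion vector by normalizing independent Gamma(alpha_k, 1) draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]

def average_proportions(alpha, n, seed=0):
    """Monte Carlo average of n sampled proportion vectors (approaches dirichlet_mean)."""
    rng = random.Random(seed)
    sums = [0.0] * len(alpha)
    for _ in range(n):
        for k, p in enumerate(sample_dirichlet(alpha, rng)):
            sums[k] += p
    return [s / n for s in sums]

# Hypothetical priors for k = 4 topics; both have the same total concentration (2.0).
symmetric = [0.5, 0.5, 0.5, 0.5]         # every topic expected to be equally large
asymmetric = [1.5, 0.25, 0.125, 0.125]   # one large topic and three small ones

print(dirichlet_mean(symmetric))   # [0.25, 0.25, 0.25, 0.25]
print(dirichlet_mean(asymmetric))  # [0.75, 0.125, 0.0625, 0.0625]
print(average_proportions(asymmetric, n=5000))  # close to the asymmetric mean
```

With the same k and the same total concentration, only the shape of α changes how large each topic is expected to be, which is why tuning the priors can matter for imbalanced corpora.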

Reposted by: Kohei Watanabe