AttentionLab Utrecht University

@attentionlab.bsky.social

160 followers

91 following

7 posts

AttentionLab is the research group headed by Prof. Stefan Van der Stigchel at Experimental Psychology | Helmholtz Institute | Utrecht University

Reposted by AttentionLab Utrecht University

Christoph Strauch

@cstrauch.bsky.social

· Aug 27

Reposted by AttentionLab Utrecht University

Dan Wang

@danwang7.bsky.social

· Aug 27

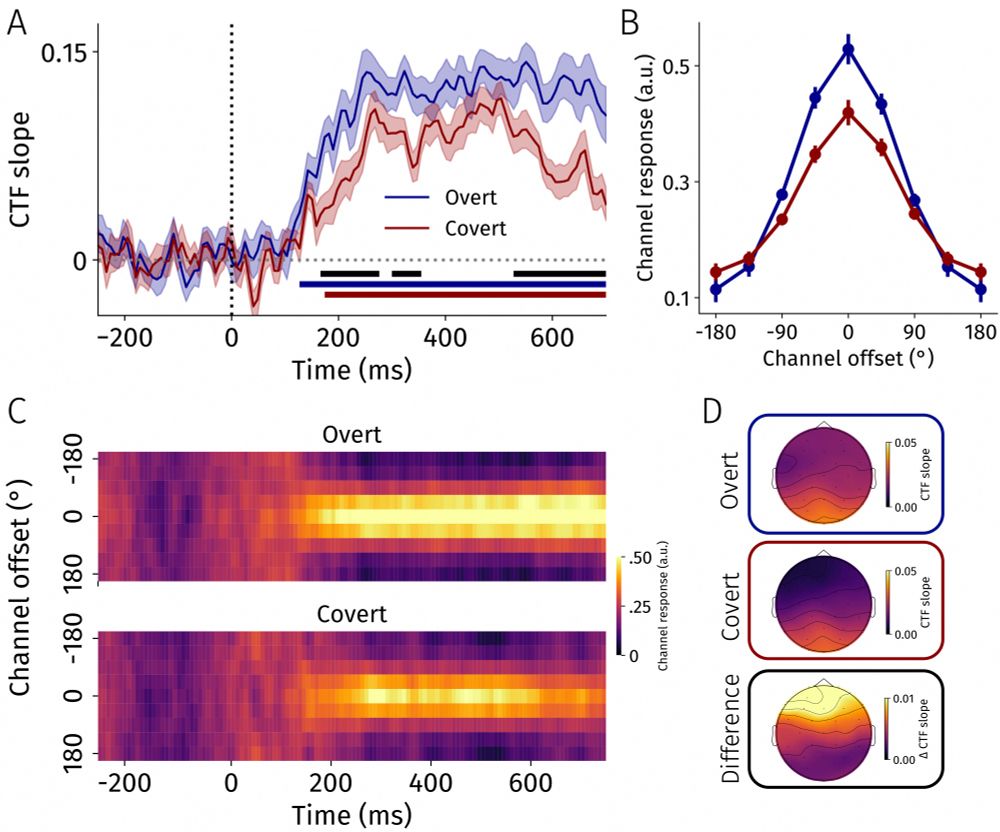

Dynamic competition between bottom-up saliency and top-down goals in early visual cortex

Task-irrelevant yet salient stimuli can elicit automatic, bottom-up attentional capture and compete with top-down, goal-directed processes for neural representation. However, the temporal dynamics und...

www.biorxiv.org

Reposted by AttentionLab Utrecht University

Dan Wang

@danwang7.bsky.social

· Aug 24

Reposted by AttentionLab Utrecht University

Damian Koevoet

@dkoevoet.bsky.social

· Jun 12

Reposted by AttentionLab Utrecht University

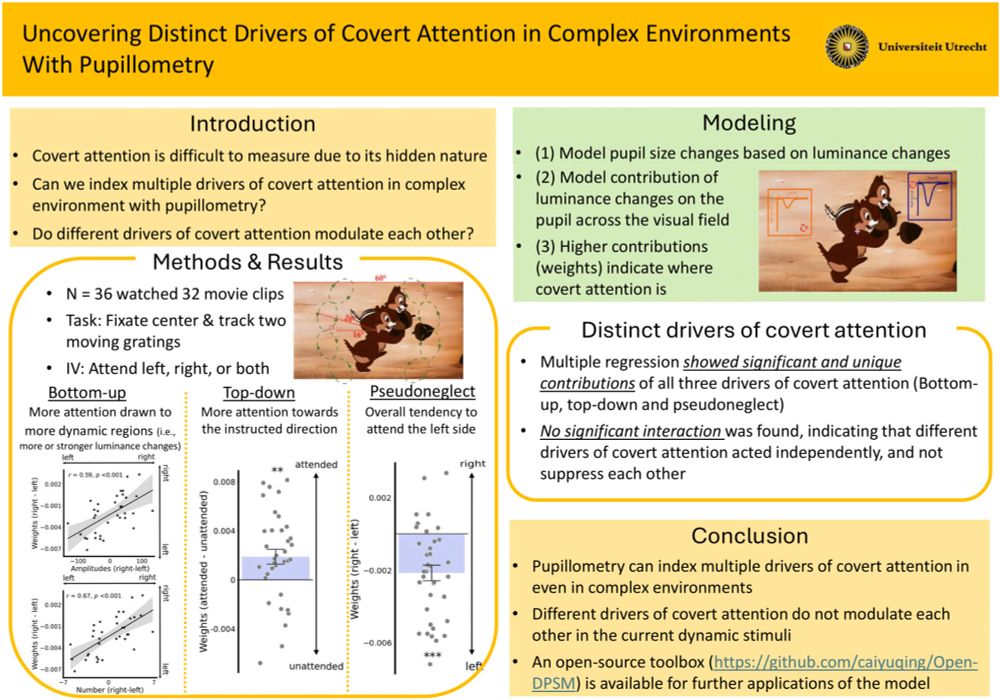

Christoph Strauch

@cstrauch.bsky.social

· Mar 21

*Psychophysiology* | SPR Journal | Wiley Online Library

Previous studies have shown that the pupillary light response (PLR) can physiologically index covert attention, but only with highly simplistic stimuli. With a newly introduced technique that models ....

doi.org

Reposted by AttentionLab Utrecht University

Damian Koevoet

@dkoevoet.bsky.social

· Mar 19

*Psychophysiology* | SPR Journal | Wiley Online Library

Dominant theories posit that attentional shifts prior to saccades enable a stable visual experience despite abrupt changes in visual input caused by saccades. However, recent work may challenge this ...

onlinelibrary.wiley.com

Reposted by AttentionLab Utrecht University

Dan Wang

@danwang7.bsky.social

· Dec 20

The priority state of items in visual working memory determines their influence on early visual processing

Items held in visual working memory (VWM) influence early visual processing by enhancing memory-matching visual input. Depending on current task deman…

www.sciencedirect.com

Damian Koevoet

@dkoevoet.bsky.social

· Dec 11

Sensory Input Matching Visual Working Memory Guides Internal Prioritization

Adaptive behavior necessitates the prioritization of the most relevant information in the environment (external) and in memory (internal). Internal prioritization is known to guide the selection of ex...

www.biorxiv.org