We show that adaptation to numerosity (a seemingly high-level stimulus property) suppresses neural responses in early visual cortex; these adaptation effects increase as we progress through the visual processing hierarchy.

www.nature.com/articles/s42...

3+2 year 100% TVL-13 position in '26 - open topic on the intersection of combined EEG-EyeTracking, Statistical Methods, Cognitive Modelling, VR/Mobile EEG, Vision ...

Apply via Max-Planck IMPRS-IS program until 2025-11-16 imprs.is.mpg.de

Read: www.s-ccs.de/philosophy

I'm open to discussing the topic, but of course I have many ideas!

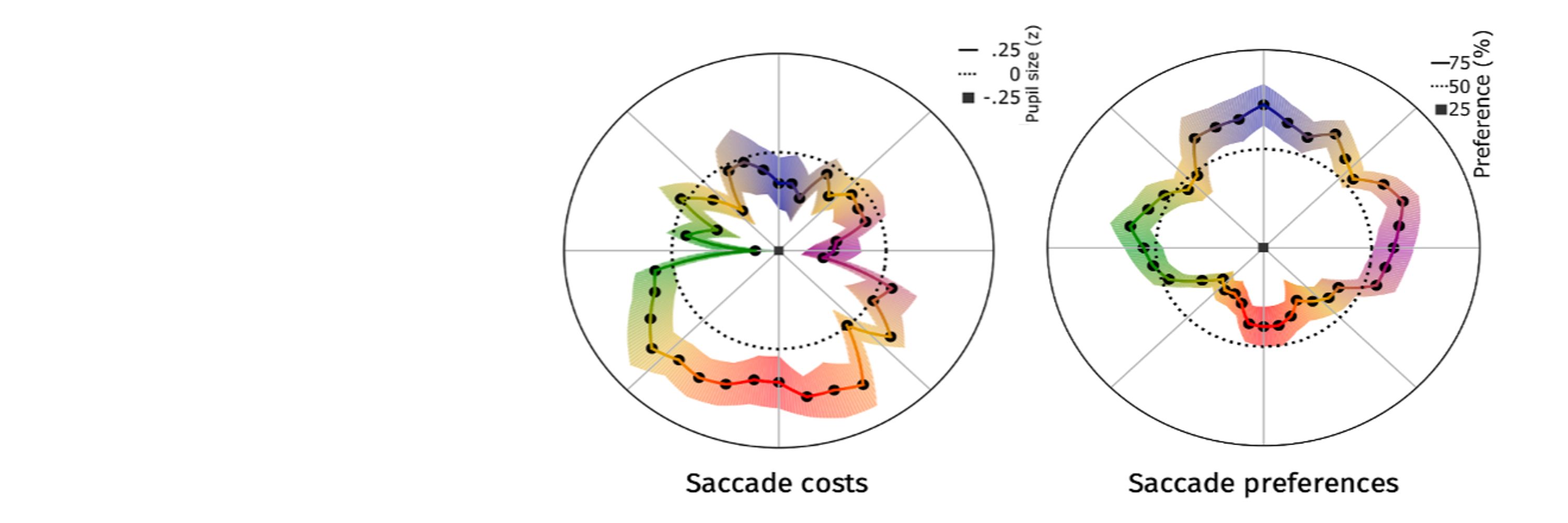

In a new paper @jocn.bsky.social, we show that pupil size independently tracks breadth and load.

doi.org/10.1162/JOCN...

say hi and show your colleagues that you're one of the dedicated ones by getting up early on the last day!

I'll show data demonstrating that synesthetic perception is perceptual, automatic, and effortless.

Join my talk (Thursday, early morning.., Color II) to learn how the qualia of synesthesia can be inferred from pupil size.

Join and/or say hi!

@attentionlab.bsky.social @ecvp.bsky.social

Preprint for more details: www.biorxiv.org/content/10.1...

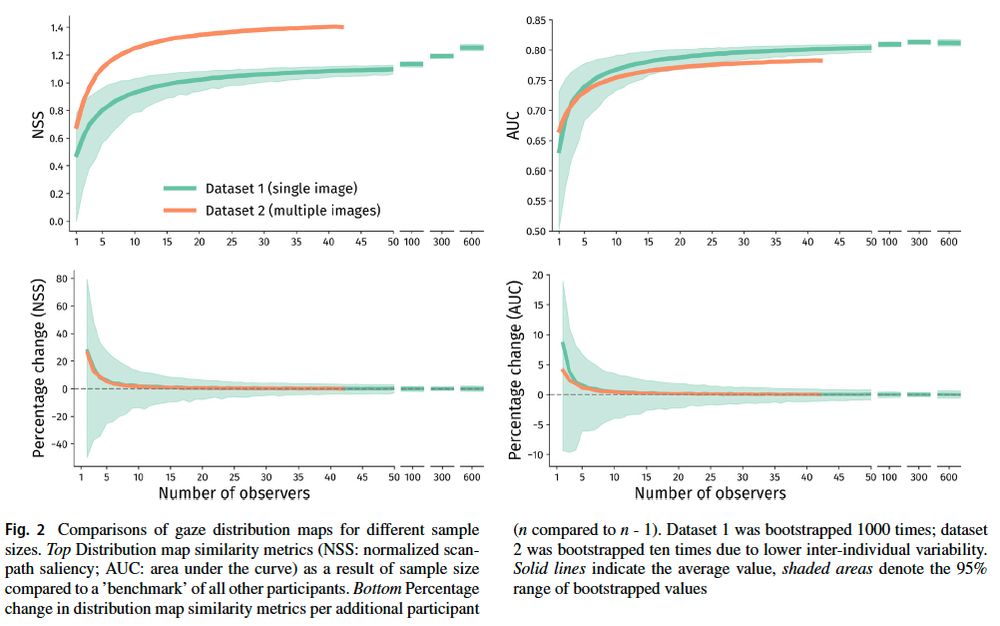

It depends, of course, but our guidelines help you navigate this in an informed way.

Out now in BRM (free) doi.org/10.3758/s134...

@psychonomicsociety.bsky.social

www.rug.nl/about-ug/wor...

Congratulations dr. Alex, super proud of your achievements!!!

The dissertation is available here: doi.org/10.33540/2960

Open Access link: doi.org/10.3758/s134...

presentations today!

R2, 15:00

@chrispaffen.bsky.social:

Functional processing asymmetries between nasal and temporal hemifields during interocular conflict

R1, 17:15

@dkoevoet.bsky.social:

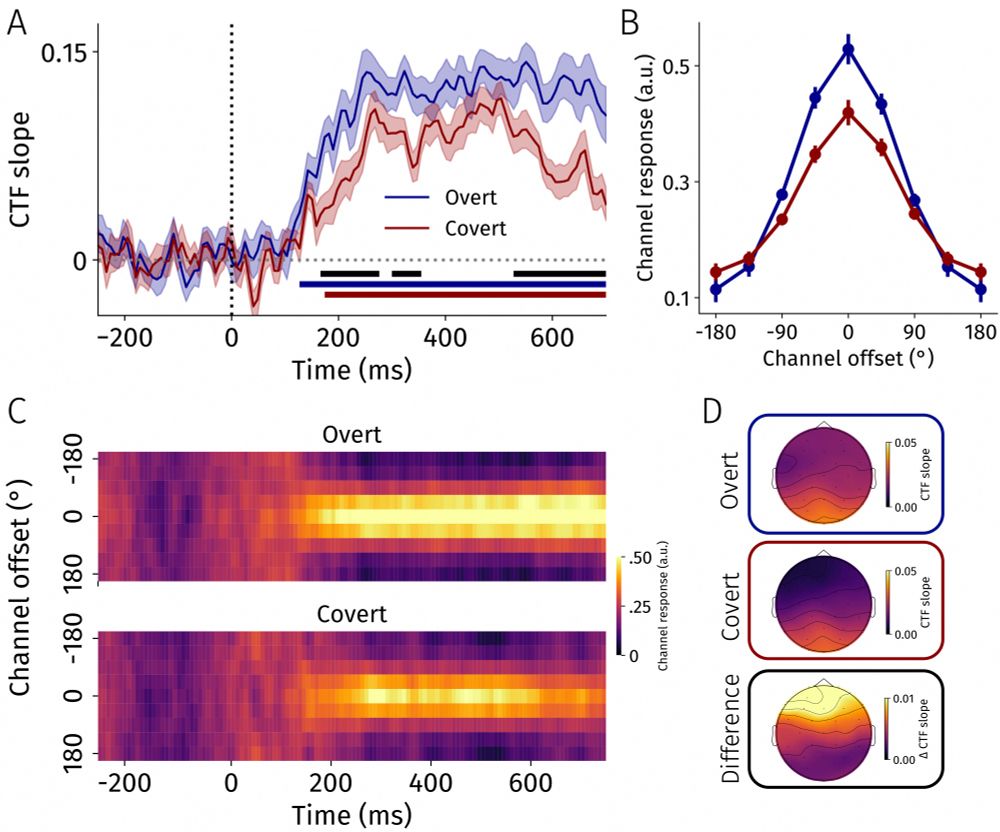

Sharper Spatially-Tuned Neural Activity in Preparatory Overt than in Covert Attention

We show: EEG decoding dissociates preparatory overt from covert attention at the population level:

doi.org/10.1101/2025...

OK, I might be biased but still, reading out different cognitive strategies by combining EEG data, our reaction time decomposition method, and a deep learning sequence model is super cool!

Congrats, Rick, on your first preprint 🥳

If you decide to click on this URL too quickly, you might just skip a cognitive operation! We combine Hidden Multivariate Pattern analysis (HMP) and deep learning (Mamba/State Space Models) to detect cognitive operations at a trial level from EEG data.
