I didn't spend 10 years in the mines of academia to be told that ignorance is morally equal to knowledge.

We know exactly how LLMs work.

- how they are trained (gradient descent)

- the structure into which they are placed (architecture)

- the base arithmetic (matmul, norm, batch norm, and so on)

Jesse Singal has a long history of attacking children, pediatricians, & healthcare providers.

Example: He once disappeared from Twitter for a couple weeks after “accidentally” leaking a minor’s medical records.

EA/rationalism at last seeping into my Wrapped

xkcd.com/3006/

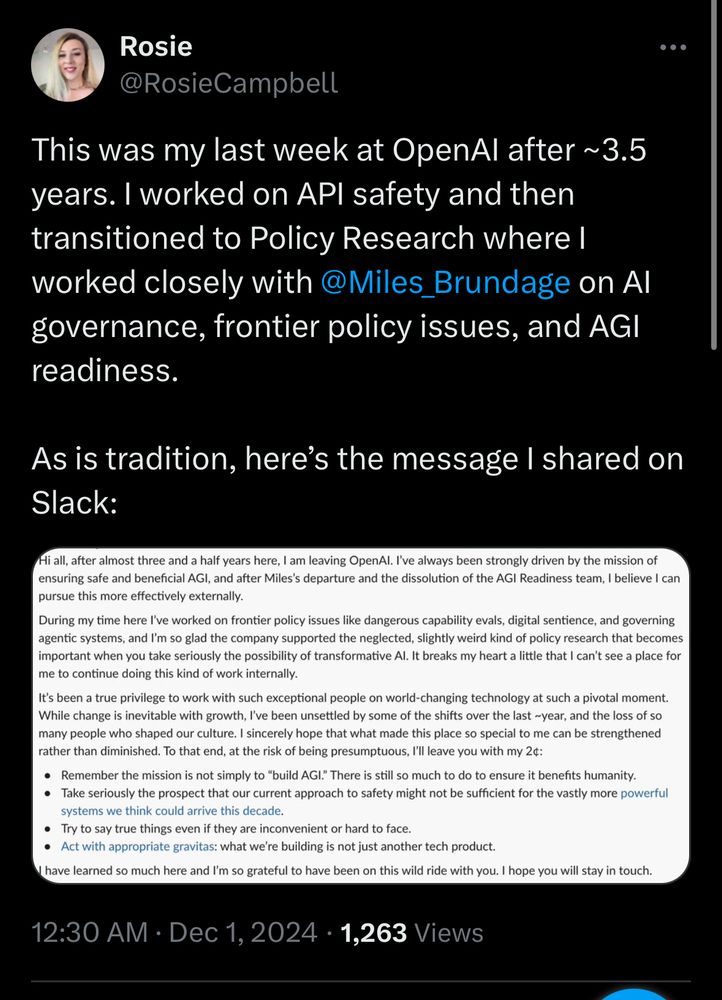

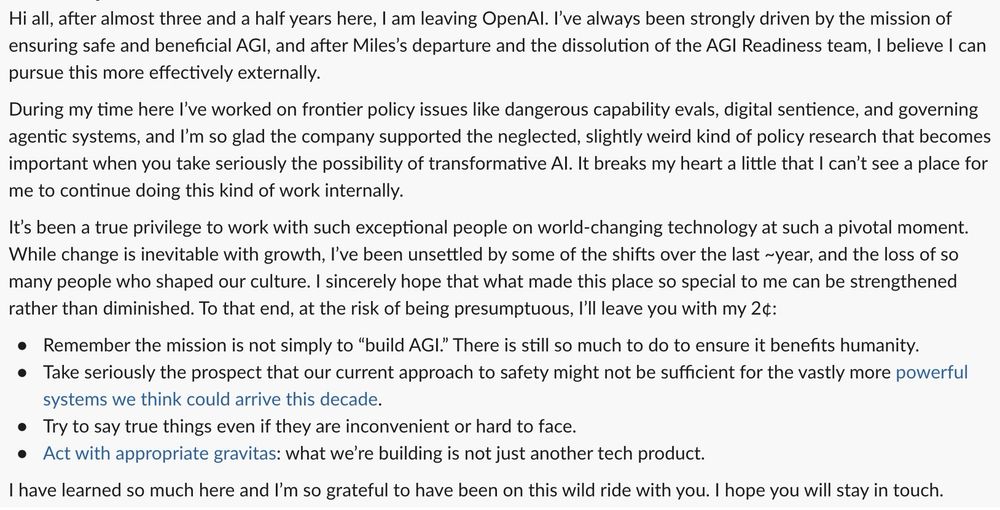

Rosie Campbell says she has been “unsettled by some of the shifts over the last ~year, and the loss of so many people who shaped our culture”.

She says she “can’t see a place” for her to continue her work internally.
