Colour Connect Pte Ltd is goated

large format

1hr

$28

crispy

presenting at the morning poster session on thursday

excited to catch up with friends and collaborators, old and new

let's chat

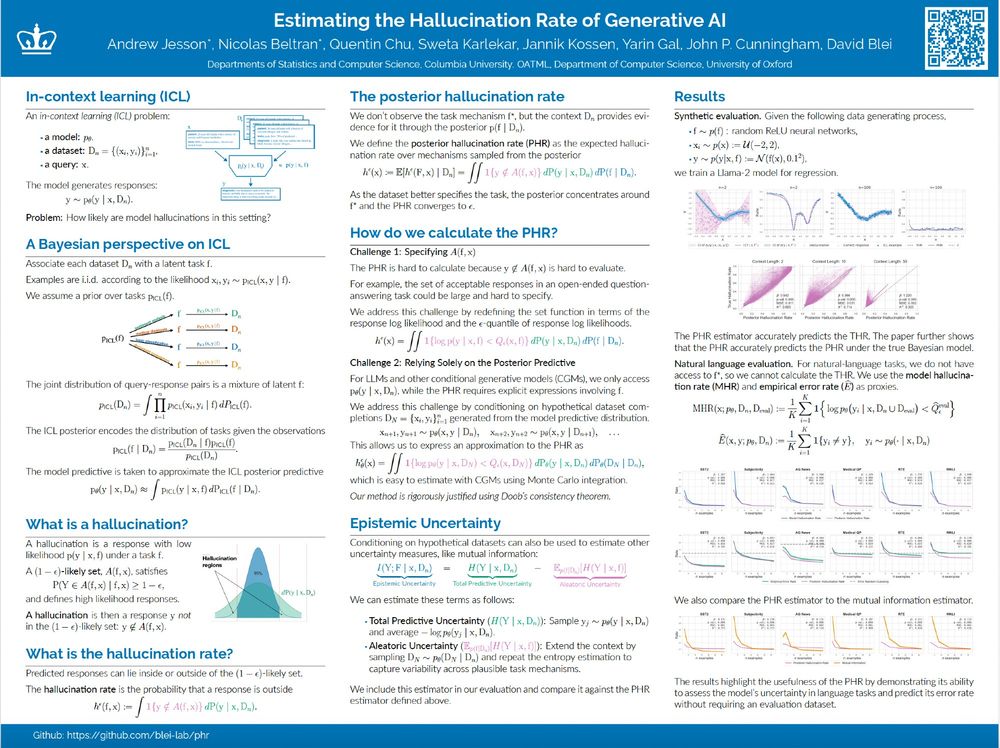

come chat with Nicolas, @swetakar.bsky.social, Quentin, Jannik, and me today

We will be presenting Estimating the Hallucination Rate of Generative AI at NeurIPS. Come if you'd like to chat about epistemic uncertainty for In-Context Learning, or uncertainty more generally. :)

Location: East Exhibit Hall A-C #2703

Time: Friday @ 4:30

Paper: arxiv.org/abs/2406.07457

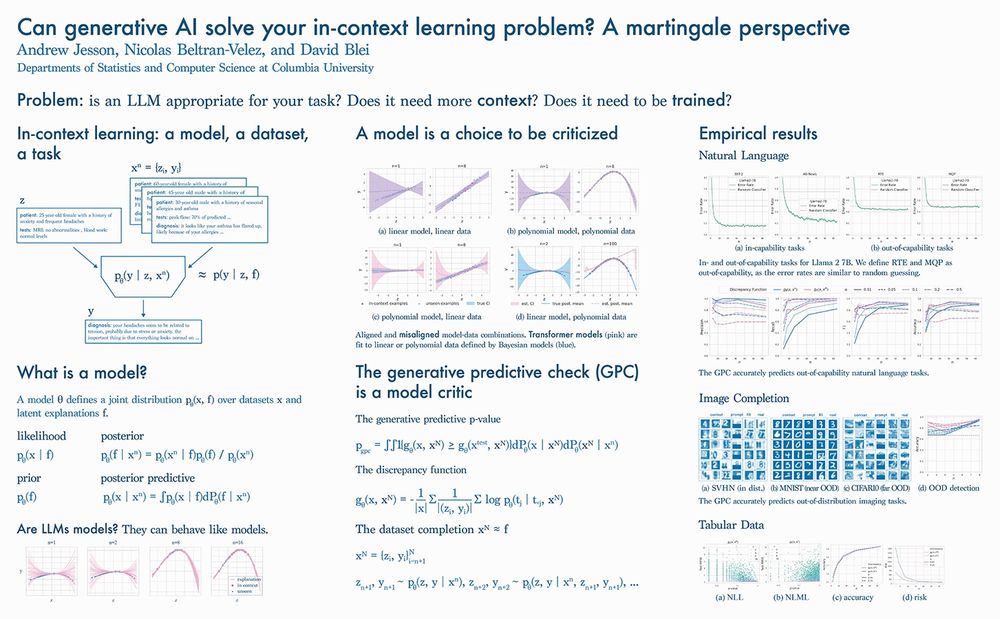

we develop a predictor that only requires sampling and log probs

we show it works for tabular, natural language, and imaging problems

come chat at the Safe Generative AI Workshop at NeurIPS

📄 arxiv.org/abs/2412.06033

does removing it disable the task?

does it contain redundant parts?

don't know?

then come chat about hypothesis testing for mechanistic interpretability

poster 2803 East 4:30-7:30pm at NeurIPS

joint work with @velezbeltran.bsky.social @maggiemakar.bsky.social @anndvision.bsky.social @bleilab.bsky.social Adria @far.ai Achille and Caro

i love openreview

but damn

that's a lot of tabs

Please give us a follow! @bleilab.bsky.social