Working in mechanistic interpretability, NLP, causal inference, and probabilistic modeling!

Previously at Meta for ~3 years on the Bayesian Modeling & Generative AI teams.

🔗 www.sweta.dev

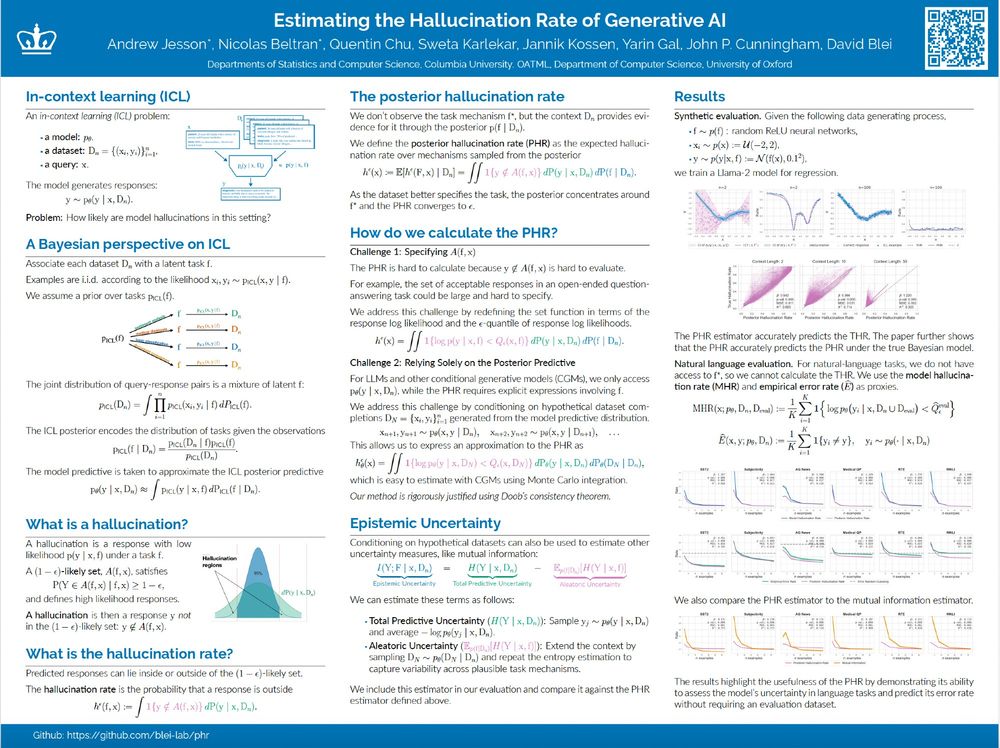

We will be presenting "Estimating the Hallucination Rate of Generative AI" at NeurIPS. Come by if you'd like to chat about epistemic uncertainty for in-context learning, or about uncertainty more generally. :)

Location: East Exhibit Hall A-C #2703

Time: Friday @ 4:30

Paper: arxiv.org/abs/2406.07457

Come chat with Nicolas, @swetakar.bsky.social, Quentin, Jannik, and me today!

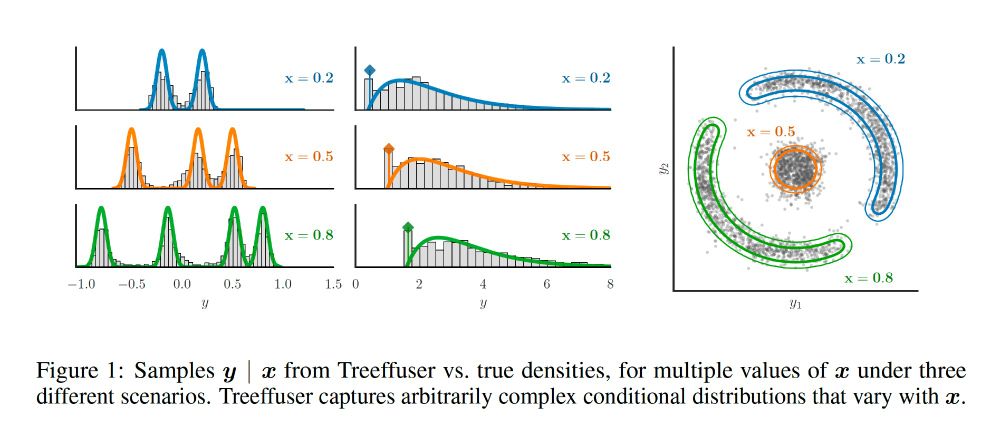

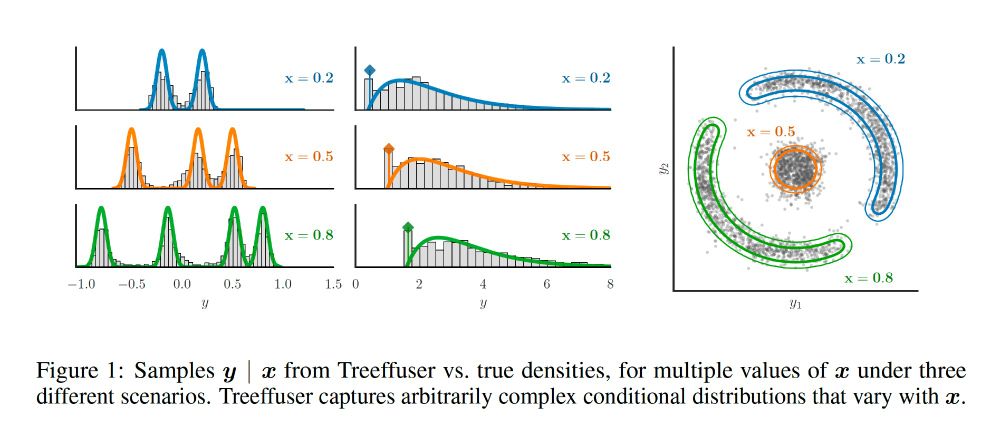

paper: arxiv.org/abs/2406.07658

repo: github.com/blei-lab/tre...

🧵(1/8)

Joint work with @velezbeltran.bsky.social @maggiemakar.bsky.social @anndvision.bsky.social @bleilab.bsky.social Adria @far.ai Achille and Caro

🧵>>

Link: blog.neurips.cc/2024/11/27/a...

www.bewitched.com/demo/gini

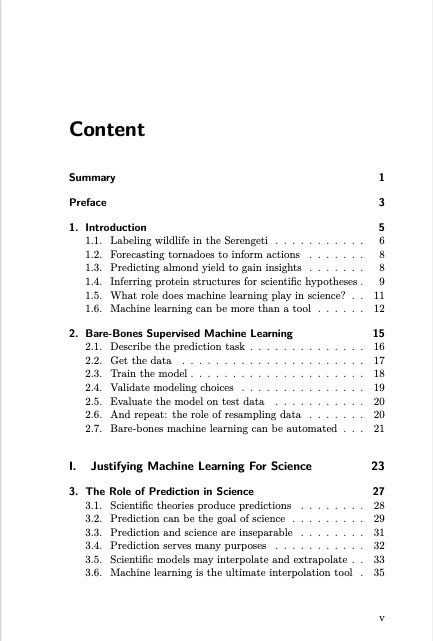

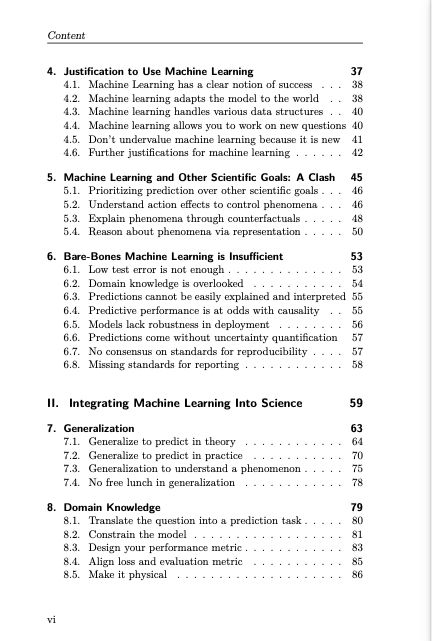

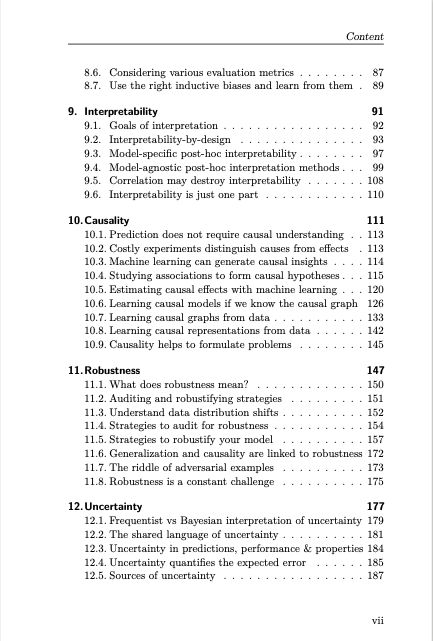

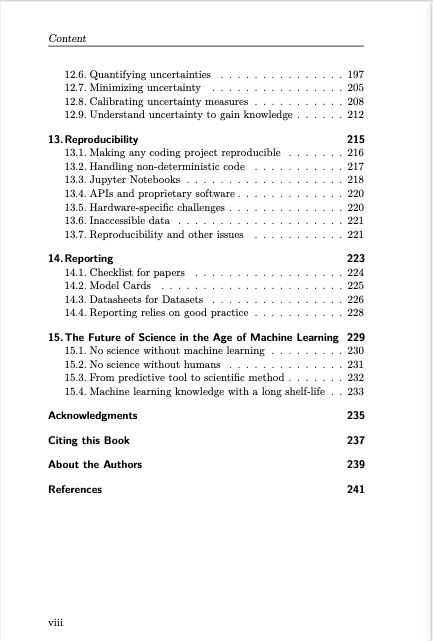

Timo and I recently published a book, and even if you are not a scientist, you'll find useful overviews of topics like causality and robustness.

The best part is that you can read it for free: ml-science-book.com

Let's start with "What are embeddings" by @vickiboykis.com

The book is a great summary of embeddings, from history to modern approaches.

The best part: it's free.

Link: vickiboykis.com/what_are_emb...

Please give us a follow! @bleilab.bsky.social

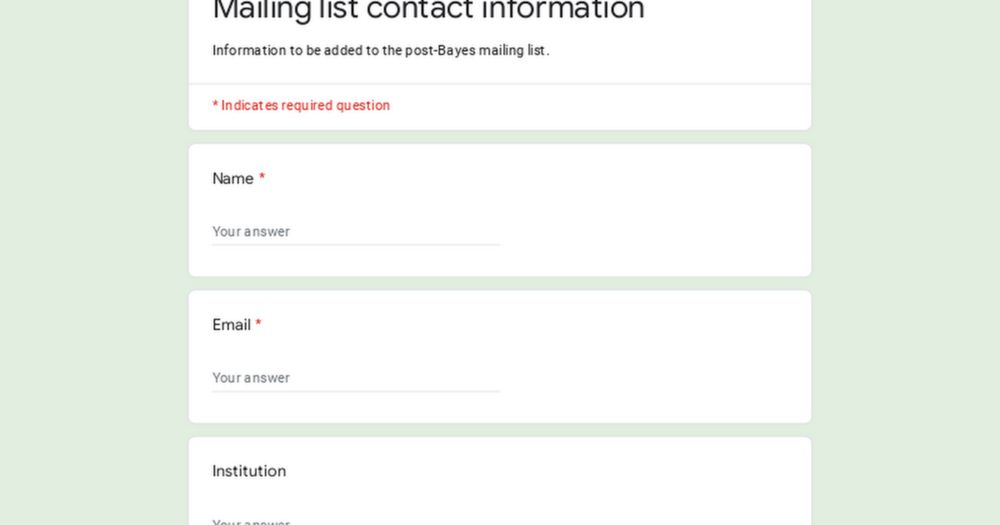

To stay posted, sign up at

tinyurl.com/postBayes

We'll discuss cutting-edge methods for posteriors that no longer rely on Bayes' theorem.

(e.g., PAC-Bayes, generalised Bayes, Martingale posteriors, ...)

Please circulate widely!

AI (*about* AI, not *for* an AI) go.bsky.app/SipA7it

Spoken Language Processing bsky.app/starter-pack...

Diversify Tech's pack bsky.app/starter-pack...

Women in Tech bsky.app/starter-pack...

Great UK Commentators bsky.app/starter-pack...

Linguistics bsky.app/starter-pack...

go.bsky.app/LisK3CP

Saildrone builds ocean drones that collect a ton of oceanic and climate data. And they have some publicly-available datasets on their website for researchers :) 🌊 #mlsky

#AcademicSky #PhDSky

DM/reply if you want to be added!

go.bsky.app/DuCtJqC
