Sweta Karlekar

@swetakar.bsky.social

2.6K followers

1.2K following

31 posts

Machine learning PhD student at the Blei Lab at Columbia University

Working in mechanistic interpretability, NLP, causal inference, and probabilistic modeling!

Previously at Meta for ~3 years on the Bayesian Modeling & Generative AI teams.

🔗 www.sweta.dev

Reposted by Sweta Karlekar

Reposted by Sweta Karlekar

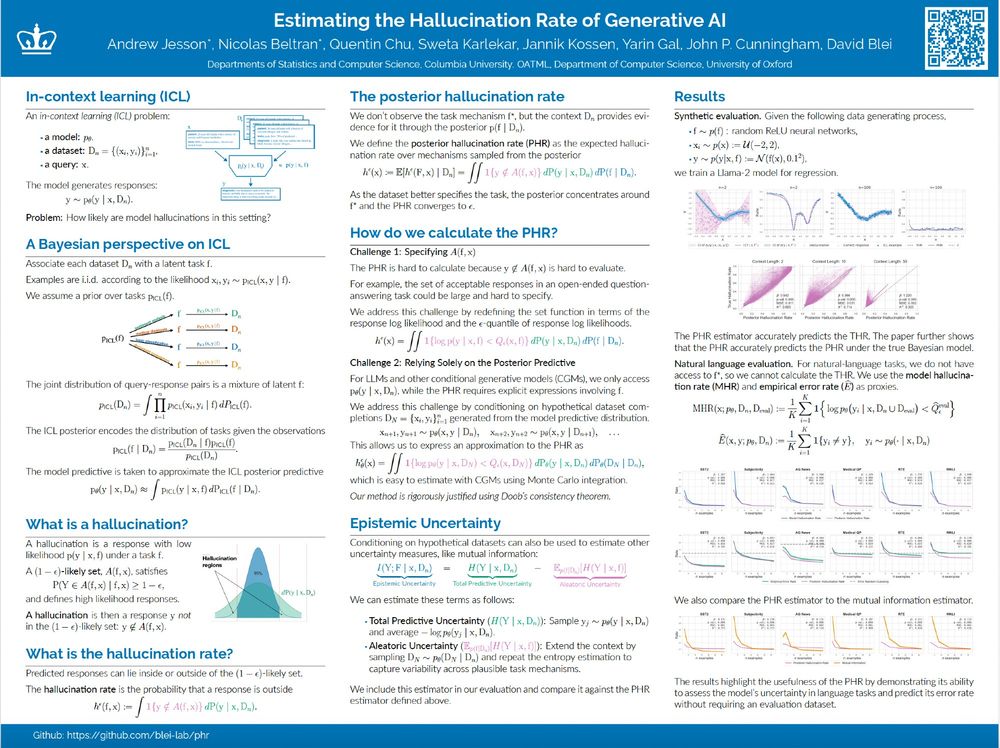

Andrew Jesson

@anndvision.bsky.social

· Dec 13

Reposted by Sweta Karlekar

Reposted by Sweta Karlekar

Reposted by Sweta Karlekar

Sweta Karlekar

@swetakar.bsky.social

· Dec 2

Sweta Karlekar

@swetakar.bsky.social

· Nov 29

GitHub - andrewyng/aisuite: Simple, unified interface to multiple Generative AI providers

github.com

Sweta Karlekar

@swetakar.bsky.social

· Nov 22

Why Can GPT Learn In-Context? Language Models Implicitly Perform Gradient Descent as Meta-Optimizers

Large pretrained language models have shown surprising in-context learning (ICL) ability. With a few demonstration input-label pairs, they can predict the label for an unseen input without parameter u...

arxiv.org

Sweta Karlekar

@swetakar.bsky.social

· Nov 20

An Extremely Opinionated Annotated List of My Favourite Mechanistic Interpretability Papers v2 — AI Alignment Forum

This post represents my personal hot takes, not the opinions of my team or employer. This is a massively updated version of a similar list I made two…

www.alignmentforum.org