go.bsky.app/AKGJ82V

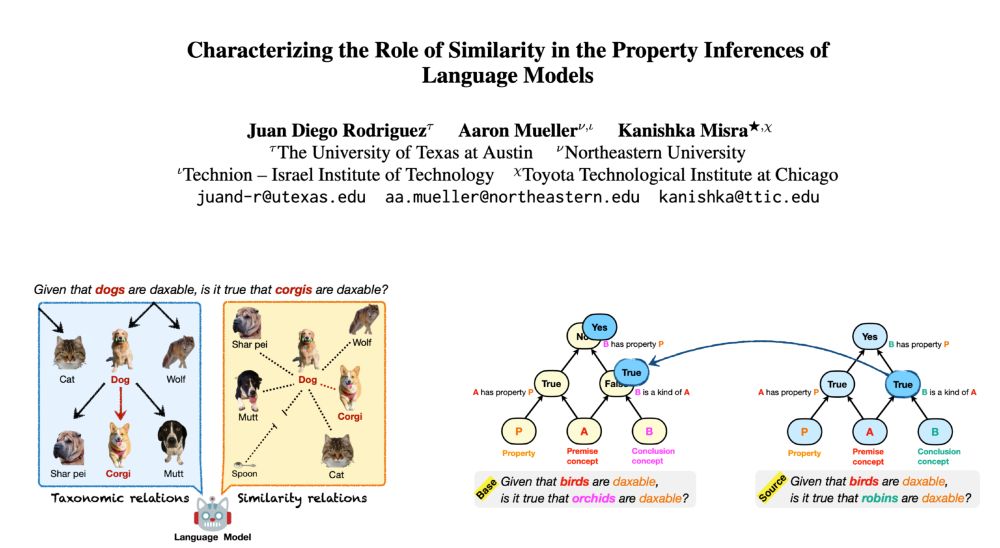

🌟 New paper with my fantastic collaborators @amuuueller.bsky.social and @kanishka.bsky.social

🤖

bskAI

blueskAI
