📍Palo Alto, CA

🔗 calebziems.com

Check out our #EMNLP2025 talk: Culture Cartography

🗓️ 11/5, 11:30 AM

📌 A109 (CSS Orals 1)

Compared to traditional benchmarking, our mixed-initiative method finds more knowledge gaps even in reasoning models like R1!

Paper: arxiv.org/pdf/2510.27672

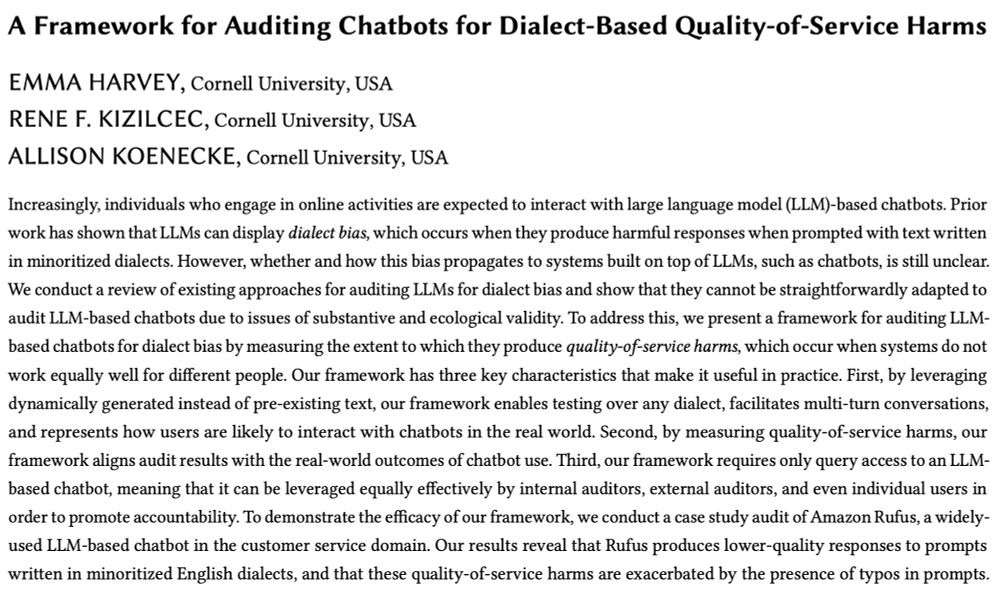

🔗: arxiv.org/pdf/2506.04419

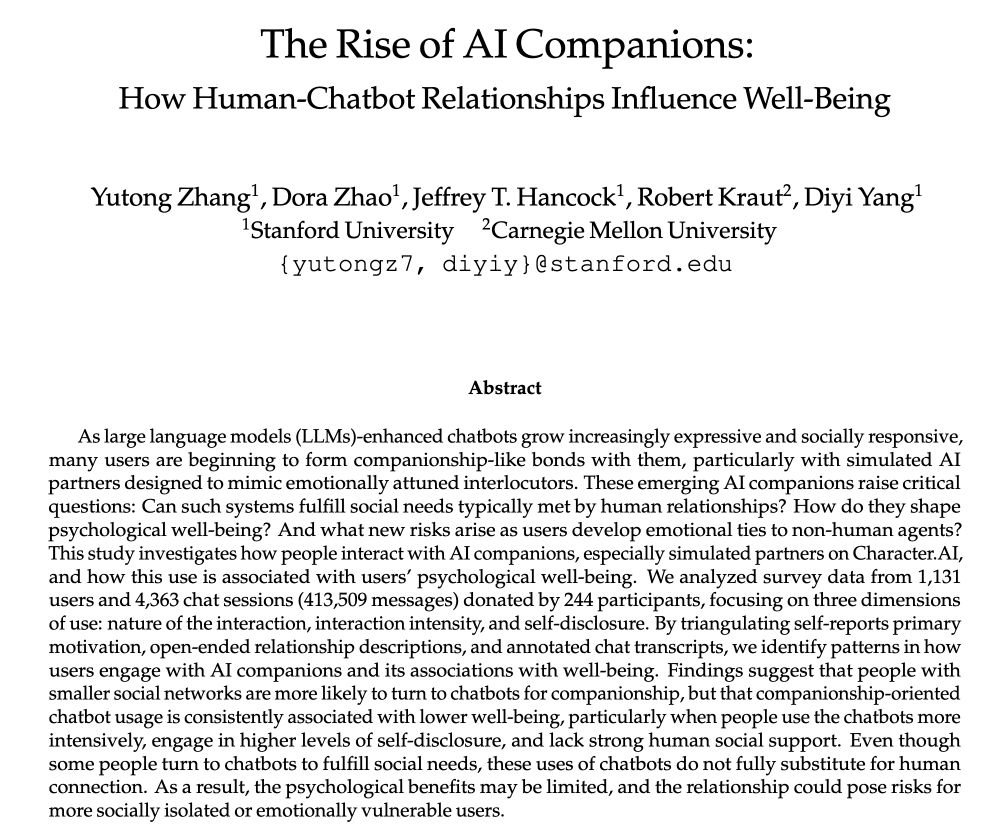

Thousands are turning to AI chatbots for emotional connection – finding comfort, sharing secrets, and even falling in love. But as AI companionship grows, the line between real and artificial relationships blurs.

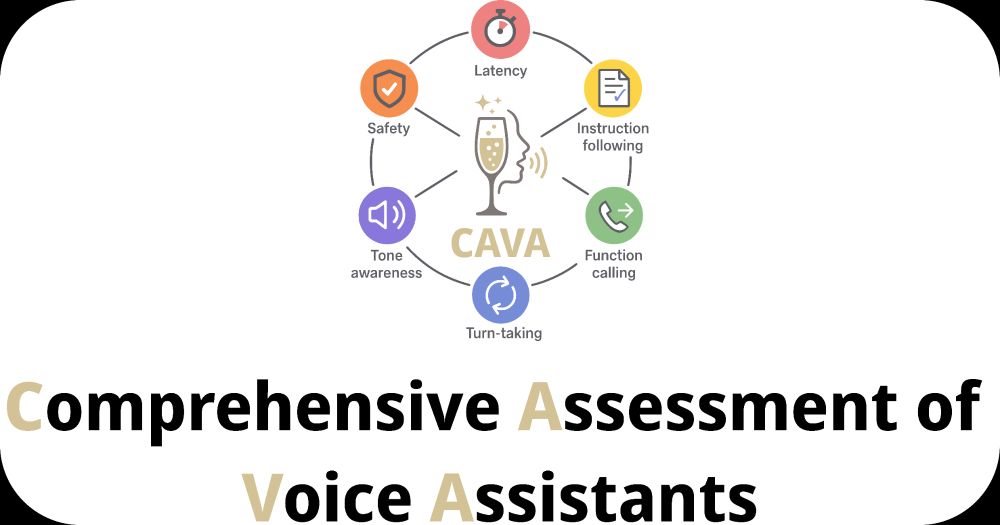

A new benchmark for evaluating the capabilities required for speech-in-speech-out voice assistants!

- Latency

- Instruction following

- Function calling

- Tone awareness

- Turn taking

- Audio Safety

TalkArena.org/cava

Excited to share our paper on biases against African American Language in reward models, accepted to #NAACL2025 Findings! 🎉

Paper: arxiv.org/abs/2502.12858 (1/10)

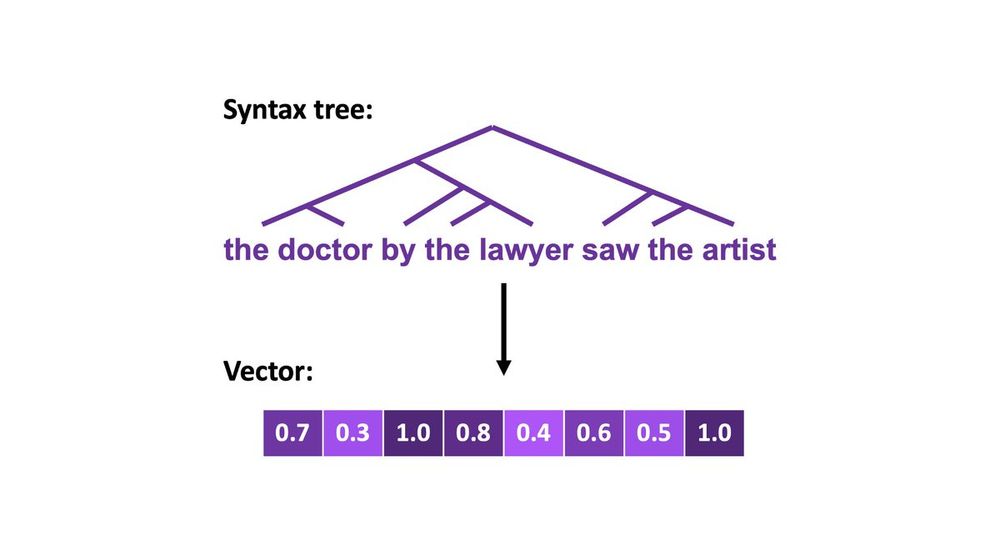

But humans are naturally quite good at this (>90% acc.)

Check it out!

➡️ arxiv.org/abs/2502.20490

A position paper by me, @dbamman.bsky.social, and @ibleaman.bsky.social on cultural NLP: what we want, what we have, and how sociocultural linguistics can clarify things.

Website: naitian.org/culture-not-...

1/n

Introducing Collaborative Gym (Co-Gym), a framework for enabling & evaluating human-agent collaboration! I have gotten used to agents proactively seeking my confirmation or my deeper thinking. (🧵 with video)

I was so lucky to know him, and I am grateful every day that he (and Gillian, and Walt, etc) built an academic field where kindness is expected.

Introducing talkarena.org — an open platform where users speak to LAMs and receive text responses. Through open interaction, we focus on rankings based on user preferences rather than static benchmarks.

🧵 (1/5)

1. most animate objects, men

2. women, water, fire, violence, and exceptional animals

3. edible fruit and vegetables

4. miscellaneous (includes things not classifiable in the first three)

Students do different research, go on the job market, and recruit other students. Ping me and I'll add you!

Boulder is a lovely college town 30 minutes from Denver and 1 hour from Rocky Mountain National Park 😎

Apply by December 15th!

juliamendelsohn.github.io/resources/

Here are some other great starter packs:

- CSS: go.bsky.app/GoEyD7d + go.bsky.app/CYmRvcK

- NLP: go.bsky.app/SngwGeS + go.bsky.app/JgneRQk

- HCI: go.bsky.app/p3TLwt

- Women in AI: go.bsky.app/LaGDpqg

Here is number 2! More amazing folks to follow! Many students and the next gen represented!

go.bsky.app/GoEyD7d

If you are interested in the intersection of linguistics, cognitive science, and AI, I encourage you to apply!

Postdoc link: rtmccoy.com/prospective_...

PhD link: rtmccoy.com/prospective_...

I wrote a book called Because Internet about how we use language online gretchenmcculloch.com/book

I make @lingthusiasm.bsky.social, a podcast that's enthusiastic about linguistics

And I maintain a linguistics starter pack here: go.bsky.app/UUM7Gcx

40 new faculty members

in all areas of AI, particularly:

- accessibility,

- sustainability,

- social justice, and

- learning;

building on computational, humanistic, or social scientific approaches to AI.

📆 Application deadline: 15 Jan 2025

👥 Supervisors: Pepa Atanasova & me

🤝 Reasons to apply: www.copenlu.com/post/why-ucph/

📝 Apply here: employment.ku.dk/phd/?show=16...

#NLProc #XAI

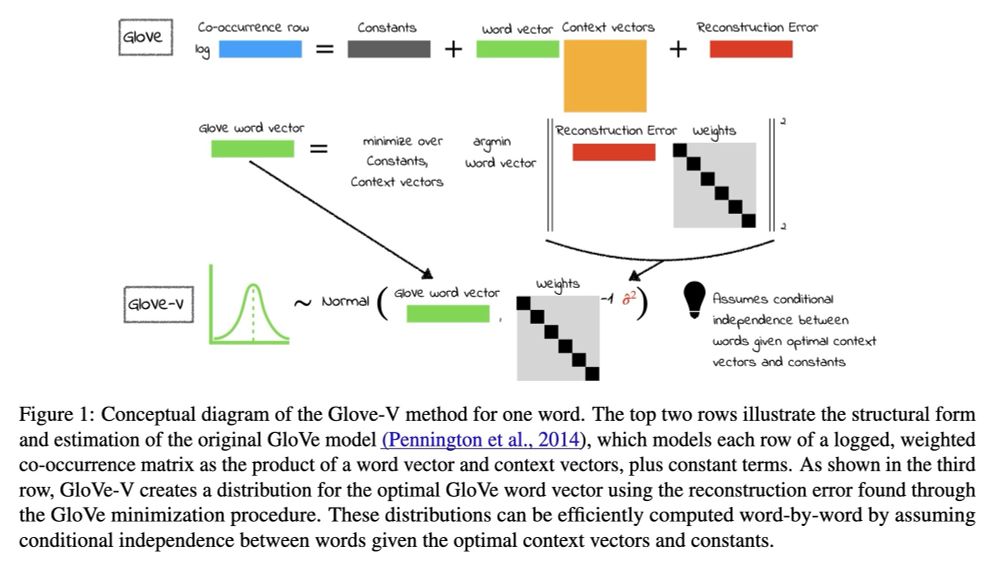

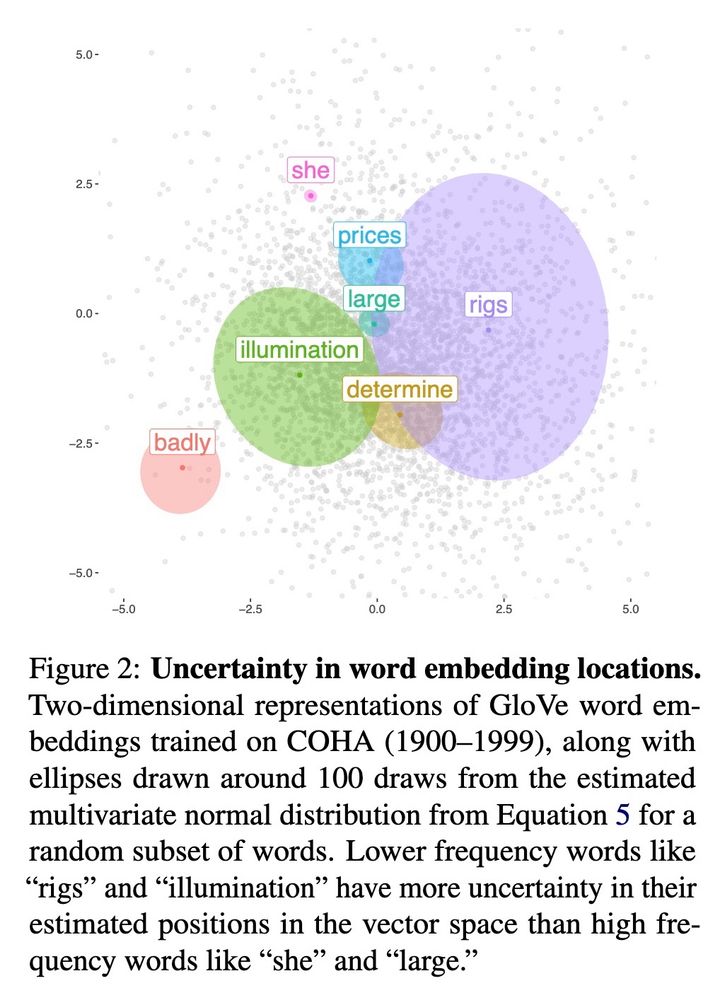

Statistical Uncertainty in Word Embeddings: GloVe-V

Neural models, from word vectors through transformers, use point estimate representations. These estimates can have large variances, which often matter in CSS applications.

Tue Nov 12 15:15-15:30 Flagler
